Everything posted by Starship Krupa

-

Owner of a Lonely Heart (reimagined) plugins

Starship Krupa replied to Eusebio Rufian-Zilbermann's topic in The Coffee House

Phoenix did come in a surround version. Who knows. I’m tickled that one of my favorite musicians/producers would share my taste in reverbs, especially if he’s using Phoenix rather than Nimbus or Stratus (both of which I also have). I still listen to the first Buggles album to this day. He’s a ferocious bass player.

-

Owner of a Lonely Heart (reimagined) plugins

Starship Krupa replied to Eusebio Rufian-Zilbermann's topic in The Coffee House

I'm trying to find where he lists them. Phoenix Reverb (and its successors, Nimbus and Stratus) is the best-sounding reverb I've heard yet.

-

Sonically, I also use them to "glue" similar elements together with similar processing. For instance, let's say I have rhythm acoustic guitar doubled with the same thing played on an electric. I'd send both tracks to a bus and put something like Cakewalk's PCA2 compressor on them so that they share similar dynamics, and use a reverb send from the bus rather than the individual instruments. This helps to create the sonic illusion of a band all playing in one space. I use the heck out of them for "rock band" type recordings, especially on the tracks that come from the 4 mics I use on my drum kit. I don't use them as much with my electronic pieces. With those, it's pretty much the Master bus and that's it.

-

Well, given that DAW's such as Studio One 6 are still copying features that Cakewalk/SONAR has had for years, I don't think that protecting the competitive advantage is a negligible consideration. 😄 Check this out: "Customizable user interface The all-new Customization Editor lets you view only the tools you need for the task at hand by creating a custom user interface that works for you, and you can save your unique customization settings for instant recall. Beginner Customizations Default customizations are available for essential workflows so you can start with only the functions you need and add new tools as you’re ready to learn more. Advanced Customizations Create our own customizations from the default options and hide nearly every tool or feature you don’t need to clear away distractions at any time." So now in Studio One 6 you can have different....spaces....in which to....work, I guess. 🙈🙉 Nice to have the sincerest form of flattery bestowed upon our favorite DAW.

-

4 DAW's, 4 renders, 4 results

Starship Krupa replied to Starship Krupa's topic in Cakewalk by BandLab

Okay, I took my files, and using a tool recommended by @John Nelson, HOFA 4U+ Blind Test, I was able to do a blind listening test. Drawback to the tool: it's a plug-in, so you wind up listening through a DAW's playback engine. At least I used a DAW that didn't automatically copy and convert imported audio files (I know Cakewalk can be set up like that). Results: I couldn't reliably pick a favorite. Conclusions, lessons learned: For the It Is Carved In Stone: All DAW's Sound Alike crew you can add it to the "some guy on a forum tested it" file, I suppose. For me, who is kinda agnostic on the matter (I believe that it is possible for two different DAW's to sound different), my conclusions are that first, it is really hard to come up with objective ways to test this. You can use sine waves, square waves, impulses, whatever. But it's also really hard to get two DAW's to sound alike. Which suggests that in practice, given similar projects produced using different DAW's, they will inevitably sound different in ways that have nothing to do with how the programmers chose to implement Fourier's theorems. This way and that, they nudge you in certain directions. So another conclusion is that if you sit down and compose and mix an ITB electronica project, doing it with FL Studio vs. say, Ableton Live! will produce sonically different results. Same with a band recording. Even the way the meters look and respond will influence decisions you make about setting levels and so forth. If you're in doubt, it's easy enough to just try it: download the free or trial versions of the software you're considering and mess around with the kind of project you usually do and pay attention to how they sound. If one stands out, there ya go. It's your ears that need to be pleased, even if it's ultimately down to a placebo effect. Hit records and audiophile material have been created in a dozen different DAW's. 
Some people with very talented ears claim to be able to hear a difference; Ray Charles famously chose Cakewalk SONAR out of all the ones he listened to. Mr. Charles certainly wouldn't have cared what the program looked like. Another lesson (not so much learned as confirmed) is that when doing a final mixdown and render of any project that includes randomized elements like arpeggiators, glitch FX, even (or maybe especially) reverb, multiple renders will be different. So do what I've been doing since day one: render once to lossless, convert as needed. If you do a render for each format you wish to distribute, they will all differ from each other, even if it's just subtly. But I don't think anyone wants the M4A to sound different from the FLAC which sounds different from the MP3. For the conversion task, I like to use MediaHuman Audio Converter. I'm also investigating AuI ConverteR 48x44. And for heaven's sake, although nobody has ever paid attention to me on this, when you're listening to your rendered files, use a music player that can at least use WASAPI Exclusive, preferably ASIO. Music Bee, AIMP, Foobar, etc. Windows' internal mixer is known to have a negative effect on audio that's sent through it, and it's best to bypass it (as we do in our DAW's).

-
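The "render once to lossless, convert as needed" advice can be sketched as a small script. This is only an illustration: it swaps in the ffmpeg command line instead of MediaHuman Audio Converter, the file name `Thwarp.flac` is hypothetical, and the quality settings are arbitrary placeholders. The function only builds the command lines; nothing is executed until you pass them to `subprocess.run`.

```python
from pathlib import Path

# Assumed encoder settings for illustration only; adjust to taste.
ENCODER_ARGS = {
    ".mp3": ["-c:a", "libmp3lame", "-q:a", "2"],  # LAME VBR quality 2
    ".m4a": ["-c:a", "aac", "-b:a", "256k"],      # AAC at 256 kbit/s
}

def conversion_commands(master, extensions):
    """Build one ffmpeg command per target format, all reading the same master."""
    master = Path(master)
    commands = []
    for ext in extensions:
        target = master.with_suffix(ext)
        commands.append(
            ["ffmpeg", "-i", str(master), *ENCODER_ARGS[ext], str(target)]
        )
    return commands

if __name__ == "__main__":
    # Hypothetical master file name; every derived format comes from this one render.
    for cmd in conversion_commands("Thwarp.flac", [".mp3", ".m4a"]):
        print(" ".join(cmd))
```

Because every distribution format is derived from the same lossless file, any randomized elements in the project are frozen once, in the master render.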

Stutters and Glitches while recording

Starship Krupa replied to David DeLisle's topic in Cakewalk by BandLab

Binney, could you tell us what settings you had to change or what program(s) you had to disable to get your results? It would benefit future searchers.

-

Please add "End of Song" functionality

Starship Krupa replied to SloHand Solo's topic in Feedback Loop

It will stand a greater chance if you submit it to Support. The devs do participate in the forum but they don't read everything, and zombie topics like this one are some of the least likely to be noticed.

-

Take a look at this topic:

-

Well, I wasn't really in the market for such a solution. I play drums, and coming up with my own beats is a big part of the fun of making a song. I have a license for MDrummer because I have a license to use all of their products. What I'd like to be able to do is treat it like I do Boom or Break Tweaker: mostly program my own beats but sometimes use one or two of their canned measures, or program my own measures into the slots and trigger them. I think Roland's beat boxes worked this way from about the TR606 on. You could do single notes, make patterns out of notes and make songs out of patterns. And they had about half of the pattern slots pre-loaded with factory beats. I've never done very well with song creation tools; it always feels like they want me to already know too many things about the song. I've never been someone who could write an outline of something first. In school when a writing assignment called for an outline, I'd always have to finish writing the paper, then write the outline using the paper as a guide. Which I suspect is not the way they envisioned it happening.

-

@Jochen Flach has a small number of videos, but he is a film/trailer composer and his stuff gets into MIDI editing for that context.

-

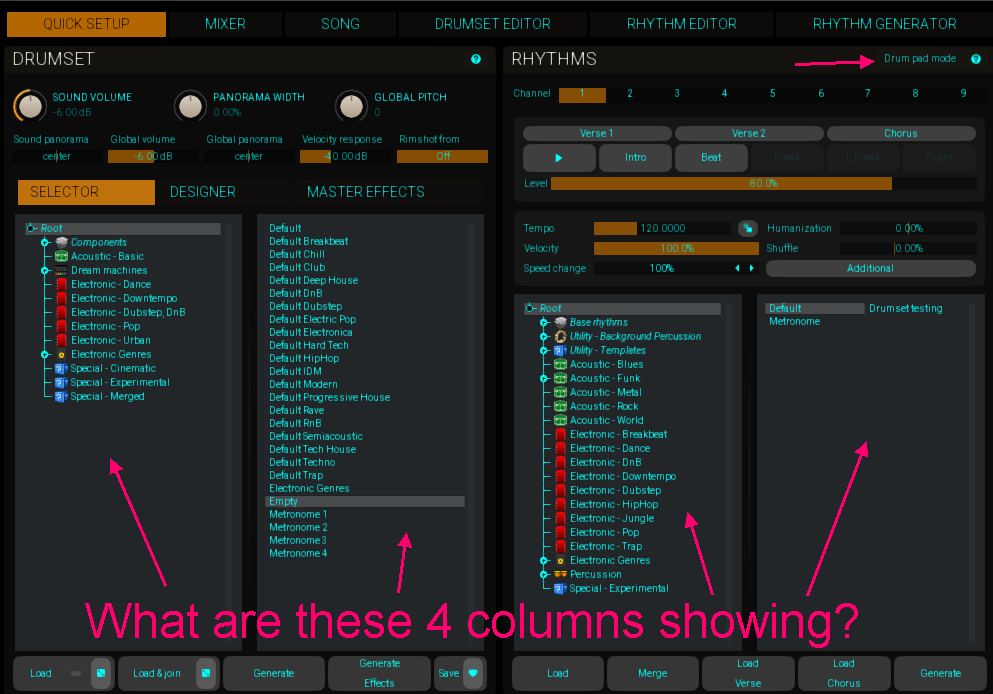

Story checks out. I like the way MDrummer sounds, and heaven knows it comes with enough samples to mean that I'll never have to worry about whether I can get a sound or not. However, I've not been able to use it in any way except "pad mode" as a dumb sample player. All of the creating songs, yada yada is so far unfathomable. The drum machine paradigm I'm used to is that the first couple of octaves on my keyboard are mapped closely to the GM kit standard, then they skip an octave and those keys start triggering different variations of whatever pattern you've got loaded. And there will be intro, outro, verse, chorus, fills and variations of these. No mode switching, you can play it as a drum kit with your left hand and trigger patterns with your right hand. I know that MDrummer is full of great rhythms, whole songs even, and they're broken down into sections, but as far as being able to use those sections, I'm toast. I can get it as far as being able to play and loop one of its internal songs' intro, but only the intro. Can't figure out how to trigger the verse or chorus beats or anything else. That's why I never bought it; what happened the first few times I tried it was that I somehow made it start playing and then couldn't shut it off, and I get unusually angry when I can't turn a racket-making device off (think Han Solo: "It was a boring conversation."). And I could never remember how to get it into pad mode. So I'd install it in demo mode, fail to understand why it wasn't responding to the GM keys, then maybe get it into pad mode for a little bit. Then I'd start clicking things, get an intro playing, forget how to put it back into pad mode and decide that having a canned drum pattern intro jamming away while I failed to figure out how to stop it short of muting its track was a sign that we were just not compatible. I don't usually buy things that anger me while I'm demo'ing them. 
MeldaProduction's plug-ins are known for being complex, and often also having somewhat obscure controls. I'm a fan of them and I agree. Doesn't bother me until clicking one of those controls results in a canned drum intro racket that I can't stop. I use Piano Roll and Step Sequencer to program beats anyway, so pad mode is fine until I have a free week or two to figure out its advanced features. Here's the interface it presents when you first open it. The controls seem to have been placed just this side of "randomly," and see that little tiny "drum pad mode?" It vanishes once you switch to any view other than the optimistically-named "Quick Setup." 4 huge tree directories dominate the UI, and there are no labels on them indicating what they are listing (I finally figured them out via trial-and-error). As with all Melda plug-ins, clicking on the global help button opens a text file that starts with 1/2 page on how to load a global preset (which, BTW, are not what is being shown in any of the 4 big columns), then each of the generic controls on the header....and it's just too depressing to try to wade into documentation this obtuse. It's a freaking drum machine, it has no right to be harder to figure out than Break Tweaker. I think someone finally straightened out Vojtech on how people usually want to start with something simple and then only go to the advanced features when they want or need to really dig into something (I imagine him being someone who gets more of a thrill looking under the hood of a sports car than driving it). MDrummer is likely from before that time.

-

One little problem with this software: it's a plug-in, and therefore has to run inside a host. So I'd be listening to my different audio files as presented by a single host's playback engine. Music Bee, one of my favorite bit-perfect audio players, does allow hosting of VST's, but it's still a 32-bit application, and HOFA have graduated to the 64-bit only category. I also found this: https://lacinato.com/cm/software/othersoft/abx But it plays back using system audio rather than WASAPI Exclusive or ASIO, so I'd be trying to do critical listening through the Windows mixer.😣

-

Neither of the FX would do much for a classic rock production. Glitchmachines' stuff is great for messing about and coming up with unusual sounds. They're notoriously hard to get a handle on, but I don't mind that. They're kind of the ultimate in "twiddle knobs and see what happens." Stutter Edit (along with Image Line's Gross Beat and MeldaProduction's MRhythmizer) is a gold standard plug-in for creating the sort of stutters, tape stops, reverses etc, that producers throw into EDM songs as seasonings. I use MRhythmizer for this more than I use Stutter Edit, but I still consider it a good thing to have in my production toolkit.

-

Absolutely agree. Which is why, when I put up my rendered files, I gave them arbitrary names. The remaining issue now is, ironically, that this doesn't do me any good, because I know what my naming system is. Maybe I'll look around, there must be some software that allows double blind testing of audio files to be done by the computer. Maybe MCompare? I'll check and see. Speaking of tests, Rick Beato famously set up a test to "prove" that even trained listeners like his studio assistants couldn't hear the difference between lossless audio and MP3's at various bit rates (I may not be remembering this exactly). Great idea. The conclusion that he came to is that they couldn't tell the difference. But IMO, the test was useless. He was doing it using a web browser, from another site on the web. Why is that useless? Because web browsers have their own CODEC's for processing audio, and then after that, the audio goes through the OS' mixer. This is similar to running your DAW using the MME driver. An OS' mixer does all sorts of resampling and crap due to having to manage so many different audio streams. That's one of the reasons we use ASIO or WASAPI Exclusive. WASAPI Exclusive bypasses the Windows mixer. Resampling algorithms are not created equal. There is objective proof of this on the SRC comparisons website that nobody ever seems to check out when I post the link. They tested a variety of audio programs' sample rate converters using sines and impulses. The results show some of them throwing off harmonics, ringing, all kinds of crap. I'm not going to bother posting the link, because when I do, nobody ever comments about having checked it out. I'd like to be clear, BTW, that I really, really don't want there to be any sonic difference between DAW's. So when I happened to hear a difference, it caught my attention.
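The "I know what my naming system is" problem can be handed to the computer. Here is a minimal sketch, assuming the renders are plain files: a script assigns the anonymous labels at random and keeps the key hidden until the guesses are in. The file names, the `Sample A` label scheme, and both function names are made up for illustration. This is a simple blind-labelling helper, not a full ABX tool.

```python
import random

def blind_labels(filenames, seed=None):
    """Map each real filename to an anonymous label such as 'Sample A'.

    Supports up to 26 files, since labels are single letters.
    """
    rng = random.Random(seed)
    shuffled = list(filenames)
    rng.shuffle(shuffled)
    labels = [f"Sample {chr(ord('A') + i)}" for i in range(len(shuffled))]
    return dict(zip(shuffled, labels))

def score(guesses, key):
    """Count how many guesses (label -> filename) match the hidden key."""
    truth = {label: name for name, label in key.items()}
    return sum(1 for label, name in guesses.items() if truth.get(label) == name)

if __name__ == "__main__":
    # Hypothetical render names; leave seed=None so the assignment is unpredictable.
    key = blind_labels(["daw1.flac", "daw2.flac", "daw3.flac", "daw4.flac"])
    print(sorted(key.values()))  # show only the labels, never the key itself
```

In practice the script would also copy each file to its label name and write the key somewhere you promise not to look until you have written your guesses down.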

-

Scott has a niche there. He works in a genre that seems kind of underserved (not "undeserved!") on YouTube. There's....plenty....of pop r 'n' b stuff on there, which, to be fair, uses slick production techniques to the hilt, but it's not a genre that I much care for, at least not in its current state.

-

Even Windows 10's Explorer has this functionality built in. I've had to use it from time to time to round up stray files.

-

Indeed they are. But all (physical) mixers don't behave and sound alike, although there was a time when most people believed that they did. And my questions have to do with what choices (and compromises) they make when copying said mixer. I was once a professional software QA engineer (at Macromedia/Adobe), and I know something about how programmers approach problems. Everyone wants to implement their own great ideas, and there's no magic hand that comes down out of the sky and slaps their hand when they decide not to adhere to accepted principles or practices. Here's Harrison on their claims about how their DAW, Mixbus, supposedly sounds "better" than other DAW's: "When the digital revolution came, we were asked to convert the analog "processor" into a digital processor, while leaving the control surface unchanged. Film mixers wanted the control surface to work and sound exactly like the analog mixer they were using for previous projects. This required us to develop a digital audio engine that operated and sounded exactly like the analog mixer they were using for previous projects. This transition was not undertaken by any other company, and it has provided us with techniques and proprietary technology that we have incorporated into all of our high-end mixers. Mixbus gives us an opportunity to share this technology with a much wider range of users." Sure, it's a marketing blurb. And so what? A pile of amateurs having a big debate. Gearspace is known for being a big weenie-waving fest. Yeah, I get that some people are tired to death of this discussion. "Gawd not this again!" So don't follow it! The culture over here is blessedly different. I 100% agree that if one is going to worry about some aspect of the sound resulting from their recording and mixing process, there are many many many many things to be concerned about before you get to "hmmm, I wonder if there is a difference between how different DAW's sound. I better get the 'best' one." 
The answer to that (from me) is if you're really concerned about it to just frickin' try out the DAW's you're considering. They all have either trial or freebie versions. If you hear a difference between Pro Tools First, Cakewalk, Ableton Live! Lite, REAPER, et al, then go with the one that sounds best to you. Don't go by the "wisdom" of a bunch of guys (and they're always guys) trying to top each other in some discussion. That's my approach: if you're curious, if it's important to you, then try it. I did, recently, and was brave enough to post about my progress through the process. One of the conclusions that I and the people sharing in the discussion have come to seems to be that it's really hard to design tests that themselves do not introduce difference. Let's say someone wants to test the summing engine. If they go with already-created audio material, well, that right there bypasses the DAW's own recording engine. Then most DAW's want to convert imported audio files. There's another point of failure, at that point we're also testing their conversion algorithm. And so on and so on. It's really hard to do, and I'll bet that way less than 1% of people who debate this have ever actually tried to test it themselves. People get hung up on making it 100% absolutely objective. So my approach (in that other thread) is to loosen up a bit on trying for an ideal, and go with actual musical program material, generated by the DAW using plug-ins, entirely in the box. People have pointed out that my methodology is imperfect, but my methodology imitates how actual people use music software. We don't record pure sine waves and square waves from test equipment. We record and mix complex program material. I'm flailing around trying it right now, and as far as I'm concerned, the other people weighing in have been really cool about it. They're keepin' me honest. 
But I'm also emphasizing that what I'm coming up with is imperfect and not intended to prove anything other than maybe that it's really hard to design and implement a test for this. I posted the renders that came out of my exploration for anyone to listen to if they are curious. So now I'm one of those mythical guys (and we're always guys😂) on a forum somewhere who tried testing it. And my "conclusion" is that objective tests are next to impossible. Which is kind of as it should be. When we're trying out other music tools, we're certainly not objective. We noodle around and listen, we claim that blue guitars with gold hardware are ugly, etc.

-

4 DAW's, 4 renders, 4 results

Starship Krupa replied to Starship Krupa's topic in Cakewalk by BandLab

Revised the package of files again. I listened to them and Thwarp C.FLAC sounded as if it were lower in volume than the others, so I opened the DAW project to check. All of the track levels were set to what my instructions call for....except the fader for the Master bus, which was set to -3. No idea how it got that way. I never deliberately touched it. Thwarps Beta 3

-

4 DAW's, 4 renders, 4 results

Starship Krupa replied to Starship Krupa's topic in Cakewalk by BandLab

At least once?? 😂 Yes, I've tweaked compression settings to perfection using bypassed compressors. About once a month. I expect the frequency to increase as I get further past 60. Ah, but the thing about this scenario is that tweaking the EQ and compression on one track will affect the sound of another track. Right? Make some cuts or compress over here, and then suddenly the track over there starts popping out in the mix. It can even happen with things like delay and reverb where the track we're working on gets moved back in the mix. In the Jenga game of mixing, what affects one track usually affects the others. I stumbled across that one, and while I already "knew" it intellectually due to studying masking and how to reduce collisions, the experience of tweaking the EQ on one track and hearing the results on another track was the revelation moment. 🎆 Okay, here are the revised files.

-

4 DAW's, 4 renders, 4 results

Starship Krupa replied to Starship Krupa's topic in Cakewalk by BandLab

Yes, for it to produce objective results. I'm working backward, trying to figure out why I heard a difference. Part of that is eliminating as many variables as I can and then listening again as well as submitting my renders for peer review, which in the case of apparently only one person snagging them before I took down the link, has already yielded valuable feedback. I have no illusions that I'll be able to eliminate all variables when working with virtual instruments. In the end, stock patches always have something like reverb or chorus baked in. I'll likely be submitting the anomalies you noticed to MeldaProduction. They should know that there are a couple of DAW's whose "zero all controllers" schtick messes up MSoundFactory's arpeggiator at render time.

-

4 DAW's, 4 renders, 4 results

Starship Krupa replied to Starship Krupa's topic in Cakewalk by BandLab

I know you said you only skimmed the thread, but I've explained in detail that I'm not trying to prove or disprove a difference in engines. I fully admit and concede that it's not likely to result in anything other than an interesting exercise. My process here is a "wrong end" approach. It's like the Air Force's UFO investigation project. I heard something and I'm trying to eliminate/minimize as many variables as I can. It's also a method used by pro QA engineers, which I once was. Observe a blip and then try to come up with some way to reproduce it. I've also disclosed that I do think it's possible that there's something about how a DAW implements things that can result in a difference in perceived sonic "quality." For a subject that so many contend was laid to rest such a long time ago, this does seem to be getting a lot of attention. And I do appreciate the lack of dismissiveness toward my flailing about. As for using audio files, yes, I may do that at some point. However, when I heard the difference between the two DAW's, it was when using virtual instruments. That gave me the idea that there might be something about how the DAW's were implementing their hosting of virtual instruments, and from that point forward, well, can't divert a fool from his folly. 🎿

-

4 DAW's, 4 renders, 4 results

Starship Krupa replied to Starship Krupa's topic in Cakewalk by BandLab

Wow. Okay, I'm not going to be able to get back to this for several hours, but here's yet another thing I learned: MSoundFactory was changing the behavior of one of the arp patches I used in response to being told to "zero all controllers." Two of the DAW's were set to zero all controllers on stop. So that synth track is there, it's just not doing the lively arp behavior it's supposed to. Thanks again to Bruno for noticing this before I did. This now has me wondering if the famous "Suddenly Silent Synth Syndrome" is a condition that some VSTi's get into and don't recover from when you throw the "zero controllers" thing at them. Is "zero all controllers" a single message like "all notes off" or does it send individual zeroes to each controller? This kind of thing is part of why I did this: I just never know what I might learn. It could be quirks of various synths I try, default or optional MIDI behaviors of the hosts, whatever.

-
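On the "single message or individual zeroes" question: the MIDI 1.0 spec does define a single "Reset All Controllers" channel mode message (controller number 121), in the same family as "All Notes Off" (controller number 123). Both are per-channel Control Change messages. What any particular DAW actually transmits when it "zeroes controllers" on stop is not established in this thread, so the second function below is only a hypothetical alternative for comparison. It sends explicit zeroes for mod wheel and sustain, plus a centred pitch bend.

```python
RESET_ALL_CONTROLLERS = 121  # channel mode message in the MIDI 1.0 spec
ALL_NOTES_OFF = 123          # channel mode message in the MIDI 1.0 spec

def control_change(channel, controller, value):
    """Return the 3 raw bytes of a Control Change message (channel 0-15)."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("channel must be 0-15, controller and value 0-127")
    return bytes([0xB0 | channel, controller, value])

def reset_all_controllers(channel):
    """One message per channel: the spec's 'Reset All Controllers'."""
    return control_change(channel, RESET_ALL_CONTROLLERS, 0)

def zero_individually(channel, controllers=(1, 64)):
    """Hypothetical alternative: explicit zeroes for mod wheel (CC 1) and
    sustain (CC 64), followed by a pitch bend set to its centre position."""
    messages = [control_change(channel, cc, 0) for cc in controllers]
    messages.append(bytes([0xE0 | channel, 0x00, 0x40]))  # bend centre = 8192
    return messages

if __name__ == "__main__":
    print(reset_all_controllers(0).hex(" "))
    print([m.hex(" ") for m in zero_individually(0)])
```

Either way, how a synth responds is up to the synth, which would fit an arp patch that changes behavior after receiving a reset.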

4 DAW's, 4 renders, 4 results

Starship Krupa replied to Starship Krupa's topic in Cakewalk by BandLab

Crap! Thank you, Bruno, that's what I get for not listening closely enough. What you actually caught is the complete LACK of one of the synth tracks firing off in the entire snippet. Which is something I was going to mention later: good LORD did I ever learn that Cakewalk wasn't the only DAW where MIDI/virtual instrument tracks stop making sound for no apparent reason. At one point or other, in each of the 4 DAW's I was playing with, I wound up having to do the dance of deleting an instrument track and its MIDI data and creating it afresh before the track would start making sound again. Stand by for revision 2....

-

4 DAW's, 4 renders, 4 results

Starship Krupa replied to Starship Krupa's topic in Cakewalk by BandLab

Okay, here's the moment we've been anticipating. I finally was able to get what I think are similar results from 4 different DAW's. What prompted me to want to try this was that I was trying to set up some test datasets for the DAW's I use, and happened to notice that even though the test projects were both playing back the same arps on the same synths, I could hear a difference between the two DAW's. So it got me thinking about why that might be, and how to figure out why, and that led to wondering how I might go about creating actual music files that would be the most suitable for "listening tests." Subjective, of course, using music and ears rather than test tones and impulses. That's not how to proceed were I trying to prove something to someone else, but I'm not. It is trying to solve a problem, but approaching it from the other side: I started with the observation: I heard a difference between two different DAW's that were playing back very similar material at what their controls said were very similar levels. As discussed earlier, although I don't believe that it's "impossible" for the VSTi-hosting/mixing/playback subsystems of two different DAW's to sound perceptibly different (despite whatever proof was presented on a forum decades ago), I do think it would be unusual to hear as much of a difference as I did. What happens if I render the projects out and listen, can I still hear the difference? Yes, as it turns out. Hmm. What if we try it on a couple more DAW's? One still sounds better than the other 3, which sound pretty similar. Hmm. Looks like we got us an outlier. Various iterations of rendering and examining the peaks and valleys in Audacity revealed that there were some big variances between timing of peaks and valleys due to either randomization in the arpeggiators or LFO's not synched to BPM. Or whatever. 
The hardest part was most definitely finding arpeggiated synth patches that were complex enough not to drive me nuts having to listen to them, yet didn't have any non-tempo sync'd motion, or at least as little as possible. Biggest chore after that was figuring out how to do things like create instrument tracks, open piano roll views, set note velocities, etc. in the two DAW's I was less familiar with. Once I got all the dynamics sorted, the statistics generated by Sound Forge fell better in line. But enough text for now, here are 4 renders from 4 DAW's, as close as I could make them without cheating any settings this way or that. Analyse them and, more importantly, listen to them, see what you think. I haven't subjected them to critical, close up listening yet, so I don't know how that shook out. The only piece of information I'll withhold for now is which rendered file is from which DAW so that people can try it "blind." If you think you hear any differences, it doesn't mean anything. Any audible differences are 100% the result of errors in my methodology, and besides, audible differences can't be determined by people just listening to stuff anyway. The only time you should trust your ears is when you are mixing, and when you are doing that, you should trust only your ears. The Thwarps files, beta 3. P.S. One of the audio files is a couple of seconds shorter than the others because I never did figure out how to render a selected length in that particular DAW.
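For anyone who wants to compare level statistics across the renders without Sound Forge, here is a minimal sketch of the two basic numbers: peak and RMS level in dB relative to a full-scale value of 1.0. It assumes the audio has already been decoded into a list of floating-point samples; decoding the FLAC files is left out. The function names and the sine-wave test signal are made up for illustration, and this is not what Sound Forge or Audacity compute internally.

```python
import math

def peak_dbfs(samples):
    """Peak level in dB relative to full scale (1.0)."""
    peak = max(abs(s) for s in samples)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")

def rms_dbfs(samples):
    """RMS level in dB relative to full scale (1.0)."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")

def apply_gain_db(samples, gain_db):
    """Scale samples by a gain given in dB (for example, a fader offset)."""
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]

if __name__ == "__main__":
    # Stand-in signal: ten cycles of a full-scale sine wave.
    sine = [math.sin(2 * math.pi * k / 100) for k in range(1000)]
    quieter = apply_gain_db(sine, -3.0)
    print(round(peak_dbfs(sine), 2), round(rms_dbfs(sine), 2))
    print(round(peak_dbfs(quieter), 2), round(rms_dbfs(quieter), 2))
```

A stray gain offset of a few dB in one project shows up as the same shift in both numbers, so that is a quick check to run before any listening comparison.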