Everything posted by mettelus

-

Word-of-mouth advertising is one of the most effective, but also one that money cannot buy. When someone (or worse, multiple persons) has their time - a commodity that can never be recovered - wasted, that word-of-mouth is nothing but harmful. There are some that only need to experience this once, and they are done for good. I cannot even fathom the embarrassment of this happening in front of clients, let alone what they would say to others after the fact.

-

Most hardware (tuners, nut, bridge/tremolo) will mirror over from a wood design, but as mentioned above, I would put some forethought into the electronics. I would suspect the sustain on that would be well above average and also brighter due to harmonics. With one pickup, a Seymour Duncan Triple Shot will allow you to series/parallel/single coil each side without any guitar modification (switches are on the ring). With the sustain possibilities, building in a sustainer (where a neck pup would be) is another option, but that requires a power source and possible modification, so might be on the overzealous end. The Triple Shot also has the advantage you can swap the entire assembly out for another pup, but leave enough wire to work with inside the pup cavity. If you really want to get carried away, you can use contacts in the cavity and magnets on the guitar/steel spacer on the ring to pop the whole thing in and out "at will."

-

Another thing to check is Windows settings that may have been reset to default due to Windows Updates. In your situation I would check that USB connections are never allowed to go idle.

-

There is no more embarrassing black eye than the one you give to yourself. And this after all the harping to sell Sonar regarding "shouldn't your work be important enough to pay for?" The free tier wasn't even on the radar then.

-

FWIW, I just did final assembly on the IYV guitar I got yesterday and the G tuner was noticeably looser than the rest (but still didn't unwind itself). To my surprise, tightening that screw also turned the peg with the string on and in tune! I had to hold the peg to tighten it, so a better test might be to see how much force you need to apply to the peg to tighten a tuner (they have a 16:1 torque advantage)... they shouldn't be able to "just move" and should have some resistance to them. If they are lubricated internally (probably why a #1 screw can turn it), that screw may be the only thing applying any real resistance. If a tuner is truly unwinding, the peg would have to rotate to do so (is a worm gear internally), but even at a 16:1 ratio it may not be noticeable visually.

-

The sick irony about Dialogue Match is that it is/was AAX only to begin with! There was never a VST version released, and apparently never will be. This announcement won't affect folks without PTs. More and more of these tools are being included with video applications directly (not as an add-on), so the "need" for 3rd party tools is slipping away.

-

With multiple instances that are not easy to find, an old trick to remove Region FX from a project was to save as a bun file, then unpack it. Bun files do not store Region FX, so it will strip them all that way. If you try/do this, just be sure to unpack the bun to a NEW folder so the original project is not overwritten (or back the original project up before doing this).
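For the "back the original project up" step, here is a minimal sketch of one way to copy a project folder aside before unpacking a bun. The function name and the folder names in the usage note are hypothetical, and this only copies files on disk; it does not touch Cakewalk or the bun file itself.

```python
import shutil
import time
from pathlib import Path


def backup_project(project_dir: str, backup_root: str) -> Path:
    """Copy a whole project folder to a new timestamped folder and return its path."""
    source = Path(project_dir)
    if not source.is_dir():
        raise FileNotFoundError(f"Project folder not found: {source}")
    stamp = time.strftime("%Y%m%d-%H%M%S")
    destination = Path(backup_root) / f"{source.name}-backup-{stamp}"
    # copytree refuses to write into an existing folder, so an earlier
    # backup is never silently overwritten.
    shutil.copytree(source, destination)
    return destination
```

Calling something like `backup_project(r"D:\Projects\MySong", r"D:\Backups")` (both paths made up for illustration) would leave the original untouched and give you a dated copy to fall back on if the unpack goes wrong.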

-

Ouch, yeah, Amazon's return queue has a question if "the package has been opened," so this makes me wonder if Amazon actually opens the package when received back to check contents before inventory/resale if the customer says "no." Let alone tracking Serial Numbers on electronics (not sure if they even do this). There have been a slew of "previously used items" reported where people need something for a one-time job and then return it (Home Depot/Lowes have similar issues in this regard)... next person gets it and reports it has already been opened/partially used. I have seen this with automotive parts (Parts Geek in particular), where I received a damaged part that was resold just to see if someone would not return it and eat the costs. Worst one was an A/C condenser (is like a radiator and needs to be sealed or the desiccant will be consumed in a few hours in many cases). Not only were the seals missing, but it had been stepped on so had a nice arc in it (making it impossible to install anyway)... to add insult to injury, I peeled off some tape and there were FOUR previous return stickers on that box!! Companies reselling parts that were returned as "damaged" is just way over the top to me... they are "supposed" to be shipped back to the manufacturer, but seems that is not happening.

-

[SOLVED] Melodyne won't show notes or sound

mettelus replied to Murray Webster's topic in Cakewalk Sonar

Which version of Melodyne are you running (only Melodyne 5 works for Win10/11)? There have been a few posts of such (this is one of them), in addition to Noel posting the options that should be enabled for ARA2 a while ago. Are you working with multiple clips in Melodyne? Is it a case where the blobs are actually there but not in the viewable window (Melodyne handshakes with the DAW pretty well, so is possible the Now Time is where no notes are when shifting around in the DAW). More information on your situation would help us understand what you are seeing.

-

Hey Brian, very nice piece and great to see you poke your head back in! When I saw this comment, I bet there would be a lot of interest for folks here on how you went about tracking in a car (especially for vocals). It is a common talking point for people struggling with recording setups, so actually tracking in a car would be a topic that would help others see what "can" be done (if you really want to).

-

+1, "better" is always a subjective term. Also bear in mind that you can use FX both before and after an amp sim rather than just relying on its internal FX. If the internal FX are not up to what you want, there is nothing stopping you from disabling them and using others in an FX chain after the amp sim (IR loaders, EQs, reverbs, etc.). Even prior to the amp sim, you can enhance/trim a signal before the amp sim sees it (this alone sometimes adds more tonal control than the amp sim can do internally).

-

I looked PRS Santana SE up and that seems to have a tremolo (not sure if they all do?). I literally have never had to change tuners out on any guitar, so I would focus more on the tremolo and nut as mentioned above. Graphite nuts are "self-lubricating" but for others you can use a pencil in the groove to get graphite on the contact surfaces. Even with bending, if the bridge can move (tremolo) you can start pulling wire over the nut that won't go back due to friction. The number of springs on the tremolo will also make them more rigid, but if this just started happening, that wouldn't be the cause. On string changes, I actually tune each string two half-steps (full bend) high at first (one at a time), then back them off and tune them properly. The tuning shift from new strings isn't that they actually stretch (a lot of places say this), but that the wraps on the pegs need to "fully take." Tuning them high at the peg takes fewer passes (1-2) for that wrap to take than doing full bends on each string (3-5).

-

Is it ever a good idea to record the vocals first?

mettelus replied to Michael Hopcroft's topic in Production Techniques

There is nothing wrong with recording a vocal/melody first, since that will give overall key and feel for the piece, and can often be the core/backbone of the final piece. That said (HUGE caution here), for the very same reason that Mark mentioned above, never (this is pretty much always a "never") try to "shoe horn" a vocal like this into a final work. Better to think of them as a "demo track." As a piece fleshes out you will tinker/revise/adapt that initial thought into its final product (as with all instruments involved). There is zero harm in using that initial vocal as a creative backbone, but realize there will come a time (often several times) you will need to remove the original from the project and record a better version. That same issue exists with folks on other instruments... a "first pass"/improv/whatever isn't the final version. Repetition leads to refinement, so brace yourself for the moment you highlight that original clip and hit the "delete" key and replace it with something better. A lot of "one hit wonders" fall into this category... they literally played that hit hundreds of times before the final was released; but when asked to repeat that, the "refinement" stage goes away due to time constraints, and the results reflect that.

-

A simple screwdriver set with a 10mm socket and #1 (CR-V PH1) screwdriver bit ($10-15 typically) will fit the bill for the hardware if you do not already have those. You want both the socket and screwdriver to fit perfectly onto/into fasteners so that corners do not get rounded over on hardware. With the strings off, use the 10mm socket to unscrew the barrel nut on the head stock face of each tuner. Take this and the washer off the face of each tuner, being careful not to apply undue pressure and avoid scratching any hardware/finish. This barrel nut does the grunt work of the tuner, resisting the tension of the strings against the hole. Flip the guitar over and carefully remove each #1 screw from each tuner. These simply hold the tuner in place and keep them from rotating when loosening/tightening the barrel nut. Installation is the reverse process, screws in the back, then washers/barrel nuts on the front. Do not apply undue force to either, "finger tight" once they stop turning should be enough. That said, before you replace anything.... most tuners also have a screw (also a #1) on the knob peg. This provides resistance to the tuner to make it harder to wind (and keep it from unwinding on its own). I would recommend starting with those; tightening them a 1/4 turn (can leave strings on while doing) will add resistance to the winder and prevent them from de-tuning from string tension. Depending how loose those screws are (they could be really loose if the guitar noticeably de-tunes itself), you may also have more room to play with, but if they are bottoming out, do not over tighten them. Let the resistance on the tuner as you wind/unwind tension be your guide (rather than torque on that screw). I added the pic from Sweetwater and put a red square around that screw for reference.

-

Is interesting that you post this, since the FTC just recently filed a lawsuit against Live Nation and Ticketmaster for the exact same thing. Not only was the "ticket limit" per patron bogus, but spambots were scarfing up all the tickets to resell at a higher price. Here is a link to one of those reports if you hadn't seen it already (or can just search for one).

-

Does Sonar’s installation remove the TTS-1?

mettelus replied to Bass Guitar's topic in Cakewalk Sonar

Registering a dll with Windows (what that command does) and activating/registering a plugin with a vendor are two different things, so unless a VST is unlocked (no registration/activation required), that won't work. With BREVERB specifically, Overloud released an unlocked version for Cakewalk users during the great Gibson debacle. The issue with that one specifically was that every Command Center update overwrote the registration (reverting it to the Cakewalk-limited version), so if you wanted to continue using the Overloud released (unlocked) version, it had to be re-installed after every Cakewalk update (essentially, the latest install is what was "usable"). Is that the version of BREVERB you are referring to?

-

Are there any reverbs/delays (time-based FX) active on the track in the project? The difference in length could be a reverb tail "playing through", as it were. Another thing to check would be an actual project export to desktop (rather than drag/drop) and then bring that file back in to compare versus the original.
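If you want a number for the length difference rather than eyeballing it, a rough sketch like the one below can compare the two files outside the DAW. It assumes both are plain PCM WAV files (Python's `wave` module may reject 32-bit float exports), and the function names are just illustrative.

```python
import wave


def wav_duration_seconds(path: str) -> float:
    """Return the length of an uncompressed PCM WAV file in seconds."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getnframes() / wav_file.getframerate()


def length_difference_seconds(original: str, exported: str) -> float:
    """Positive result means the exported file is longer than the original."""
    return wav_duration_seconds(exported) - wav_duration_seconds(original)
```

A difference of a second or two on the exported file would be consistent with a reverb/delay tail being rendered; a difference of zero points elsewhere.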

-

This bump is a good opportunity to remind folks that if you do not already have this, download it (even if you don't intend to use it). ESPECIALLY if you bought it; the unlocked version (in the OP) is the only one you can install going forward since the activation servers are offline now.

-

+1. 20/20 did a special episode honoring him the other night and one of the first things they said was (paraphrased), "It seems that 'iconic' gets over-used, but in this case it is true." The interviews with others, his perception and reasoning for the Sundance Festival, and the profound impact that has had on others was phenomenal to watch all unto itself. I learned a lot from that episode even though I thought I already knew most of it. A weird "holy crap" moment for me was that my high school class was forced to read The Great Gatsby (not the best method to get students to appreciate literature); but when all was said and done, we watched Robert Redford's performance of it (night and day contrast for me as a kid). When 20/20 got to that I was thinking, "How on earth did I forget about that movie????"

-

Does Sonar’s installation remove the TTS-1?

mettelus replied to Bass Guitar's topic in Cakewalk Sonar

+1, an install shouldn't remove anything, but I have had TTS-1 unregister itself often enough (due to PC maintenance) that I keep a txt file on my desktop to re-register it on the fly (needs to be entered from a command prompt):

regsvr32 "c:\program files\cakewalk\shared dxi\tts-1\tts-1.dll"

-
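For the TTS-1 re-registration command quoted in the post above, the same step could be wrapped in a small script instead of a txt file. This is only a sketch: the DLL path is the one from the post and may differ on your machine, the function names are made up, and `regsvr32` will typically need to be run from an elevated (administrator) session.

```python
import subprocess
import sys

# Path quoted in the post; adjust if the DLL lives elsewhere on your machine.
TTS1_DLL = r"c:\program files\cakewalk\shared dxi\tts-1\tts-1.dll"


def build_register_command(dll_path: str = TTS1_DLL) -> list[str]:
    """Build the regsvr32 argument list; list form needs no manual quoting."""
    return ["regsvr32", dll_path]


def register_dll(dll_path: str = TTS1_DLL) -> int:
    """Run regsvr32 and return its exit code (0 means success). Windows only."""
    if sys.platform != "win32":
        raise RuntimeError("regsvr32 is only available on Windows")
    return subprocess.run(build_register_command(dll_path)).returncode
```

Calling `register_dll()` does the same thing as pasting the command into a command prompt. As noted earlier in that thread, this only registers the DLL with Windows and has nothing to do with vendor activation.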

Thanks for posting feedback. Over the years I have come to value feedback from users on this forum significantly higher than other sources.... not only do I trust them (far) more, but you can also interact with them (and get a response) for follow on questions. I have always appreciated that from this community, but it doesn't get stated outright often.

-

LOL... this one came up after playing that and is even funnier... turns out the camera man on skates in the first video plays sax (sax players don't wear makeup, I guess)!

-

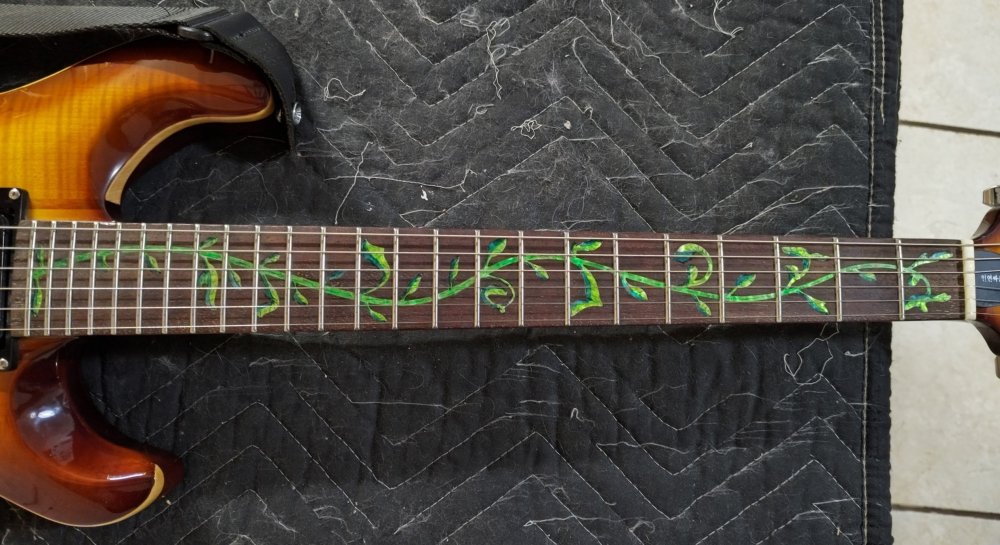

I had to dredge this post up because I couldn't remember when exactly I bought this guitar! I have had some major projects going on, so it has primarily been sitting in its box and only played once or twice a week, but was still on the "to do" list for final setup. The action on the upper frets was still higher than I prefer, but the neck profile has grown on me from what use I have done. I hadn't glued the nut back in, so loosened the strings to check the neck... straight as an arrow (zero relief)... I had a lot of height still on the bridge (can see in the above picture) so set the saddles visually to the fret radius after bottoming out the saddles for the E strings (lowest they would go). Updated: I actually went the "proper path" with the final setup (so replacing what was originally here). The high E was still 0.008" high and the low E was still 0.006" high at the first fret. I had done this because I was worried about the tremolo being bottomed out, but decided to set it up like it should be. I sanded the bottom edge of the nut to give me 0.020" clearance on the low E and 0.012" clearance on the high E at the first fret (like I normally would), then tested the tremolo and no issues. She is now fully set up and ready to go. This model (IP-300-TSB) has already gone back up in price (is $250 now), and tariffs might tear into it even more in the coming months; but for the $216 I paid for it, it may retain the "best bang for the buck" award forever. Again, the frets being properly done when it was made (let alone the wood used) made this guitar stand out as soon as I inspected it. I am going to hold off on any electronic mods until I get a chance to fully test this for "what it is" first. It is a challenge to disengage "tinker mode" for me, but the reality is I often go into extreme overkill with things too!
******** Quick edit: Since the guitar is "physically" done, I happened to think of the vine and had a whole set of permanent markers I never used (and forgot why I even bought them!). I went with a three-tone green, and the yellowest of them is actually luminescent in the right light. I wasn't sure how much time I had with the amount of ink in the pens, so went for speed coloring it and didn't even remove the strings (just pushed them out of the way). In case anyone is interested, light coats always go first, then darker colors. After the fact, techniques like this can be lightened/blended with either fine sandpaper (600+, wet sanding preferred when possible) or even Brasso. I just wanted to get an initial coat of color on the vine and can touch it up during a string change at some point. The below pic is at night under incandescent lighting, so is not the best to show off the effect, but it gives an idea of what can be done with it.

-

That is definitely something to report to Cakewalk support, and it looks more like a font issue when I see that. Years ago I had Staff View go kerflooey and the underlying reason was the font that Staff View was dependent on for notation was missing from my machine. The proper font got posted (on the old forums) and that fixed it. If a font is missing from a machine, Windows (or a browser) will substitute its "best guess" as a replacement, but if that is totally off the mark, you will see things like what your screen capture is showing (in my case, Staff View was populated with Wingdings!). Only someone who can look at the installation package (bakers) would be able to see those details, so that is something to report back to Cakewalk directly.