Posts: 7,019 | Days Won: 38

Posts posted by msmcleod


-

If you want to keep costs down, the Shure SM58 sounds very similar to the SM7 / SM7b, albeit lacking some of the detail in the high end.

You could compensate with EQ, or a better solution might be to blend it with a condenser mic.

-


3 hours ago, azslow3 said:

I don't think MIDI IO speedup is going to bring any improvement for the topic in this particular case.

That's good to hear

3 hours ago, azslow3 said:

...MIDI messages in question are a reaction to parameter changes in Cakewalk. So something has to change within 10ms and AZ Controller has to find that change. In the Cakewalk CS API that is always done by routine scanning, which by itself is going to take some time (primarily in Cakewalk).

I don't think finding the change should take too long - assuming you're not querying too many parameters and the COM overhead isn't too high (it shouldn't be, as by that point you've already got the interface and it's not inter-process). Cakewalk already knows the state, and all you're doing is a parameter lookup on that state. There are no messages involved, just function calls and a big switch statement.
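
To illustrate why the scan itself should be cheap, here is a minimal sketch of a poll-and-compare loop, assuming the surface keeps a cache of the last value it reported for each parameter. `read_param` is a hypothetical callback standing in for a parameter lookup through the control surface API; it is not a real Cakewalk function. The cost is one lookup per queried parameter per scan, which is why the number of parameters matters more than any single lookup.

```python
from typing import Callable, Dict, Iterable, List, Tuple


def scan_for_changes(
    param_ids: Iterable[int],
    read_param: Callable[[int], float],
    cache: Dict[int, float],
    tolerance: float = 0.0,
) -> List[Tuple[int, float]]:
    """Return (param_id, value) for every parameter that changed since the last scan.

    read_param is a hypothetical stand-in for a lookup on the host's state.
    cache holds the last value reported for each parameter and is updated in place.
    """
    changed: List[Tuple[int, float]] = []
    for pid in param_ids:
        value = read_param(pid)  # one lookup per parameter per scan
        previous = cache.get(pid)
        if previous is None or abs(value - previous) > tolerance:
            cache[pid] = value
            changed.append((pid, value))
    return changed
```

Only the returned changes would need to be turned into outgoing MIDI, so an idle project produces no traffic at all.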

3 hours ago, azslow3 said:

BTW I have already proposed on my forum that smooth changes in parameters should be inter/extrapolated on the hardware side (unlike in VST3, there is no ramp info possible in CS API, so I understand it is not easy to decide how to do this).

This was something I was going to suggest in my previous post. I built a couple of hardware projects that both eventually "failed" because they relied on finding a sensible balance between the refresh rate and what the hardware could realistically handle. In my case, my displays were I2C based and the I2C bus couldn't cope with the throughput of updates (I was also multiplexing on the I2C bus, which added further complications at higher rates). However, a lower refresh rate meant parameter changes weren't smooth enough. It's a pity, as it worked fine with a small number of knobs/displays but just didn't scale up. In the end, I gave up and bought a Mackie C4.

My suggestion for the OP, however, would be to interpolate the values in hardware. If continuous values are needed, it might need a phase-locked loop driven by the lower refresh rate; this could be implemented in software on the hardware's microcontroller.
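
As a rough illustration of the interpolation idea, here is a sketch that uses a simple linear ramp rather than a PLL: each time a new value arrives from the host, the hardware loop ramps from its current output to the new target over one expected update interval. It assumes updates arrive at a roughly constant spacing (25ms in the example) and that the microcontroller loop ticks much faster (1ms in the example). The class and its names are hypothetical, and on a real microcontroller this would be written in C or C++.

```python
class RampInterpolator:
    """Linearly ramp toward each new target over one assumed update interval."""

    def __init__(self, update_interval_ms: float, tick_ms: float, initial: float = 0.0):
        # Number of hardware ticks a ramp should take (at least one).
        self.steps = max(1, round(update_interval_ms / tick_ms))
        self.value = initial
        self.target = initial
        self.increment = 0.0
        self.remaining = 0

    def on_update(self, new_target: float) -> None:
        # Called when a new value arrives from the host (e.g. a MIDI CC).
        self.target = new_target
        self.increment = (new_target - self.value) / self.steps
        self.remaining = self.steps

    def tick(self) -> float:
        # Called every tick_ms by the hardware loop; returns the value to output.
        if self.remaining > 0:
            self.remaining -= 1
            if self.remaining == 0:
                self.value = self.target  # land exactly on the target, avoiding float drift
            else:
                self.value += self.increment
        return self.value
```

The trade-off is roughly one update interval of added latency in exchange for smooth output; a PLL-style approach could additionally track the actual spacing of updates instead of assuming it.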

-

Check out my answer here:

-

You might find these useful:

Setting up ACT for plugins: https://youtu.be/UK24ySULnf0

Setting up ACT for surfaces: https://youtu.be/ObsW0t5FnzM

-

GPUs are not general-purpose devices, unlike CPUs.

They're very good at specific operations - none of which are particularly useful for audio processing.

They can be used for machine learning / bitcoin mining, but audio/DSP... not so much (if at all).

-

3 minutes ago, Notes_Norton said:

I'm getting too many "Videos Unavailable" from Wibbles and others. I'm missing some good music. If you also post the name I can google the ones that don't work here.

I started getting this a month or so ago, mainly in Chromium-based browsers. The issue is how the browser deals with mixed content (by default, it now blocks it).

The workaround is to enable mixed content for discuss.cakewalk.com:

https://www.howtogeek.com/443032/what-is-mixed-content-and-why-is-chrome-blocking-it/

-


I have to agree with @scook here - try to avoid 32-bit plugins if you can.

That said, if you must use 32-bit plugins, consider using JBridge, as it has a few configuration options to get around the fact they were all written for a much older OS.

FWIW I've had no issues with the DSK plugins in the past when they were configured to use the JBridge wrapper.

As it stands, the only 32-bit plugins I've got configured to use BitBridge are the legacy Cakewalk ones - all others are using JBridge.

-

2 hours ago, norfolkmastering said:

Hi Mark

Thanks for checking this out.

I am using AZ Controller to configure and output the MIDI messages. Does that count as 'outside of Cakewalk'?

If 'yes' then please let me know what would be required to get the 10ms refresh operational.

Best regards

Robert

AFAIK, AZController uses Cakewalk to send its MIDI messages, as it'll be using the control surface API. So whilst the refresh calls can be made more frequent, the rate at which AZController sends MIDI messages can't be increased as long as it's sending them via Cakewalk.

What I think is required is for AZController to call midiOutShortMsg and midiOutLongMsg directly. This would require @azslow3 to make some modifications to AZController. You'd also need to ensure that the MIDI out device was NOT enabled in Cakewalk, so that AZController had full control over it. In other words, in Cakewalk you'd configure your MIDI In device only, and the MIDI Out device would have to be set within AZController itself.
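
For reference, `midiOutShortMsg` (winmm) takes the whole message packed into a single DWORD: the status byte in the low-order byte, followed by the first and second data bytes. The sketch below only builds that value; the helper names `pack_short_msg` and `control_change` are hypothetical, and the actual call (with a handle obtained from `midiOutOpen`) is left out so the example runs anywhere.

```python
def pack_short_msg(status: int, data1: int = 0, data2: int = 0) -> int:
    """Pack a MIDI short message into the DWORD layout midiOutShortMsg expects.

    Byte 0 (lowest): status, byte 1: first data byte, byte 2: second data byte.
    """
    if not 0x80 <= status <= 0xFF:
        raise ValueError("status byte must have its high bit set")
    if not (0 <= data1 <= 0x7F and 0 <= data2 <= 0x7F):
        raise ValueError("data bytes must be 7-bit")
    return status | (data1 << 8) | (data2 << 16)


def control_change(channel: int, controller: int, value: int) -> int:
    """Build a Control Change (0xBn) message for MIDI channel 1-16."""
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1-16")
    return pack_short_msg(0xB0 | (channel - 1), controller, value)
```

For example, `control_change(1, 7, 100)` (channel volume on channel 1) packs to `0x6407B0`, which would be passed as the `dwMsg` argument.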

It's also worth noting that a refresh interval of 10ms had an adverse effect on Cakewalk's performance.

I experienced a fair number of dropouts unless all other applications were closed; just having a browser open would cause dropouts in Cakewalk. I suspect this would improve if AZController had full control over sending MIDI messages, but given that all control surface functions are performed in the main thread, I'm not sure the improvement would be significant unless AZController also used a separate thread for sending MIDI messages. Any calls to Cakewalk must still be made in the main thread though, and doing this every 10ms will adversely affect performance.
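
One way to structure the separate-thread idea is a queue drained by a single worker: the main thread still makes all the calls into Cakewalk, but only enqueues the resulting MIDI messages, and the worker does the potentially slow output. This is a minimal sketch; `send_fn` is a hypothetical callback (for example a wrapper around `midiOutShortMsg`), and a real surface DLL would use native threads rather than Python.

```python
import queue
import threading
from typing import Callable, Optional


class MidiSenderThread:
    """Send MIDI messages on a worker thread so the posting thread never waits on output."""

    def __init__(self, send_fn: Callable[[int], None]):
        self._send_fn = send_fn  # hypothetical output callback
        self._queue: "queue.Queue[Optional[int]]" = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def post(self, msg: int) -> None:
        # Cheap for the caller (e.g. the main thread): just enqueue.
        self._queue.put(msg)

    def stop(self) -> None:
        # None is a sentinel: the worker exits after sending everything posted before it.
        self._queue.put(None)
        self._thread.join()

    def _run(self) -> None:
        while True:
            msg = self._queue.get()
            if msg is None:
                break
            self._send_fn(msg)
```

Because a single worker reads a FIFO queue, messages go out in the order they were posted.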

-

2 hours ago, shane said:

This happens 100% of the time on my crashes, which are about once a day?

I always assumed it was a problem letting go of my MIDIMAN MIDISPORT 8x8 Drivers, and the only solution is to reboot.

I use the MidiSport 8x8 (the old blue & yellow one), and I don't have issues with it causing CbB to crash or not close properly.

-

8 hours ago, S K said:

Yeah I just don't get, tbh, how/why people use mixers that aren't motorised (or touch sensitive - although I can understand this one more). Perhaps for the transport bit, and for controlling various VST parameters. But if mixing is your reason then really they need to be motorised as far as I know.

I've got an MCU + XT + C4 in my studio, but in my spare room I use the Korg nanoKONTROL Studio which doesn't have motorised faders.

For the most part, I don't find the lack of motorised faders much of an issue. Sure, it's a pain setting them up to initial values, but:

- I try to keep the fader at zero as much as possible, using offset mode to get it to the right place.
- Its primary function for me is to record bus volume automation by riding the bus faders during mixdown. I find it very useful to be able to control 8 buses at once.
- For tweaking tracks, it's equally useful, but I use it less for that.

-

Definitely MCU - why? HUI is dead, and MCU replaced it... and as far as Cakewalk is concerned, MCU is supported whereas HUI is only very partially supported.

HUI was originally conceived by DigiDesign & Mackie for ProTools (before AVID took over).

A few years later, Mackie did a similar thing with eMagic for Logic and came up with the Logic Control.

Presumably realising they couldn't keep coming up with completely new surfaces for each DAW, Mackie discontinued the HUI and turned the eMagic Logic Control into the Mackie Control Universal (same hardware, different ROM).

The Mackie Control Universal works in three modes:

- HUI (for ProTools)
- Logic
- MCU (for everything else)

They also brought out some extenders for the MCU, which speak the same MCU language: the XT (an extra 8 faders) and the C4 (lots of knobs for controlling plugins).

These were given a new look in the MCU Pro series, but apart from some minor differences (e.g. USB interface & better faders), functionally they're exactly the same.

The MCU Pro and the MCU Pro Extender are still available. Unfortunately the C4 Pro isn't (except on eBay), which is a pity really, because the C4 rocks.

-


FWIW, there's a pretty good relationship between most DAW manufacturers.

PreSonus have been great with regard to Cakewalk: the FaderPort range has Cakewalk/SONAR modes, although these piggyback on the existing MackieControl support.

Steinberg & Cakewalk also have a good relationship.

With the AVID product though, it gets tricky. Writing a custom surface DLL from scratch takes a fair amount of time & investment.

The question AVID will be asking is, "How many more $30K surfaces would we sell if we did support Cakewalk?" For Cakewalk it would be, "How many more users would we get if we supported this $30K surface?"

The answer to both is most likely, "...not enough to justify the investment."

The solution might be for the OP to pay a developer to write a custom Control Surface DLL (or configure AZ Controller if this is feasible). However, from experience I can confidently say the majority of time developing a Control Surface DLL is spent in testing... and a developer will need access to one of those $30K control surfaces (and the physical space to set it up somewhere!).

-


It's the stereo mix of all the drum parts, as opposed to the individual outs of each kit part.

-

@norfolkmastering - I had a look at the refresh interval issue. It looks like some of the UI optimisations have had the effect of limiting the refresh interval to a minimum of 25ms. Although the timer controlling the refresh runs in its own thread, the refresh and any MIDI messages the control surface requests to send are synchronised to the main thread.

I did manage to find a way around this to support a 10ms refresh; however, it's worth noting that any MIDI messages sent back out via Cakewalk will still be limited to the 25ms interval.

The only way around this would be for your control surface DLL to deal with the MIDI messages outside of Cakewalk - either that, or not use MIDI at all for controlling the analog side.
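
If the analog side is driven over a classic 5-pin DIN MIDI link (an assumption; USB MIDI isn't bound by the same serial rate), the cable itself also limits how many updates fit into each refresh tick. MIDI 1.0 runs at 31,250 baud with 10 bits on the wire per byte, so a 3-byte Control Change takes just under 1ms. A small calculation, ignoring running status (which would let somewhat more fit):

```python
MIDI_BAUD = 31_250          # MIDI 1.0 DIN serial rate in bits per second
BITS_PER_BYTE_ON_WIRE = 10  # 1 start bit + 8 data bits + 1 stop bit


def message_time_ms(bytes_per_msg: int = 3) -> float:
    """Time to transmit one message of bytes_per_msg bytes, in milliseconds."""
    return bytes_per_msg * BITS_PER_BYTE_ON_WIRE * 1000.0 / MIDI_BAUD


def messages_per_tick(tick_ms: float, bytes_per_msg: int = 3) -> int:
    """Upper bound on whole messages that fit in one refresh tick (no running status)."""
    bytes_per_second = MIDI_BAUD / BITS_PER_BYTE_ON_WIRE  # 3125 bytes/s
    bytes_per_tick = bytes_per_second * tick_ms / 1000.0
    return int(bytes_per_tick // bytes_per_msg)
```

At a 10ms tick that's at most 10 three-byte messages, and at 25ms at most 26, before allowing for anything else on the cable.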

-

It sounds like Spire is generating MIDI events which are being picked up by the subsequent recording.

You've got two options:

1. Disable MIDI output on Spire via the drop-down menu at the top of the synth's dialog; or
2. On the track you're recording on, make sure your MIDI input is set only to your controller keyboard instead of "All Inputs".

-


If you've got audio clips with automation, and you're changing tempo without stretching those clips to match the tempo, then you need to make sure your automation timebase is set at Absolute.
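
To see why the timebase matters, here is a minimal sketch assuming a constant project tempo: an automation node stored in musical time (beats) moves in seconds when the tempo changes, whereas a node stored in absolute time stays put, just like an unstretched audio clip. The `Node` type and `node_time_s` function are hypothetical; Cakewalk's internal representation isn't modelled.

```python
from typing import Literal, Tuple

# (timebase, position): position is in beats for "musical", in seconds for "absolute".
Node = Tuple[Literal["musical", "absolute"], float]


def node_time_s(node: Node, bpm: float) -> float:
    """Position of an automation node in seconds at a constant tempo."""
    timebase, position = node
    if timebase == "musical":
        return position * 60.0 / bpm  # stored in beats: moves when the tempo changes
    return position                   # stored in seconds: fixed in time
```

At 120 BPM, beat 8 falls at 4.0s. Change the tempo to 100 BPM and the musical node moves to 4.8s, while the unstretched audio (and an absolute node at 4.0s) stays where it was.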


-


2 minutes ago, abacab said:

Those 2 do sound strange!

For PAD Man at the corner, LFO1 in the Mod matrix on Layer 2 is clearly bonkers.

For LED Birdland, it appears that the same thing is occurring...

On the flip side, I have listened to dozens and dozens of other patches that sound just fine! Maybe a support ticket is justified...

Yeah, I think I'll raise one.

Thanks for checking it out though - it's good to know I'm not the only one with the issue.

-


4 hours ago, Mark Nicholson said:

Thank you Mark!

I have a related question. I recorded some of my voice and used MAnalyzer to look at it. Averaging over ~3 seconds gives me a spectrum like so:

Now, when I start equalizing, what is my goal? Do I want to see a flat spectrum? What would a good spectrum look like?

An analyzer may give you an indication of where frequencies are dominant (or not), but that's really only of use when you know what you're looking for (i.e. you can hear something bad but aren't sure where it is). It can also be useful if two instruments aren't fitting well together, as you can identify where their frequencies overlap.

But in all honesty, visual tools can lead you down a rabbit hole where you're cutting/boosting frequencies for no good reason at all, and in the end it just sounds awful.

My best advice is... use your ears, not your eyes!

-


50 minutes ago, abacab said:

I have the everything bundle. Which presets are you speaking of?

Two jumped out at me right away.

PAD Man at the corner

LED Birdland

-


I upgraded and almost immediately reverted to 2.9.8, as a few of the presets are totally messed up.

-

6 hours ago, Skelm said:

Thanks for this - I am not seeing a code, as the audio engine does not stop, there is just a short interruption in playback. Not sure why it isn't stopping playback as that article implies should be happening.

Cakewalk attempts to recover from dropouts during playback, depending on the DropoutMSec setting in your configuration file. This isn't the case for recording where dropouts aren't tolerated because you don't want glitches in your recording.

Plugin UIs should really be designed to be as lightweight as possible (CPU-wise) rather than focused on maximum eye candy.

-

3 hours ago, Skelm said:

Hi, changing the interface isn't really an option - it is also my mixing desk. Where do I check the dropout code?

https://www.cakewalk.com/Documentation?product=Cakewalk&language=3&help=AudioPerformance.24.html

-

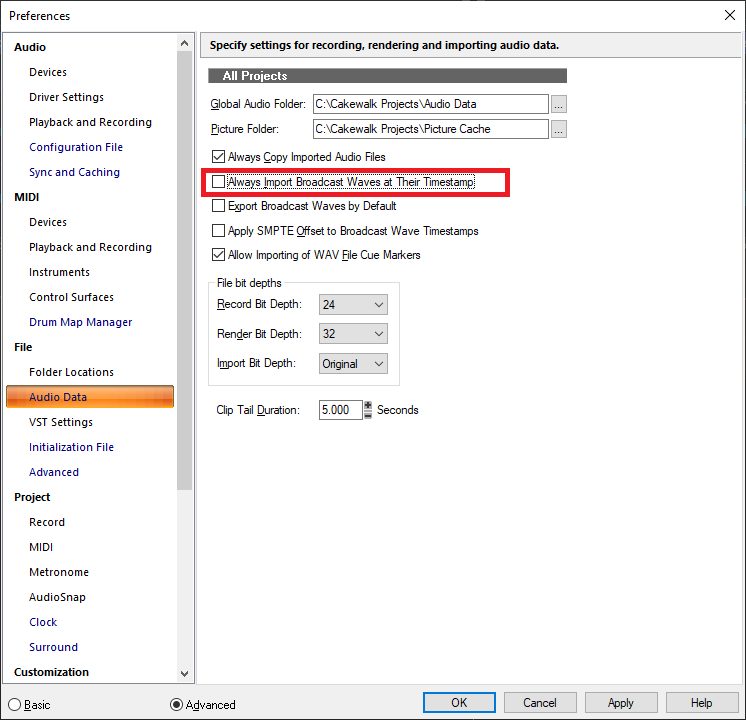

When importing audio, take note of this setting:

If it's checked, the audio will be inserted at the timestamp embedded within the audio. This is useful if you've exported clips from another DAW that all start at different times, as this setting will preserve their start times.

If you want the audio to be inserted at the drop location, leave this option unchecked.

Note that not all wave files have timestamps embedded, but if you drag a wave file into your project and can't see it, it's likely this setting has caused it to be inserted later on in the project.
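
If you want to check whether a file carries a timestamp, one common place for it is the Broadcast Wave (BWF) `bext` chunk, whose TimeReference field is a 64-bit sample count since midnight. Whether this is the exact field Cakewalk reads is an assumption here. The sketch below, with a hypothetical `bwf_start_seconds` function, walks the RIFF chunks of a WAV file held in memory and converts TimeReference to seconds using the sample rate from the `fmt ` chunk.

```python
import struct
from typing import Optional


def bwf_start_seconds(wav: bytes) -> Optional[float]:
    """Return the BWF bext TimeReference in seconds, or None if there isn't one.

    TimeReference is stored as two little-endian 32-bit halves at byte 338
    of the bext chunk body (after Description, Originator, OriginatorReference,
    OriginationDate and OriginationTime).
    """
    if len(wav) < 12 or wav[0:4] != b"RIFF" or wav[8:12] != b"WAVE":
        raise ValueError("not a RIFF/WAVE file")
    sample_rate = None
    time_reference = None
    pos = 12
    while pos + 8 <= len(wav):
        chunk_id = wav[pos:pos + 4]
        (size,) = struct.unpack_from("<I", wav, pos + 4)
        body = pos + 8
        if chunk_id == b"fmt " and size >= 8:
            # nSamplesPerSec follows wFormatTag (2 bytes) and nChannels (2 bytes).
            (sample_rate,) = struct.unpack_from("<I", wav, body + 4)
        elif chunk_id == b"bext" and size >= 346:
            low, high = struct.unpack_from("<II", wav, body + 338)
            time_reference = (high << 32) | low
        pos = body + size + (size & 1)  # RIFF chunks are padded to an even length
    if time_reference is None or not sample_rate:
        return None
    return time_reference / sample_rate
```

A file whose TimeReference is 441000 at 44.1kHz would start 10 seconds in, which is where it would land with the setting checked.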

-


Although the (+/=) key will toggle between the last edit filter and the clips, SHIFT + (+/=) will toggle between the clip gain automation and clips.

SHIFT + (+/=) doesn't alter the "last edit filter", so you can use it to access clip gain automation regardless of what the non-shift version has set as its last filter.

Typically I use (+/=) to toggle between track volume and clips, and SHIFT + (+/=) to toggle between clip gain automation and clips.

-


Kawai K1 and Kawai K4 Instrument Definitions (in Tutorials)

I stopped using my K1 as a controller because of the All Notes Off thing... I used MIDI-OX to filter them out for a while, but I got fed up with patching it up every time I booted up.

Pity really, as it was the only decently priced keyboard with channel aftertouch. I use an EMU X-Board now when I need aftertouch.

If I were still using the K1, nowadays I'd probably spend £15 / $20 on an Arduino + MIDI shield and have it do the filtering for me.
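
The filtering rule itself is simple: All Notes Off is Control Change number 123 (status 0xBn, where n is the channel). Here's a sketch of that rule, assuming the incoming bytes have already been assembled into complete messages with running status expanded; on an Arduino the same check would sit in the loop that forwards serial MIDI from input to output. The function names are hypothetical.

```python
from typing import Iterable, Iterator, Tuple

Message = Tuple[int, ...]  # (status, data1[, data2])

ALL_NOTES_OFF = 123  # Channel Mode message: controller 123, value 0


def is_all_notes_off(msg: Message) -> bool:
    """True for an All Notes Off message on any channel."""
    return len(msg) == 3 and (msg[0] & 0xF0) == 0xB0 and msg[1] == ALL_NOTES_OFF


def filter_all_notes_off(messages: Iterable[Message]) -> Iterator[Message]:
    """Pass every message through except All Notes Off."""
    for msg in messages:
        if not is_all_notes_off(msg):
            yield msg
```

Everything else, including ordinary Note Off and other controllers, passes through unchanged.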