Posts: 2,172

Everything posted by Glenn Stanton

-

coolio idea. what would be nice is if, as part of the plugin scan, it could generate a cached thumbnail (say 512x512 pixels so it's readable even on high-res screens) and store it in a subdirectory of the plugin folder it was found in: C:\Program Files\<my plugin location folder>\thumbnails. then hovering over the plugin name in the plugin browser, or plugin selection list, would (after a second or so) pop up the thumbnail.
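
a minimal sketch of how the scan could decide where each thumbnail goes and when to show it, assuming one PNG per plugin named after the plugin file and a 1-second hover delay. the function names, the PNG naming and the example plugin MyDelay.vst3 are hypothetical; this is not how Cakewalk or Sonar actually works.

```python
from pathlib import PureWindowsPath
from typing import Optional

THUMBNAIL_SIZE = (512, 512)  # pixels, as suggested in the post
HOVER_DELAY_S = 1.0          # hypothetical "a second or so" before the popup


def thumbnail_path(plugin_file: str) -> PureWindowsPath:
    """Hypothetical cache location: a 'thumbnails' subfolder next to the plugin."""
    plugin = PureWindowsPath(plugin_file)
    return plugin.parent / "thumbnails" / (plugin.stem + ".png")


def needs_refresh(plugin_mtime: float, thumb_mtime: Optional[float]) -> bool:
    """During a scan, (re)render if no thumbnail exists or the plugin file is newer."""
    return thumb_mtime is None or plugin_mtime > thumb_mtime


def should_show_popup(hover_seconds: float) -> bool:
    """Show the cached thumbnail only after the pointer has rested long enough."""
    return hover_seconds >= HOVER_DELAY_S
```

for example, thumbnail_path(r"C:\Program Files\VSTPlugins\MyDelay.vst3") points at C:\Program Files\VSTPlugins\thumbnails\MyDelay.png. one caveat: writing under Program Files usually needs elevated rights, so a real implementation might need a per-user cache folder instead.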

-

did you check whether they somehow got added to the "excluded" plugins list?

-

Computer upgrade seems useless

Glenn Stanton replied to Cobus Prinsloo's topic in Cakewalk by BandLab

don't uninstall it; disable it in Device Manager. otherwise it will reinstall itself. Realtek drivers can be like a, erm, certain kind of painful itch that doesn't go away just because the redness disappears. just sayin'... for a friend... ahem.

-

still working for me, even with the .vst3 extension. maybe try a reinstall and make sure you have proper permissions on the plugin folder, and perhaps use "run as administrator" for the install? user-level installs into Program Files folders don't always behave as expected. MS at its best, trying to secure your desktop from you...

-

DaVinci Resolve. free. get it now.

-

just offering ideas. i mainly do automation after the fact and mostly draw in what i want. so if i've got solos, etc., i'm performing them, and then, as part of the mix or sound design, using FX and automation. if i have a live FX track, i can re-amp from the clean raw track. i think you have to stop recording to add a snapshot? so maybe turn on write automation and adjust as you play? dunno.

-

My guitar recording is always early in the mix in ASIO

Glenn Stanton replied to Freshmint Melee's question in Q&A

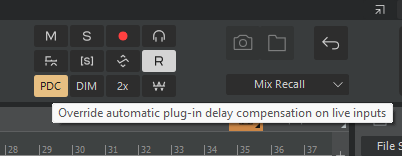

one option, if your interface allows it: use direct monitoring and turn off input echo on the guitar track, so you can listen to the other parts and hear your guitar in real time (presuming the 5.5 ms round trip is still too much). if you're using FX on your guitar track while recording, this can also throw the timing off, depending on the FX and whether you're using PDC (plugin delay compensation, which makes sure all FX etc. play back in time). you can try turning it off by pressing the PDC button.
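
to put the 5.5 ms round trip in perspective, here's a small conversion between milliseconds and samples. it's plain arithmetic (samples = ms × sample rate / 1000); the sample rates below are just common examples, not values read from any particular interface or driver.

```python
def ms_to_samples(ms: float, sample_rate_hz: int) -> float:
    """Latency in milliseconds -> number of samples at the given sample rate."""
    return ms * sample_rate_hz / 1000.0


def samples_to_ms(samples: float, sample_rate_hz: int) -> float:
    """Number of samples -> latency in milliseconds."""
    return samples * 1000.0 / sample_rate_hz
```

at 48 kHz, 5.5 ms works out to 264 samples; at 44.1 kHz it's roughly 243 samples. handy when one setting is shown in ms and another in samples.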

-

Is there any chance that Cakewalk Z3TA+ will be revived?

Glenn Stanton replied to Name's topic in Instruments & Effects

run the standalone as admin, then enter your serial number and activation codes. it should run normally afterwards.

-

my personal mic, an AT3035 (discontinued: https://www.audio-technica.com/en-us/at3035), just works for my voice, as does an SM58. for many other people, i've found my Behringer B-2 Pro (https://www.behringer.com/product.html?modelCode=0504-AAC) is a miracle for vocals. no idea why a $200 mic made by the evil B corp does it, but i've used it live as well, and people singing (meaning people who can sing and thus should be heard in the best possible way) just come across as natural. i have seen negative reviews from some people, and likely they got a lemon, but most reviews rate it as really good.

-

i think one of the advantages of using envelopes vs. snapshots: when you have write automation on, it captures all the changes for that track (and creates a bunch of envelopes to do it), whereas with a snapshot, if i'm not mistaken, you need to select the controls you want to snapshot, and it only creates a single point on an otherwise open envelope. you can always smooth and edit the envelopes later if you need distinct shifts in settings, and remove any extraneous ones as well.
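
a minimal sketch of the difference, assuming an envelope is just a sorted list of (time, value) breakpoints with straight lines between them (a simplification for illustration, not Cakewalk's internal format): written automation captures lots of points, a snapshot drops in one, and a cleanup pass can remove points that add nothing. the function names are hypothetical.

```python
from bisect import insort


def value_at(points, t):
    """Linear interpolation between sorted (time, value) breakpoints."""
    if t <= points[0][0]:
        return points[0][1]
    for (t0, v0), (t1, v1) in zip(points, points[1:]):
        if t <= t1:
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    return points[-1][1]  # past the last breakpoint: hold its value


def add_snapshot(points, t, value):
    """A snapshot adds one breakpoint at the current time, kept in time order."""
    insort(points, (t, value))
    return points


def thin(points, tol=1e-6):
    """Drop breakpoints that sit on the straight line between their neighbours."""
    if len(points) <= 2:
        return list(points)
    kept = [points[0]]
    for i in range(1, len(points) - 1):
        prev, cur, nxt = kept[-1], points[i], points[i + 1]
        if abs(value_at([prev, nxt], cur[0]) - cur[1]) > tol:
            kept.append(cur)
    kept.append(points[-1])
    return kept
```

e.g. a steady fader ramp captured as 17 written points, [(t / 4, 0.5 + t / 40) for t in range(17)], thins down to just its two end points, while a move that actually changes direction keeps its corners.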

-

loose contacts, or solder joints inside that may have deteriorated over time.

-

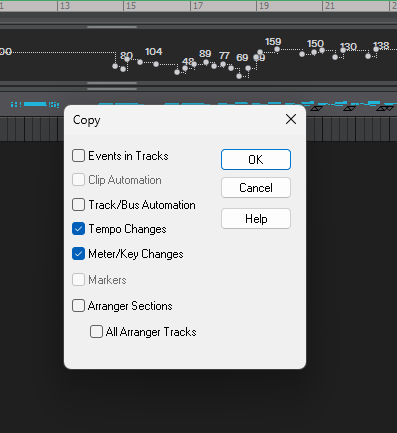

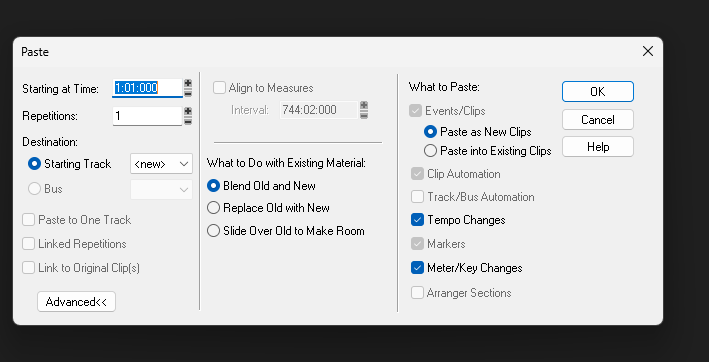

i usually just double-click the MIDI file to open it in Sonar (or CW), select a track, and choose edit -> copy special; then in a new project i insert it via edit -> paste special. i'm sure there are other methods, but i find this fast and reliable.

-

Why this awkward roll out of Cakewalk Sonar ?

Glenn Stanton replied to Mannymac's topic in Cakewalk by BandLab

i dunno, i think killing off any hope at the start extends the misery indefinitely, versus giving any possible glimpses of hope. the grueling, endless darkness, while at first it seems like a respite from the cycling of hope on, hope off, is much better. many corporations are good at this, but then again, corporations are really just collective evil made of persons...

-

generally, automation write is the memory. otherwise you'd want to render each section with the EQ and FX before recording the next segment, or you'd create an envelope for each of the things you want to adjust. the other option is to do the automation after you record the raw track, giving you the ability to tweak and make transitions in a way the more static approach could not. i tend to record clean (one track) and printed FX (one track). almost inevitably i re-use the raw track via an aux track with FX and automation, because i have some idea to shift some aspect that the printed track would not let me do. there is no "snapshot" per se; the envelope contains the "knowledge". if you're doing this as sound design, then it may be a different workflow.

-

Did the Waves Central "Update" break Cakewalk by Bandlab?

Glenn Stanton replied to Kevin White's topic in Cakewalk by BandLab

honestly, i forgot to do all the prep stuff i did for the v12-to-v14 updates and just ran the v15 updates (5 plugins, out of ~70, are still v11 or v14). when it finished, i rebooted (i always do after massive updates on my system, regardless) and then ran the plugin scans in Sonar, Next, Komplete Kontrol, Acoustica, RX, Ozone, Sound Forge, etc. weirdly, not a single one reported a problem with the Waves stuff! LOL. Acoustica flagged the instruments as not being FX (as usual). one note: while loading a Sonar project after the update, i got a toast notification that the settings for H-Delay Stereo might not be set correctly. when i checked, they were set correctly, but i think the new split channel in the Stereo version meant Sonar had some gaps from the previous version when reloading the project settings. i opened a bunch more projects just to check, did not see this warning again, and all settings were as previously set.

-

Did the Waves Central "Update" break Cakewalk by Bandlab?

Glenn Stanton replied to Kevin White's topic in Cakewalk by BandLab

you probably want to run the clear cache function in Waves Central and do a fresh install. generally i haven't had a significant issue with Waves in 5 years or more, and support is usually quick to get back to you.

-

there is the "tools editor" for adding an application to the list of utilities. generally, for myself, i check all the files (noise, clicks, etc.) before adding them, or use my RX etc. plugins inline and then render. i never found the editor tool approach worked well for me.

-

a blues song about how relationships can teeter on the brink over the smallest things, and the effort it takes to repair them. i originally wasn't going to make a new version of this song, but i was doing some experimentation with 6/4 vs 6/8, ended up using the chord progression, and for some reason the song just reassembled itself, vocals as well. comments welcome. it's from my reimagined relics collection of old and new material: https://www.reverbnation.com/fossile/album/312545-relics

BPM 140
Meter 6/4
Key Dm

---------------------------------------------------------------------
Lyrics
---------------------------------------------------------------------

four in the morning
that's my pillow there
fussing and fighting always
then you say you don't care
you tell me if i loved you
then twice the fool am i
i said why?

baby, it'll be alright
baby, it'll be alright
baby, it'll be alright
|it will be all right|

out in the street night
the rain in your hair
fearing that look in your eye
when you say you don't care
you tell me if i loved you
then twice the fool am i
i said why?

baby, it'll be alright
baby, it'll be alright
baby, it'll be alright
|it will be all right|

[solo]

out in the street night
the rain in your hair
fearing that look in your eye
when you say you don't care
you tell me if i loved you
then twice the fool am i
i said why?

baby, it'll be alright
baby, it'll be alright
baby, it'll be alright
|it will be all right|

[break]

baby, it'll be alright
baby, it'll be alright
baby, it'll be alright

-

Is Auto-tune region FX compatible with Cakewalk and Sonar?

Glenn Stanton replied to Carlos Pérez's question in Q&A

a couple of thoughts: you might exclude CW from your A/V scanning and make sure any cloud services aren't conflicting with the app or with plugins that might use, say, OneDrive for caching. having 40 A/V instances running is not a great thing... if Auto-Tune isn't able to use ARA, then Region FX may be limited to the plugin's own handling of the separation files etc., which are used to keep memory and overhead within the DAW under control.

-

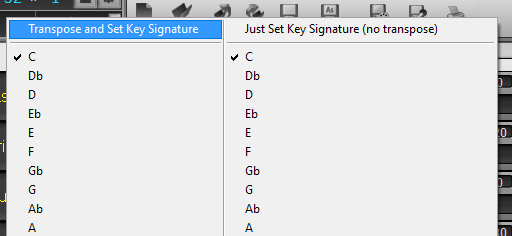

in BIAB there are two distinct options for setting the key: one sets the key and changes the chords etc., and the other simply sets the key and leaves everything else intact. with the latter, it may influence some of a regenerated performance; with the former, it will definitely change things. it's basically been this way forever (measured in BIAB years)...

-

pretty sure CW (and Sonar) don't support anything other than DX (or the equivalent for MIDI plugins), VST and VST3. i seem to remember some threads on supporting CLAP. in my setup, i have some apps that can use CLAP, and of course PT uses AAX, so if a plugin installer has those, i usually install them as well, even if they're not used in CW. the PT AAX plugins go into the stock AAX plugins folder, and the CLAP ones get installed into my C:\Program Files\VSTPlugins, though some default to the Steinberg plugins folder. i haven't seen any LV2 plugins in my circle of plugins, but it does look interesting: https://lv2plug.in/pages/why-lv2.html
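
a rough sketch of sorting a plugin folder by format using the usual Windows file/bundle extensions. treating every .dll as VST2 is an assumption (DX plugins are DLLs too, but they're registered with the system rather than found by extension), and the "CW can scan it" set just mirrors the post's understanding above, not an official list. classify() is a hypothetical helper name.

```python
from pathlib import PureWindowsPath

# Assumed extension -> format mapping (Windows conventions).
FORMAT_BY_EXTENSION = {
    ".dll": "VST2",        # assumption: any DLL in a VST folder is a VST2 plugin
    ".vst3": "VST3",
    ".clap": "CLAP",
    ".aaxplugin": "AAX",   # Pro Tools bundles
    ".lv2": "LV2",         # LV2 bundles are folders ending in .lv2
}

# Per the post: CW/Sonar handle DX, VST and VST3; DX isn't found by file extension.
CW_SCANNABLE = {"VST2", "VST3"}


def classify(path: str):
    """Return (format, whether CW would pick it up in a folder scan)."""
    fmt = FORMAT_BY_EXTENSION.get(PureWindowsPath(path).suffix.lower(), "unknown")
    return fmt, fmt in CW_SCANNABLE
```

so a .clap installed into C:\Program Files\VSTPlugins alongside VST3 files would show up as ("CLAP", False): present on disk, just ignored by CW's scan.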

-

i think she was comparing me, in a relative way, to actual singers who can sing LOL. i think she got the idea for the description from some of the Sesame Street characters...