Everything posted by Mark Morgon-Shaw

-

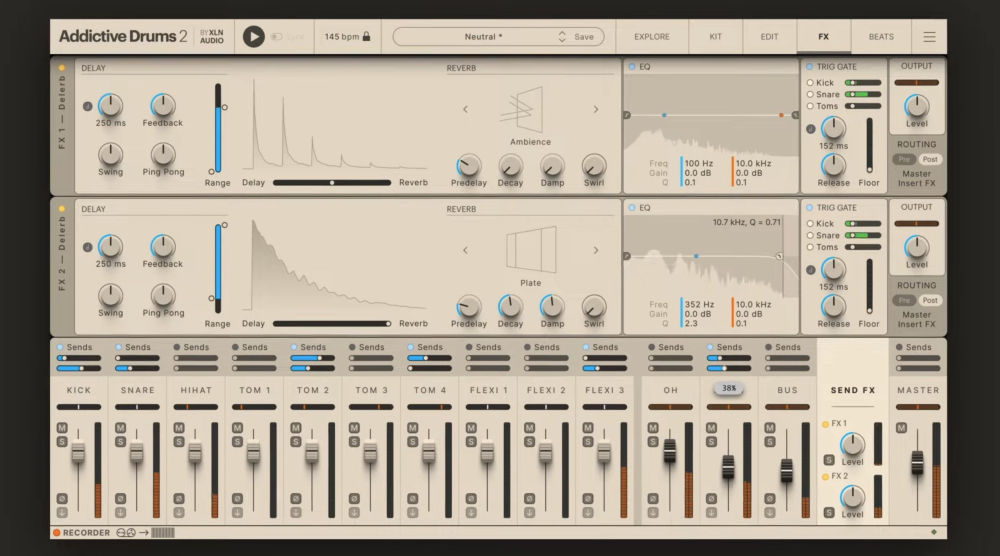

Today I was looking at the new update to Addictive Drums 2, from another company that has moved away from the skeuomorphic GUI design of a decade ago to something flatter and more modern-looking, just as Cakewalk Sonar has done with controversial results. To my eyes, however, the team at XLN have managed this very nicely indeed, giving the interface a fresh, modern look without sacrificing ease of use and visual clarity. Although they've moved away from the 3D look, it still retains some subtle depth in places, which I think is much easier to work with over long periods than a purely 2D approach. This is more like what I wish the Bakers had done with Sonar, and I hope it will improve at some point.

-

Don’t wait for inspiration. Take action instead, and inspiration will often follow. Showing up and opening your DAW every day, regardless of whether you feel inspired, will help. Embrace the process: consistency is key to nurturing creativity.

-

Thanks, Misha. This thread is 5 years old now. In the end I got the 12-core Ryzen 3900X, which has served me really well. I'm actually thinking about upgrading now. I won't go Intel because they've had huge problems with their latest generation of chips. The new Sonar seems to have improved its multi-thread performance, so at some point I will go for a 16-core, probably the 9950X, but I'll wait a while for it to come down in price. I've also built 3 other PCs in those 5 years, one gaming rig for each of my kids, and went for AMD each time, partly so that when I upgrade, one of them can have my old CPU, which will be faster than theirs. Back in the 90s I got the original Auto-Tune plugin, and I had to upgrade from a 486 DX4-100 to a Pentium 75 just so I could run one instance of it.

-

I just got the new Cakewalk Sonar update and, WOW!

Mark Morgon-Shaw replied to RexRed's topic in Cakewalk Sonar

Clearly you have never worked in marketing.

-

And the arrangement is more important than the mix. And the performances are just as important too. We could go on to the writing etc. being more important than all of the above! So yeah, mastering only makes the final few percent of difference. Honestly, unless it's a commercial release, one of the many AI-assisted mastering services will get you 80-90% of the way there.

-

I just got the new Cakewalk Sonar update and, WOW!

Mark Morgon-Shaw replied to RexRed's topic in Cakewalk Sonar

Weird that there hasn't been much fanfare. Where are the magazine articles and media hype? It's almost as if Cakewalk Sonar has slipped out through the back door.

-

Thanks, Mark. Megatrax is a big site where a lot of the production companies that make broadcast TV go to get their music, so it's a good platform to get on if you are aiming for sync placements. MLB wasn't the specific target. The original brief was for sport songs with a female empowerment vibe, because it was in advance of the Women's World Cup (soccer) at the time and they wanted to put an album together with that purpose in mind. Ours was one of 16 tracks on the album but was selected to be track one, which normally gets the most exposure. Our track was also selected for the social media campaign when the album dropped, which was cool, but no World Cup placements materialised. Over time it has had airplay on German TV, French TV, American football on TNT and now MLB, so it all worked out in the end. We haven't been paid any of the royalties for it yet, as they take 1-2 years to get processed and arrive at the respective performing rights organisations. It should make 4 figures for us both eventually, but TV music is a lottery, so we'll have to wait and see.
https://www.instagram.com/p/Cq-tAkGtP7t/?utm_source=ig_web_copy_link&igsh=MzRlODBiNWFlZA==

-

Thanks, Nigel!

-

I've never used the Waves products, but I've owned a pair of Slate VSX headphones for almost 2 years now. I do find them useful, but I don't use them as a full replacement for working on my monitors. My room is acoustically treated and measured, then further honed with ARC Studio, so it's a decent environment to mix in and I know my mixes will translate pretty well once they leave my studio. That is imperative when writing tracks that will ultimately end up on TV/radio.

Where VSX works well for me is the following:
1 - A reliable simulated mix environment to work in at night when other family members are sleeping.
2 - A fast way of double-checking mixes without needing to take them elsewhere, like the car.
3 - A way of shutting out the outside world when working on a track that benefits from being cranked up in a more vibey-sounding space.

I find they do the job well for all of these, but I do agree they have been somewhat overhyped. Everyone's circumstances are different, and mixing on my actual speakers is much preferable, but there are things I can't hear on them that I can pick up on VSX. This is especially true in the really low end, as I don't have a sub but some of the emulations do.

Everyone's workflow is different, so whether you need a product like this depends on your needs. Personally, I write about 100 tracks each year, so I need to be able to get things done quickly for deadlines etc. I don't have the luxury of time to take my mixes other places and listen to them, or to stop working because the kids are in bed, so it's great for that. I don't find the 'illusion' massively convincing, but it is better than just using normal headphones. When I play mixes I made on them through real speakers, they sound the way I thought they would, which isn't the case with standard cans.

The one thing in them that does sound real is the Avantone MixCube in Steven Slate's studio, and I think that's because it's a mono speaker, so the illusion of it coming from a real speaker is much stronger. I have a real MixCube and the emulation is virtually indistinguishable, so that's a really useful tool if you don't already own one, as they cost around £250 to buy.

I know some other guys who work for sync music libraries and publishers who swear by VSX, but they're not working in properly treated rooms. Some of them also have mobile rigs and work in different locations, so it's obviously a boon to be able to 'take the studio with you'. I think they get more use out of theirs than I do out of my set.

The other good thing is that they keep updating the software. We now have a system-wide app that wasn't included when I originally bought them, and they've improved the room models by developing new techniques to measure them. There is a V5 of the software in beta testing which, I hear, will include a hearing-test-type setup so they are much better tailored to each person's ears. It will also include improved modelling that they figured out when they added some new rooms recently.

As ever, YMMV, so choose wisely.

Mark

-

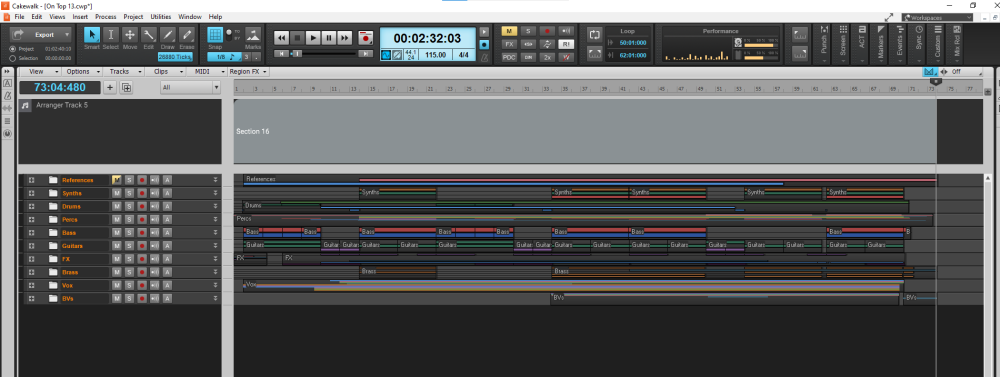

I mostly do instrumental music, but a song I co-wrote with Canadian singer Michelle Lindley has been on the MLB Network these past few weeks. They seem to favour the instrumental version, though, as they can talk over it 😆 Our song is called We're On Top and you can listen to it here:
https://www.megatrax.com/tracks?q=We're On Top
I wrote the core of it in CbB before sending stems to Michelle to track her vocals in Cubase. She then sent the vocal stems back via Dropbox and I finished the mix/master in CbB. It took 2 weeks and comprises 60 tracks in total. File Stats say I spent 60 hours on it (File Stats are in the Notes section of the Browser, for those who have never clicked there). Here is a screenshot of the final project for those interested.

-

Seeking clarification on purchasing Sonar

Mark Morgon-Shaw replied to norfolkmastering's topic in Cakewalk Sonar

Like X-Sampler, for example.

-

I felt the same about X1 and stayed on 8.5 until CbB was released. I think it was 9 years, and it never let me down. I feel like I'm in the same boat again. There are lots of nice improvements in the new Sonar, but no killer "must-have" feature for my own workflow. To my eyes the GUI is also messier and harder to work with, and I find it slows me down, so I prefer to stick with CbB. I hope they improve it, though.

-

https://www.soundonsound.com/reviews/projectsam-symphobia-2
https://www.soundonsound.com/news/lumina-projectsam-now-shipping
https://www.soundonsound.com/reviews/projectsam-symphobia-4-pandora

-

I prefer Symphobia over Albion One. They have a load of free stuff over at the ProjectSAM site, so you can try selected instruments risk-free. The interface is also much better on Symphobia.

-

Computer upgrade seems useless

Mark Morgon-Shaw replied to Cobus Prinsloo's topic in Cakewalk by BandLab

Network Interface Card.

-

Yes, I've tried all of them. I have been a Mercury user ever since whichever version it was introduced in, many years ago. Even before themes were introduced, the basic GUI looked closer to Mercury than to anything else, so it has kinda been the default since the dawn of the app. Mercury Classic is obviously the closest design, but it's the flatness that bugs me, not the colours. I don't like dark GUIs or overly colourful ones. I feel no need to colour anything, and I don't even use the Console view for anything other than looking at buses.

-

You can do everything totally in the box, even the guitars.

-

How to use reference tracks without having to buy them?

Mark Morgon-Shaw replied to T Boog's topic in Cakewalk by BandLab

I use somewhere between 300 and 400 reference tracks a year. You can grab them straight from YouTube at 256 kbps, which is perfectly fine for referencing purposes. Most of what makes a great mix isn't in the super hi-fi detail anyway (i.e. a great mix will sound great on a crappy radio as well). It's much more about arrangement, mix balance, midrange translation, production tricks, sound design etc. than the ultimate fidelity of the file.

-

Computer upgrade seems useless

Mark Morgon-Shaw replied to Cobus Prinsloo's topic in Cakewalk by BandLab

No, it won't do anything within the app to optimise your PC. It will just help you identify what's causing the issue. On the system I have now, it was the NIC causing clicks and pops. On the system before, it was a sensor inside the case, and as soon as I disabled it the issues were gone. So it's a case of looking at what it's telling you and doing some digging.

-

Best DAW for Seniors

-

And yet... it sounds subjectively better written, performed and produced than most of the stuff in the Songs forum here, probably because it's trained on professionally produced music.

Personally, I don't think we're going to see human music replaced by AI music, but AI music will become another genre alongside all the others. Most people follow the artists/bands they like because they're invested not only in the music but in the person/people too. AI could write the best song in the world, but if there's no human story or personality behind it, listeners can't get as invested. I've followed some of my favourite bands since the 80s and 90s through all the ups and downs, breakups, comebacks etc. It's all part of the rich tapestry of their story, which we connect with on a human level.

What I predict will happen is that we'll get a boom of novelty AI-generated songs, which lots of people will think are cool for a little while. Once the novelty wears off, it will become a bit same old, same old, and people will naturally gravitate back to the actual artists they admire. Like someone said above, if anyone can do it then there's no real value. Things that are scarce have value, and if you commoditise something, it loses its appeal.

In my little niche of the industry, some folks are worried that people writing background music for TV might get replaced by AI. I'm not overly worried myself, for a number of reasons: some musical, some practical, some ethical and some legislative. Basically, for an AI to do what I do would not be that straightforward, and even if it could, changes would need to be made to the legal copyright framework to allow it to happen. More than one production music company that I write for has instigated a "No AI-generated material" policy, because there is a risk of someone being sued if an AI model spits out a hook that's close to another copyrighted work, for example.

Overall, I think it will have its little day in the sun, and once the novelty wears off we'll be back to business as usual, but hopefully with some better tools to help us, not replace us.

Mark (Sync producer with music in over 1K TV shows)


-

The first music software I used was Type-a-Tune on the VIC-20 in 1982: https://www.computinghistory.org.uk/det/20923/VC-20 Type-a-Tune/
However, the first sequencer to use the paradigm of displaying notes that move left to right was likely the Fairlight CMI (Computer Musical Instrument), whose Page R sequencer arrived with the Series II in the early 1980s. This was one of the first digital audio workstations (DAWs) to include a graphical user interface for sequencing.