Everything posted by Starship Krupa

-

It's official: CbB will not continue for long.

Starship Krupa replied to John Vere's topic in Cakewalk by BandLab

Yes. When a program saves a file and the file turns out to be corrupted when you reopen it, the first thing to do is check the integrity of your disk. Drives eventually go bad physically, some sooner than others, and while it's much less common than it was 25 years ago, the file system can get corrupted, too. The way to check is to go to My Computer, then right click on the drive and select Properties. From there, click on the Tools tab and run the error check. Also, Windows Defender is good at what it does (although I have issues with how it does it), but if I'm having any mystery troubles, I also like to run Malwarebytes Free. Try those first, see if they help.

question regarding the new update coming up

Starship Krupa replied to greg54's topic in Cakewalk by BandLab

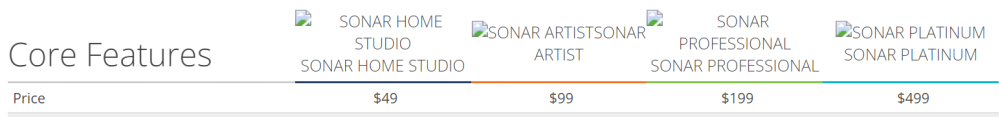

By crippled, do you mean that it didn't have all of the modules that Platinum did? CbB shipped with fewer modules than Platinum as well. It left out a couple of the compressors, such as the PC-2A. Can't remember the other one, maybe the Bus Compressor 4K or Channel Compressor 4K? It also had no Concrete Limiter. Artist, on the other hand, at least according to that chart, only had the Style Dials, with the Style Dials' "back ends," the PX-64 Percussion Strip, VX-64 Vocal Strip, and the TL-64 Tube Leveler probably hidden like they were in CbB (until we figured out how to unhide them). For people who were owners of Platinum and installed CbB, it would have looked and acted like exactly the same program, due to Platinum's extra goodies coming over seamlessly (in most cases). There were a LOT of extra goodies. One of the reasons that I started both the "Favorite Freeware" topics years ago was that CbB shipped with such a bare minimum of plug-ins. Especially in the area of instruments, it's lacking a bunch of Cakewalk-branded ones that even Professional came with.

question regarding the new update coming up

Starship Krupa replied to greg54's topic in Cakewalk by BandLab

Thank you for letting me know this. I wonder why Cakewalk Inc. left those features off the comparison list (or did I just miss them, or were they added after the list was made?). Plug-in Load Balancing is a big plus for me. Still not quite the "many" that Byron referred to, but an important feature to me. I once experimented with a challenging project on my 11-year-old laptop, changed nothing but turning Plug-In Load Balancing off, and it couldn't even play it. The dithering would also be a plus if I ever got projects to the point where I wanted to render them for conversion. I'm not sure why it stirred controversy when I said I thought CbB was similar to SONAR Professional feature-wise. I didn't say "identical." And even now that HIBI has kindly enlightened me, for feature comparison purposes, CbB still seems to me closer to Professional than to Platinum. Nobody outside of BandLab knows what features other than the new GUI Cakewalk Sonar is going to have. I'm sure it will still have Drum Replacer, VocalSync, Plug-In Load Balancing, and the Pow-r dithering options, but what about bundled plug-ins? One thing that would be great would be if the Sonitus Suite could get a makeover and conversion to VST3. Whatever comes with it, I really hope it's not locked to Sonar. I use multiple DAWs and I don't like to use plug-ins that are tied to only one of them (I make an exception for the almighty Quadcurve EQ). My attitude toward bundled plug-ins is similar to @JohnnyV's, I think. Given a $300 price difference, I would also opt to spend the money on other audio tools.

question regarding the new update coming up

Starship Krupa replied to greg54's topic in Cakewalk by BandLab

Hah. You only think you do. Ever hear of The Mandela Effect? I never owned a version of SONAR in the X years. When I started it was still Cakewalk, then it became SONAR. I stopped using it around 2003 or so (SONAR 2.0 maybe?) and then didn't come back until CbB shipped, so I have nothing to go on but the old marketing materials on the website, old reviews, etc. When I first got CbB, I took a look at that page with the comparative features, and it looked to me like almost all of the things that separated Professional (sic) from Platinum were plug-ins, made either by Cakewalk or by 3rd parties. Since Drum Replacer looks and acts like a plug-in and shows up in Browser as one, I thought it was a plug-in, although the web page calls it a Core Feature, as it does half a dozen PC modules, which are plug-ins. After that, we wind up with the differences in "core features" amounting to VocalSync and Theme Editor. Much as I love Theme Editor, and will miss it if it's not going to be a part of Cakewalk Sonar, it's a separate program. Given that, if it's true, you might see why I came to the conclusion that CbB was closer to Professional than Platinum in features. I don't want to perpetuate misinformation if I can avoid it, so if I've got it wrong I want to know before I repeat anything that isn't factual. Someone just saying, "no, you're WRONG" without saying where I'm wrong doesn't help. What am I missing? This isn't just an academic question; the userbase is eventually going to be asked to start paying for Sonar, and it would be nice to know which version Cakewalk Sonar most resembles. Of course we haven't seen Sonar yet, so we don't know what features it will have that CbB doesn't.

question regarding the new update coming up

Starship Krupa replied to greg54's topic in Cakewalk by BandLab

Could you please elaborate? Byron kindly filled me in on how the various versions were actually called "Home Studio," "Artist," "Studio," and "Platinum," and that there were "many more features, not just plug-ins" in Platinum. Are those the things that struck fear in you? If so, I hope that you'll accept my excuse that I was getting my information from the Cakewalk, Inc. website, which according to Byron, got one of the names of their own products wrong. I'm really surprised that nobody ever caught that or alerted them. I also can't figure out why, if there were many more non-plug-in features, they would have a chart that showed only 3, one of which also functions as a plug-in and another that has nothing to do with audio processing.

question regarding the new update coming up

Starship Krupa replied to greg54's topic in Cakewalk by BandLab

Thanks for setting me straight on that. I was misled by the very webpage you linked to, which calls the versions "Home Studio," "Artist," "Professional," and "Platinum." Did you ever point out their error to them back when the site was current? Do you see how I could have been misled into believing that there was a version of SONAR called "SONAR Professional?" Since your knowledge obviously eclipses my own, I beseech you to tell me what the "many more features" that were not plug-ins were. Please? The web page you linked to must be incorrect about this as well as the product names, because what it shows me is only three non-plug-in features that Profession..., sorry, "Studio" (sharp-eyed guy you are, how could their own website have gotten it wrong?) lacked in comparison to Platinum: Drum Replacer and Theme Editor, which were optional when CbB was first released (don't know if they still are), and VocalSync. On my systems, Drum Replacer installed as a plug-in when I tried installing it, so please forgive me for thinking of it as a plug-in.

Lost my Microsoft GM driver with CbB 2023.09 - help please

Starship Krupa replied to John Speth's question in Q&A

So you consider suggestions to substitute a superior synth that you already have to be "noise?" Well, whatever. Using TTS-1 in Cakewalk will allow you to play back GM files and have them sound better than the way that has stopped working.

Going by their avatar photo, I'd say that Eden is female....

-

How to inactivate the automatic noise supressor? I can't record beatbox.

Starship Krupa replied to 天近藤's question in Q&A

What do you mean by "beatbox?" That word has more than one meaning in English. Is it a virtual instrument? A piece of hardware? Sounds made with the mouth? Cakewalk has no "automatic noise suppressor." Why do you think that such a thing is the problem?

That's what I do, too. Inspector is only open for things that I can only do in Inspector, like use the arpeggiator, set clip colors, etc. I've always suspected that the Track Inspector is there because it can be nice for working on a laptop or other single-screen situation. Then there's the Craig Andertrick of having different strip modules in your Track Inspector and Console Views, but that's a pretty special case....

-

question regarding the new update coming up

Starship Krupa replied to greg54's topic in Cakewalk by BandLab

Oh how I wish that people would stop repeating figures like that. Cakewalk By BandLab, upon its first release, was similar in feature set to SONAR Professional, a program that listed for $199.00 6 years ago. CbB was never "SONAR Platinum, but now FREE!" SONAR Platinum was a suite that bundled SONAR Professional with a huge pile of plug-ins. I think maybe half a dozen (if that) of the plug-ins that came with Platinum were included with CbB, and I believe there are a couple that Professional had that CbB doesn't. We can assign any personal value we want to CbB, but its closest antecedent sold for $199. If I were shopping for a DAW today, and had to choose between SONAR Platinum and SONAR Professional, I'd go with Professional, no question. I have all the plug-ins I need, and THEN some. It's a different scene now; many more companies are offering loss-leader freebie mixing and instrument plug-ins, and many other people are using software tools that didn't exist back then to make free ones. I could comfortably do what I do with nothing but free software, it's just more fun to shop and get exactly what I want.

It's official: CbB will not continue for long.

Starship Krupa replied to John Vere's topic in Cakewalk by BandLab

There's been no official statement to the effect that this is scheduled or foreseen. BandLab have kept the registration server for SONAR, which isn't even their product, alive for over 5 years since the demise of Cakewalk, Inc. And there's no end to that in sight either. Anything is possible, but based on the wisdom and good citizenship BandLab have displayed in the past 5 years, with zero, zip, nada, no unethical moves ever in regard to Cakewalk and/or the legacy SONAR userbase, I suspect that anyone who's using CbB now and wants to keep using it and not upgrade to Sonar will be able to do so for a reasonable time period, by which I mean "a matter of years." This is all speculation on my part. Past performance is no indication of future blah blah.... If by "at some point" you mean when/if BandLab divests the product line or goes under, well, anything's possible. As with any software, as well as food, water, and transportation, have a contingency plan. And as I've mentioned before, if for some reason you get caught out, you can always unplug your DAW from the Internet and set its clock back to a time when CbB was registered.

It's official: CbB will not continue for long.

Starship Krupa replied to John Vere's topic in Cakewalk by BandLab

Did you contact official support? This forum is for peer support, so there's no guarantee anyone is going to have an answer for you. That's what official support is for. The devs don't read and respond to every message; customer support is not their job. As far as support goes, their job is probably to answer email requests, period.

WASAPI Exclusive: Push or Event?

Starship Krupa replied to Starship Krupa's topic in Cakewalk by BandLab

Okay, I Googled harder and found the answer to my own question. Cakewalk uses Event mode. "WASAPI has four different modes of operation. SONAR supports WASAPI Exclusive mode using event signaling, because this mode is best suited for audio programs." https://gaga.cakewalk.com/Documentation?product=SONAR X2&language=3&help=AudioPerformance.28.html Oddly, the documentation for CbB changes this to: "WASAPI has four different modes of operation. Cakewalk supports WASAPI Exclusive mode and WASAPI Shared mode." https://legacy.cakewalk.com/Documentation?product=Cakewalk&language=3&help=AudioPerformance.29.html This could be one for @Morten Saether. Maybe it could say "Sonar supports two different modes of WASAPI operation, WASAPI Exclusive mode and WASAPI Shared mode. Sonar uses event signaling for WASAPI Exclusive mode, which is best for audio programs." Or something. It's not like it comes up very often.... (apologies to the "it's not possible for DAWs to sound different from each other" crew)

This is one for @Noel Borthwick. Does WASAPI Exclusive Event allow for lower latency (and/or jitter) than WASAPI Exclusive Push? Which method does Cakewalk use? Conditional question: if Cakewalk only allows Push, might there be benefits latency-wise (and/or jitter-wise) to having the option in Sonar to use Event? tl;dr: I was messing about with my music player apps, which offer different options for WASAPI Exclusive, so I looked it up. Event (where the DAC controls the flow of data, continuously "pulling" it from the app) is said to offer lower latency on devices that support it. Push (where the app "pushes" data to the DAC) is said to be more compatible with older devices but has higher latency. Another aspect is jitter. Event relies on the clock in the interface for timing whereas Push relies on the computer's clock. Interface clocks are known to be more stable, and audio quality can suffer when there's more jitter. This made me curious as to which of these Cakewalk uses, the idea being that if it uses Push, might having Event as an option for WASAPI Exclusive allow for lower latency (and/or jitter)? Optional, 'cause we wouldn't want to break anything. It could be like Thread Scheduling Model. Only for use on systems that support it. It works a treat with my laptop's Realtek.
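For anyone who wants to see the difference between the two models in code, here's a rough toy sketch in Python. It makes no real WASAPI calls, and every name in it (`ToyDevice`, `run_push`, `run_event`, `on_buffer_ready`, `sleep_frames`) is made up for illustration. It only shows who decides when the app writes audio: in Push the app wakes on its own timer and tops up whatever space is free, in Event the device signals each time it has finished a period. It doesn't measure latency or jitter, and it says nothing about how Cakewalk actually implements either mode.

```python
from dataclasses import dataclass


@dataclass
class ToyDevice:
    """Toy stand-in for an audio endpoint buffer (hypothetical, not a WASAPI object)."""
    capacity: int        # total frames the buffer can hold
    queued: int = 0      # frames written but not yet played
    underruns: int = 0   # frames the device wanted but did not find

    def play(self, frames: int) -> None:
        """Device side: consume frames on the device's own clock."""
        played = min(frames, self.queued)
        self.queued -= played
        self.underruns += frames - played

    def write(self, frames: int) -> int:
        """App side: queue frames, clipped to the free space."""
        accepted = min(frames, self.capacity - self.queued)
        self.queued += accepted
        return accepted


def on_buffer_ready(dev: ToyDevice, period: int) -> None:
    """Runs when the device signals; writes exactly one period."""
    dev.write(period)


def run_push(period: int, sleep_frames: int, cycles: int) -> ToyDevice:
    """Push: the app sleeps on its OWN timer, then polls and tops up the buffer.

    sleep_frames is how many frames the device plays while the app sleeps
    (an assumption of this toy model; the app timer is not tied to the device).
    """
    dev = ToyDevice(capacity=2 * period)
    dev.write(dev.capacity)                  # pre-fill before starting
    for _ in range(cycles):
        dev.play(sleep_frames)               # device keeps playing while app sleeps
        free = dev.capacity - dev.queued     # app asks how much space is free
        dev.write(free)
    return dev


def run_event(period: int, cycles: int) -> ToyDevice:
    """Event: the DEVICE wakes the app each time one period has been played."""
    dev = ToyDevice(capacity=2 * period)
    dev.write(dev.capacity)                  # pre-fill before starting
    for _ in range(cycles):
        dev.play(period)                     # device finishes a period...
        on_buffer_ready(dev, period)         # ...and signals the app
    return dev


if __name__ == "__main__":
    print("push, app timer keeps up:", run_push(480, 500, 100).underruns)
    print("push, app timer too slow:", run_push(480, 1000, 10).underruns)
    print("event:", run_event(480, 100).underruns)
```

In this toy, Push stays glitch-free only as long as the app's own sleep is shorter than the buffer, while Event never writes until the device asks. In the real API, as far as I know, event-driven operation is requested with the `AUDCLNT_STREAMFLAGS_EVENTCALLBACK` flag and `IAudioClient::SetEventHandle`, but nothing above depends on that.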

-

I'm surprised that I didn't address this a couple of years ago. In my varied career (I'm like @bitflipper in that regard) I've worked for software companies both tiny and huge. Hardware/software integration, bundling deals, etc. were invariably the bailiwick of the marketing department. The marketing people would get together and schmooze, do lunch, hang out at NAMM, and either work out concrete deals, or just do what they could to convince their colleagues that mutual support would be beneficial to both companies. Anyone who's worked in "shrink wrap" (as we used to call it back when software shipped in boxes on CD or DVD ROMs, but it meant "consumer" rather than corporate developed-in-house software) can probably attest to this. Despite the many benefits of BandLab's stewardship of Cakewalk, this is one area that, at least up to this point, doesn't seem to have been covered. Please correct me if I'm wrong, but CbB has always relied on the simple fact that it's free to do its marketing (along with the stalwart advocacy of many YouTubers). I recall one appearance by the team at the 2019 NAMM Show, but there was a long gap between NAMM Shows, and these days, fewer and fewer companies even participate. As it transitions to payware, I hope that along with the revenues comes greater attention to marketing, in this and other areas. I, and probably anyone else who's worked in development for shrink-wrap, used to roll my eyes whenever a marketing person would approach, because they were known for sometimes having unrealistic ideas about what would be possible and desirable. In retrospect, though, I realize more of what value they actually did bring to the table. They are the "boots on the ground" out there researching what (they think) people want, what new features might be important to add, etc. What they say of course should always be....reviewed, but there's great value in having a dedicated person or staff to do that stuff.
Even if the developers are all musicians or whatever, they may not be working in the latest genres, etc. There have recently been more requests on this forum for better controller integration. This may be due to more younger people adopting the software and wanting to use it with pad controllers and the like. I'm an older people and I want to get more into realtime control. I don't have a pad controller yet but I will someday. I need to crack my knuckles and spend some time in the woodshed with Ableton Live and Matrix View. I have one control surface, a Korg nanoKONTROL 2, and it's been an absolute pain in the rear to get it working with Cakewalk, despite the fact that one of the developers uses one in his own studio. For any who might question whether "official" support by the hardware company is that important, it would be good to know that both Cakewalk and the other company have your back if you have issues. I don't worry about this with plug-ins, because the companies I spend money with either state CbB compatibility or are reputable and established enough that if anything goes wrong, either the Cakewalk engineers or the plug-in developer will fix it pronto.

-

TBF, though, a large percentage of regular forum participants (as opposed to lurkers) are people who got burned by your old bosses. I'll spot them a bit of FUD, although in their 6 years of driving the bus, BandLab have never shown anything other than exemplary behavior toward the userbase. Keeping the legacy registration servers lit for a company that went under 6 years ago? Something I used to point out way back in 2018 on the old forum when people would suggest that CbB would become a trojan data harvesting app, that BandLab would own all your music, ad nauseam: such behavior would ruin a company's reputation forever. A lot of time, money and effort to spend for that result. I (and many others) have some projects that I wonder whether I'd finish if I had until the heat death of the solar system to do so. There are of course no plans to lock anybody out. BL have said that registered and validated installations of CbB will continue to function. And given their past behavior in regard to how you can still download and install SONAR, I don't expect that to change. CbB will be a legacy app just like SONAR. I'm certain that if someone contacted Cakewalk support and told them that they lost their BandLab username and password and now their old copy of CbB is in demo mode, whatever, they'll be taken care of. They do that for SONAR. And given that stuff like SONAR 8.5 still (mostly) works even though there are CbB users who were not even born when it was discontinued, it should be decently immune to being broken by Windows updates for a good long while. I once pointed out that if BandLab vanishes in a puff of smoke, as a last resort, unplug your computer from the internet and set its clock back to whenever CbB was last validated. BTW, re: the original topic, BandLab/Cakewalk are claiming over 2M users in their marketing material for Cakewalk Sonar. As I pointed out back then, they have the means to know this.

-

Sucka free over here.

-

MCompressor from the MeldaProduction FreeFX bundle is the one that most helped me understand compression and how to set up a compressor. It's both a great learning tool and a great compressor. If you haven't yet purchased the upgrade for the FreeFX bundle (and if not, why for heaven's sake), it's very versatile without the upgrade.

-

Have you experienced or heard of an audio plug-in being or delivering malware or harming someone's computer? I'm curious because I've downloaded and tried every freeware plug-in I've heard about for the past 10 years (hundreds of them) and never had one turn out to be destructive. A number of these free plug-ins are ones I consider essential.

-

Lost my Microsoft GM driver with CbB 2023.09 - help please

Starship Krupa replied to John Speth's question in Q&A

Snark aside, I suspect that you'll have better luck using the included TTS-1 synth for playing back General MIDI. It should work, and it will definitely sound better.

Are you saying that you bypass the modules, then close the ProChannel, then open ProChannel, after which the modules are not bypassed? If that's the case, have you checked the state of your Read Automation and Write Automation buttons on the tracks in question? When I first started using Cakewalk, I got into trouble because I had been making fader moves and such that were being written and read as automation when I didn't want them to be. I just wanted the fader to go where I put it and stay there. So try it: if you disable automation read on the tracks, does it still happen?

-

My M Audio monitors died on me yesterday .

Starship Krupa replied to kennywtelejazz's topic in The Coffee House

So convert them from active to passive: wire them so that the amp part is out of the circuit. Get a nice little 35W stereo power amp to drive them.