Posts: 8,664

Days Won: 30

Everything posted by Starship Krupa

-

Cakewalk by BandLab is free; Cakewalk Sonar requires purchasing a BandLab Backstage Pass membership. Make sure you've downloaded and installed the correct product.

-

Sounds pretty cool. Those fills and rolls and stuff are not easy to program from scratch. Impressive. I'm a drummer, and the drums sound realistic enough for contemporary styles. So many live drum performances these days are gridded after being played that the lack of timing imperfections isn't as much of a giveaway as it used to be, and if it's going to be a loud, busy rock mix, the instruments that you do play live will help cover for that. If you haven't already done so, nudging velocities at random spots can help further the illusion of a live drummer. I'm not versed enough in post-hardcore to say for sure, but the one thing I might adjust would be to use a snare sound with more audible snare wire. That's just a personal thing; I do not like the pank-pank snare sound. I loved The Police, and Copeland's drumming, but that damned clanky steel snare detracted from their sound (to my ears). I play an Acrolite (aluminum) and tune it deeper and wetter, but I don't play post-hardcore either. Since your drums are canned, you can swap drum sounds at will. It all depends on how it sounds in the mix, though, as well as personal preference and the traditional sounds of different genres. What you have here should work fine.
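A minimal sketch of the "nudge velocities at random spots" idea, written in Python purely for illustration; it is not a Cakewalk feature or API. It assumes the drum part is available as a plain list of MIDI note-on velocities, and the function name and the `fraction` and `max_nudge` defaults are hypothetical choices, not recommended values.

```python
import random


def humanize_velocities(velocities, fraction=0.25, max_nudge=8, seed=None):
    """Return a copy of `velocities` with a random subset of hits nudged.

    velocities: MIDI note-on velocities (1-127), one per drum hit.
    fraction:   chance that any given hit gets nudged (hypothetical default).
    max_nudge:  largest up/down change applied to one hit (hypothetical default).
    seed:       optional seed so the same "performance" can be reproduced.
    """
    rng = random.Random(seed)
    result = list(velocities)  # work on a copy; the input list is left alone
    for i, velocity in enumerate(result):
        if rng.random() < fraction:
            nudge = rng.randint(-max_nudge, max_nudge)
            # Clamp to 1..127: a velocity of 0 would act as a note-off.
            result[i] = max(1, min(127, velocity + nudge))
    return result


if __name__ == "__main__":
    # Sixteen straight hi-hat hits, all programmed at the same velocity.
    hats = [100] * 16
    print(humanize_velocities(hats, seed=1))
```

Keeping `fraction` well below 1.0 leaves most hits untouched, which matches nudging only at random spots rather than reshuffling every note.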

-

Thanks to the MEGA sales of old, I have almost the entire Unfiltered Audio line, which is great stuff. Of the rest, SPL Vitalizer is great, elysia mpressor and alpha master are great, and Shadow Hills Mastering Compressor is very nice. Lindell TE-100 is....interesting, but in my post-Plugin Doctor life, less so now that I can duplicate its mojo-ridden curves with just about any parametric EQ. That leaves about 30 more that....have the best skeuomorphic graphics in the business? But I never paid for any just for the sake of getting them; I got 'em for free using $20 no-minimum vouchers, 'cause what the heck. If someone else wants to mix on my system and can't get their head around the MeldaProduction UI, I can point them in the other direction, as it were.

-

MeldaProduction Piano Day Sale (Exclusive) - Meldaway Grand for $35

Starship Krupa replied to cclarry's topic in Deals

Best-sounding virtual grand I've ever played. If you want it, though, you might hold off until MSoundFactory LE comes up in an Eternal Madness or periodic 50% off sale. It comes with every MSoundFactory instrument, which includes Meldaway Grand. The Monastery Grand that comes with the free MSoundFactory Player ain't no slouch either.

-

No personal experience with it here. First question: do you mean BandLab, the online and mobile DAW, or Cakewalk by BandLab, the Windows desktop DAW that is the focus of this forum? If you mean Cakewalk, there's no reason it wouldn't work. It requires sidechaining, which works splendidly in Cakewalk. I use Trackspacer, the product it seems to want to compete with, and it works great in Cakewalk. As others have mentioned, it's always best practice to trial software before buying, if you can.

-

I agree with this (maybe not ALL of them). IME, the bugs usually come down to host-specific issues. My guess is that Saverio doesn't test in a wide variety of hosts before he ships. He probably doesn't have a QA staff, and his site has no invitation to become a beta tester. If nothing else, a beta program would allow him to check compatibility on a wider variety of hosts before releasing. I bought his latest offering, which claims to produce similar results to Oeksound Soothe, and it doesn't work in Cakewalk (yet). There's really no excuse for this; Cakewalk is a free DAW. And I'm sure he could easily get NFR copies of any DAW he wanted from the manufacturers. It took me a matter of minutes to find out that it crashed in Cakewalk. That said, he does seem very diligent about fixing these problems. Doing it before he starts selling the plug-in would be a great idea.

-

If I wanted to do this, I'd probably go with the second option: automate the reverb mix on a single track (or the send level, if you're using the reverb as a send effect on more than one track). That's not to say your first method isn't valid, but it does seem more complex. Another way to do it would be to split your track into clips and move the clips onto two different tracks as needed, but I still think automating the reverb depth is the way to go.
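To make the automation option concrete, here is a rough Python sketch of how a reverb-mix envelope might be evaluated between its nodes. It assumes straight-line (linear) segments between nodes and holds the first/last value outside them; a real DAW envelope may offer other curve shapes, and the function name, times, and mix values below are invented for illustration.

```python
from bisect import bisect_right


def envelope_value(breakpoints, t):
    """Return the automation value at time t (seconds).

    breakpoints: list of (time, value) nodes sorted by time, e.g. reverb
                 wet/dry mix from 0.0 (dry) to 1.0 (fully wet).
    Between nodes the value changes linearly; before the first node and
    after the last node, the nearest node's value is held.
    """
    times = [node_time for node_time, _ in breakpoints]
    if t <= times[0]:
        return breakpoints[0][1]
    if t >= times[-1]:
        return breakpoints[-1][1]
    i = bisect_right(times, t)  # index of the first node strictly after t
    (t0, v0), (t1, v1) = breakpoints[i - 1], breakpoints[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Hypothetical song: a fairly dry verse, a two-second ramp into a wetter
# chorus, then a quick one-second ramp back down.
reverb_mix = [(0.0, 0.15), (30.0, 0.15), (32.0, 0.45), (60.0, 0.45), (61.0, 0.15)]

if __name__ == "__main__":
    for t in (10.0, 31.0, 45.0, 90.0):
        print(t, round(envelope_value(reverb_mix, t), 2))
```

With this approach, the single-track option comes down to placing a few nodes where the mix should change, rather than managing clips spread across two tracks.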

-

Mod Filter is a DXi effect, so in order to use it in another host, the host must support DXi effects. AFAIK, the only host other than Cakewalk that still does is REAPER. Live does not.

Depending on what you're doing with it, there are several freeware motion filters. Check the Favorite Freeware FX topic in this forum. My favorite is Audiomodern's Filterstep. FKFX Influx is another one that can get some really crazy sounds.

The MeldaProduction FreeFX Bundle contains more modulation plug-ins than I can remember at the moment; MComb is my favorite of the filter plug-ins. Moreover, the bundle is something I think nobody should be without. You'll see once you install it. Several of the plug-ins are, IMO, best in class despite being free. MCompressor alone will teach you a lot about how to set up a compressor. Although fully functional as-is, the bundle can be upgraded for a fee. If you wait a few months for one of their 50% off everything sales and combine that discount with the $10 credit you get for signing up for their newsletter, you can get the upgrade for about $11. There are 37 plug-ins total in this bundle.

Kilohearts Essentials is another free bundle that nobody should be without. It contains multiple modulation and filter plug-ins in addition to bread-and-butter FX and utilities: over 30 great FX.

About the bundles: although I own licenses for over 400 effect plug-ins, if I sat down at any DAW to produce and/or mix any genre of music, give me those two bundles and I would not feel constrained. Crazy breakdown FX for EDM? Check. Great-sounding and easy-to-use dynamics and EQ? Check. Deep features and modulation options? Check. So there ya go: ask a question, get 75 new plug-ins for free. Also, doesn't Ableton Live come with some crazy modulation filters of its own?

Lastly, you need not say goodbye to Cakewalk. It can still be useful even if it's no longer your primary DAW. More than one user on this forum (including industry legend Craig Anderton) likes to compose electronic or loop-based music in Live, then export the tracks to Cakewalk for final mixdown. Not an uncommon workflow. In comparison with Cakewalk's Console, you may find Ableton's mixer....less capable.

-

Converting Audio to Midi - having problems

Starship Krupa replied to Roy Slough's topic in Instruments & Effects

Are you 100% sure about needing a registered version of Melodyne for this to work? My understanding was that although CbB doesn't come with a license for Melodyne Essentials (as SONAR did), only a 30-day demo, the monophonic audio-to-MIDI conversion feature still works after the demo has expired. None of the other Melodyne features will work, only this one. The Cakewalk Reference Guide makes no mention of needing a registered version of Melodyne for this. I can't test it myself, because I do have licenses for Melodyne Essentials. Anyway, I agree that Drum Replacer is a better option for replacing drum sounds.

-

Give Acon Digital Multiply a try in CbB and note the difference in sound between the VST2 and VST3 versions. Be sure to turn your monitors down, because the last time I tried it, the VST3 version seemed to be feeding back on itself: it jams the meter to full scale, then goes silent. Mixcraft, which is otherwise known for being one of the most bug-free DAWs on the market, took a long time to get most VST3s working properly after it started supporting them. I stopped even trying to run them for a while. So "how they communicate with the host" seems to be pretty crucial.

-

Oops! Sorry. Pot = potentiometer = variable resistor. Most continuously adjustable controls are pots (with digital encoders the next most common). The term is used more for rotary controls: volume pot, tone pot, treble pot, bias pot, pan pot. The straight-line ones on analog mixing consoles (faders) are also potentiometers. Tiny ones meant for making precise adjustments are "trim pots."

-

Become known in the neighborhood as a person who's interested in fixing up old instruments! One time, my neighbor across the street had about 3/4 of an old CB700 drum kit out on his lawn, ready to be dumpstered. He knocked on my door and asked if I wanted to haul it off instead. No snare, one really ratty cymbal stand, no kick pedal, no throne, but it had a bee-yootiful Rogers "Big R" hi-hat stand (the same model Bonham preferred). Not knowing anything about putting together a drum kit, I figured I'd fix it up and donate it to a school or something. Another neighbor donated an ancient Japanese marching snare. I started setting it up, got various parts from a local used instrument store and Craigslist, some Zildjian ZBT cymbals....and started playing it. Got completely and thoroughly hooked on playing drums. Cut new bearing edges, rewrapped the shells.... Fixing up that kit turned me into a drummer! It's now my favorite instrument. I later got a sweet vintage Slingerland set on Craigslist and fixed that up as well. I kept the CB as a gigging kit, but gave it to a friend a few months ago in preparation for moving. It's found a good home in his studio and will never see a dumpster.

-

You must have missed my post. That's exactly what I suggested.

-

Does Steinberg (Cubase) hears the drumbeat?

Starship Krupa replied to Misha's topic in Cakewalk by BandLab

I still keep my Mixcraft license current. I was a beta tester for the product before I mostly switched to CbB. At the time, Mixcraft was still lagging behind CbB in features, and CbB's mixer was love at first sight. Mixcraft is straightforward and a great DAW for someone just starting out with DAWs. The developers' commitment to shipping a quality, bug-free product is amazing. Good forum, too. Given how much Mixcraft has caught up to the industry leaders, I see it as pretty close competition for Sonar. For quite some time now, it has had some slick features that Sonar lacks, most notably the integrated samplers. Its Performance Panel is also better integrated than Matrix and allows direct recording to cells, etc. Every plug-in gets a little meter that helps a lot with effect gain staging. Routing a MIDI track to multiple VIs is dead easy. AFAIK, though, there's still no way to collapse take lanes, so if you're a multiple-take monster, things can get cluttered pretty quickly. And the mixer, while it's made strides, is still no match for Console.

-

Can somebody help me with the item in Smart Tool please

Starship Krupa replied to Misha's topic in Cakewalk by BandLab

Try the Ctrl key. BTW, if you use the F keys to switch tools, you can hold down the function key (in this case F8) to switch to the tool you want; keep holding it, and when you release it, Cakewalk automatically switches back to whatever tool you were using before (in this case the Smart Tool).

-

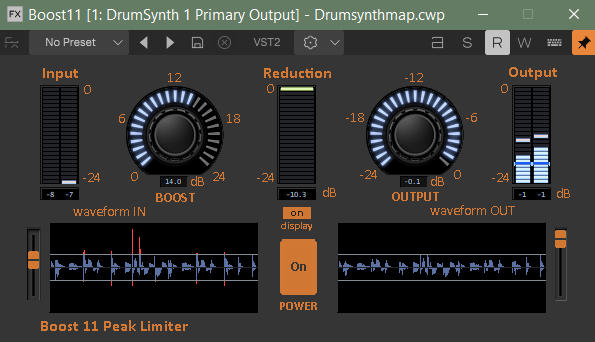

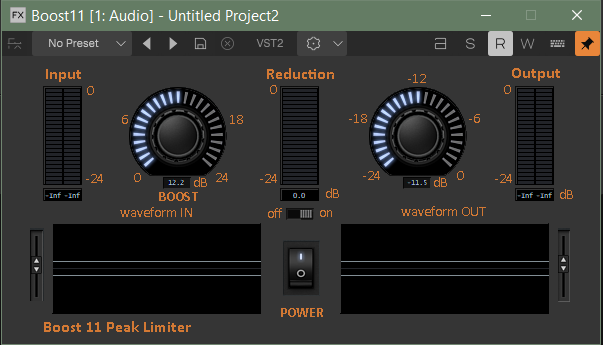

I finally sorted out what's going on with the graphics resources for the older Cakewalk plug-ins. My confusion came from having the same plug-ins installed in two different locations. These plug-ins just pull their bitmaps from the \resources folder inside the folder the plug-ins' .dll file resides in. You just have to take care that your plug-in is actually loading from that location. Without further ado, here's a work in progress, Cakewalk Sonar-ized Boost 11:

-

Does Music Theory REALLY Kill Creativity?

Starship Krupa replied to Old Joad's topic in The Coffee House

I think the term "underlying principles" would be a better one, but "Theory" is the one we're stuck with. I have rock 'n' roller friends who write songs, have made albums, etc., who claim that they don't know "theory." Then I point out to them that they can hear "bad" notes and tell when a chord doesn't fit. And that's "theory." "Theory" is just a way of writing that down and being able to talk about it. It's the nuts and bolts of what we can already hear, what we know instinctively.

It's the same as what native English speakers are taught in English class: breaking down sentence structures and all that. People learn to speak English "by ear," then learn the theory later. Even in literature class, with themes and the like: if someone tells a long story, it will have a theme, a protagonist, etc., without the storyteller being consciously aware of it.

For me, the question isn't whether learning theory "does" hinder creativity, but whether it "can." I have also known some musicians who had a hard time improvising because they were thinking too hard about whether what they were about to play fit whatever rules they had learned: how exactly you're supposed to play across changes and so forth. When I write lyrics, even if I start out trying to just free-verse it, it always ends up having a metre and rhyme scheme. I put this down to learning those things in English class in high school. It's nuts; there were decades between when I learned that and when I started to write lyrics, but it's there. Does it hinder my creativity? Maybe. Some part of my brain is sorting out the words and fitting them into a metre and rhyme scheme.

It goes the other way, too. I can improvise like crazy on keyboards because I know the scales better. Once I figure out what key the song is in, I just rip modally, playing whatever feels good. Just let go and don't play any bum notes. It's harder on guitar because after all these decades, I still haven't memorized the fretboard above fret 5, and haven't committed the scales to muscle memory.

-

Great Guitars...That Suck to Own

Starship Krupa replied to kennywtelejazz's topic in The Coffee House

How I hate to hear this. That is the death of a business district; people who repair things are rare enough without having to face things like this. I'm curious, though: did you ever visit 3 Tracks when it was thriving? Keith is a great repair guy (and the sweetest dude) who learned his chops doing final QA and setup at the National factory in San Luis Obispo. He had a lot of cool old oddball guitars and amps in the store, along with the classic vintage and bargain newer stuff. Just the kind of store that I love to browse.

-

Great Guitars...That Suck to Own

Starship Krupa replied to kennywtelejazz's topic in The Coffee House

I'll not get deeply into it because of forum rules about politics. I'll just say that I call myself a "redneck liberal," which means that while I look for progressive solutions, I also have a low tolerance for nonsense, whether it comes from the right or the left. And a district attorney who doesn't do the job is nonsense.

-

A lot of good advice here. I'll add my .02. A big mistake I made when I was learning full barre chords was thinking I had to treat my index finger like a capo, holding down all 6 strings. Obviously, once you think about it, you don't need to fret behind the strings that are already fretted by your other fingers. So, for instance, with an E-shape barre, you really only need your index on the low E and the high B and E. This lets you curve your index finger, which is much stronger than when it's flat. @Byron Dickens' tip about pulling back with your arm muscles becomes more effective, too. I'm now at the point where I can play without planting my thumb. Of course I do use my thumb, but my hand doesn't cramp up from having to use my finger muscles and wrist tendons to clamp so hard.

The other thing is, yes, take it to a pro and have it set up properly for your playing style. A good luthier will watch you play for a couple of minutes to see how hard you pick and strum. People who play harder need higher action; people with a light touch on the right hand can drop it lower. I'm not a basher, so my guitars all have light action. I've found that nut height is often overlooked in favor of bridge height. A good test is to capo at the 1st fret. If the guitar becomes much easier to play, especially campfire chords, then your nut is higher than it needs to be. Make sure your setup person pays attention to this.

Last, the book that helped me the most in understanding guitar setup and repair was Dan Erlewine's. I recommend it for any guitarist, even those who don't want to do their own setups. Just understanding what's going on is valuable for communicating with your guitar tech. And there are some things, like setting intonation on an electric when you change string gauges, that IMO every guitarist should know how to do anyway.
As mentioned by others in this topic, if a guitar sounds good, it IS good, no matter how or where it was built and how much it cost. Case in point: an anonymous neighbor of mine dropped off a CHEAP old Asian-made classical on my porch, complete with a musty-smelling gig bag. It's so cheap that the headstock decal is a foil sticker. The frets were actually pointy on top and serrated. The bridge is held on with a pair of wood screws whose pointy tips I can feel underneath. The kind of sub-beginner instrument that used to put people off learning guitar. But it actually sounded okay, so I leveled and polished the frets, put on a set of really nice tuners that a friend had lying around, took the bridge down, and it's an amazing guitar. It stays in tune, doesn't have the cardboard-box tone you'd expect, and records really well, and being a gut-string, it's easier to play than my steel-strings. And rescue guitars are like rescue doggies: they return the love and are eager to please. If you think I'm kidding about that, I am not at all. I grew up reading Chitty Chitty Bang Bang and watching The Love Bug, and have experienced it enough times to know that it's for real.

My favorite electric is a Squier Affinity Telecaster (absolute bottom of the line) that was literally in pieces when I bought it for $15. Some dip5hit had obviously tried to smash it on stage, but y'know, if you try to smash a Tele on stage, what you end up with is a stage with holes in it. The neck, bridge, and control plate had all been taken off the body, and the bridge pickup was dead. Tuners were missing. Multiple chunks of the thick poly finish were missing from the edges. I put it back together, put some Schallers on it that I had lying around, a Mighty-Mite AlNiCo in the bridge, laid some Krazy Glue in the edge dings to smooth them out, and good lord.
I'm not lying: I have at least four friends (I've lost track of the actual number, to tell the truth) who have told me "if you ever want to get rid of that guitar, tell me immediately." And they are all guys who are into really nice US-made vintage guitars. When people pick it up and play it, they get this look in their eyes....

-

Great Guitars...That Suck to Own

Starship Krupa replied to kennywtelejazz's topic in The Coffee House

I was recently stunned to learn through a mutual friend that Keith Kurciewski, who is a good friend of mine (he used to live around the block from me in Alameda before he got a job with National and then started his own store), is shutting down 3 Tracks and has already moved back to California. Who the hell moves from OR to CA? Then when I found out the reason, that St. Johns has become plagued with crime, I was even more stunned. I drove up to PDX 8 years ago to be there and help him out at the store's grand opening, and I thought St. Johns was a beautiful, charming area that was up and coming, with him moving in and a record store opening across the street. Now he tells me that the record store had to close due to multiple instances of vandalism and burglary, and he's shutting down 3 Tracks! I don't know if you remember, but several years back, there was a break-in attempt at his store that his amp repair guy (my former housemate and protege) fended off by brandishing a socket wrench. It made the local nightly TV news. Who did you find to do the work on your Gibby? Is St. Johns the district you're referring to? People are placing the blame for this stuff on the local DA being as useless as bewbies on a boar hog. Me, I bought cheap guitars from Craigslist and learned how to do my own refrets.

-

More like "oh for the love of Pete, look how many freakin' plug-ins I have." At this point, I don't buy mixing plug-ins unless it's to toss Saverio a few bucks. His VHS is the only one of his I use regularly, just for the EQ curve, not the room emulation. The only effect plug-ins that I'm interested in at this point are sound design-y ones. There are ones in the MeldaProduction MComplete bundle that I haven't even tried yet.