Everything posted by Amberwolf

-

Is there a way to copy a volume automation into a velocity automation?

Amberwolf replied to Keith Young's question in Q&A

You could use a velocity-scaling plugin and reassign the copied volume automation (CC7, etc.) to its corresponding parameter. Unfortunately, the Cakewalk Velocity MFX isn't automatable. I can't remember whether Variorum's MFX are automatable (its "compressor" modifies velocity, so it should be able to do what you're after if it is). I think there's one by NiallMoody for velocity scaling, but I don't recall whether it's automatable. There might be others.
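The Python sketch below shows what a velocity-scaling step does with copied CC7 data. It assumes the notes and the volume CC events have already been pulled out of the track as simple (tick, ...) tuples. The function names are hypothetical and are not part of Cakewalk's or any plugin's API. The sketch reads the CC value at each note's start (step/hold style) and does not interpolate between CC points.

```python
from bisect import bisect_right

def cc_value_at(cc_events, tick):
    """Most recent CC value (0-127) at `tick`, holding each value until the next event.

    cc_events: non-empty list of (tick, value), sorted by tick.
    Before the first event, the first event's value is used.
    """
    if not cc_events:
        raise ValueError("no CC events")
    ticks = [t for t, _ in cc_events]
    i = max(bisect_right(ticks, tick) - 1, 0)
    return cc_events[i][1]

def scale_velocities(notes, cc_events, floor=1):
    """Scale each note's velocity by the volume CC value at its start.

    notes: list of (tick, pitch, velocity). CC 127 leaves velocity unchanged.
    Results are clamped to floor..127, because a note-on with velocity 0
    is treated as a note-off by MIDI devices.
    """
    scaled = []
    for tick, pitch, vel in notes:
        factor = cc_value_at(cc_events, tick) / 127
        scaled.append((tick, pitch, max(floor, min(127, round(vel * factor)))))
    return scaled
```

A plugin doing this in real time would apply the same multiply to each incoming note, reading the current automation value instead of a stored list.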

Not many big guys like that are named Annabelle....

-

Does Melodyne do it in real time?

-

I have an ancient CD demo set of the instruments in the Ancient bundle; I think it was for GigaSampler, with partial ranges of various instruments. Since I didn't have GS, I converted them to wave files so I could test them out. I used a few bits as backing sounds in The Skaergaard Intrusion (newer, better mix) and The Tomorrow Option (older, needs serious mix work). I've probably used them in other stuff over the years too, but those are the only ones I know for sure. I set out specifically to use some of those kinds of instruments, and these were the only good samples I had.

-

I've never learned to really play anything, including my own stuff, because my brain doesn't work like that. I think the first thing I "learned" was while poking around on a cheap "toy" keyboard way back when (the 1980s). It was an accidental recreation of the main part of the "conversation" between the humans and aliens in Close Encounters of the Third Kind. The next, I think, was the basic end-theme notes of Buckaroo Banzai. Again it was an accident: I recognized the initial note pattern and kept poking until I figured out the next note, and the next. At some point I figured out the first few notes of the main Star Wars theme, but that's all.

Beyond that, I don't think there's much I could intentionally sit down and play, except variations of one "theme" I've played, mostly on piano, for a couple of decades. It's never the same twice; I just vary and improvise on a chord pattern and some notes. Oddly, it's the one piece I've never turned into a recorded "finished" song.... (I'm the only one in my family who was not given music lessons. Yet I'm the only one who actually "plays" any instrument (if you can call it that) or creates any form of music, and I have by far the widest artistic interests and outputs. My younger brother is the only other artistic one, but he never plays his guitars even though he actually knows how. He won't help me with the stuff I make, or even listen to it most of the time.)

-

I haven't done that specific effect with it yet, but I have done something similar for just one track's sound. I sliced the audio clip into very short lengths, with the slices getting shorter and shorter closer to the "stop". Then I used the pitch change (notes and cents) in Clip Properties to adjust each slice downward in pitch by ear. I don't have plugins that can do this sort of thing except in multiple steps with different ones, so it was actually easier to do it that way.
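Before adjusting by ear, you can plan the slice lengths and pitch offsets numerically. The Python sketch below is one hypothetical way to do that. It makes two arbitrary choices: slices shrink geometrically, and the pitch drop grows linearly. It relies on two standard relations: a shift of n semitones is a frequency ratio of 2^(n/12), and a semitone is 100 cents.

```python
def tape_stop_plan(total_ms, n_slices, shrink=0.7, max_drop_semitones=24.0):
    """Plan slices that get shorter and drop further in pitch toward the "stop".

    Returns (start_ms, length_ms, semitones, cents, freq_ratio) per slice.
    Slice lengths form a geometric series (each `shrink` times the previous)
    scaled to fill total_ms. The pitch drop grows linearly from 0 on the
    first slice to -max_drop_semitones on the last.
    """
    if n_slices < 1:
        raise ValueError("need at least one slice")
    weights = [shrink ** i for i in range(n_slices)]
    scale = total_ms / sum(weights)
    plan = []
    start = 0.0
    for i, w in enumerate(weights):
        length = w * scale
        drop = -max_drop_semitones * i / (n_slices - 1) if n_slices > 1 else 0.0
        total_cents = round(drop * 100)
        semis = int(total_cents / 100)       # whole semitones, truncated toward 0
        cents = total_cents - semis * 100    # leftover cents, same sign as drop
        ratio = 2 ** (drop / 12)             # frequency (and playback-speed) ratio
        plan.append((start, length, semis, cents, ratio))
        start += length
    return plan
```

The semitone and cents columns are the kind of values you would enter per slice, and the ratio shows how much slower each slice effectively plays.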

-

You'd need to post a higher-resolution image of that symbol for anyone to translate it. Most likely it is the driver name as reported to SONAR. That may not be the name you see in Windows, though the two should be the same.

-

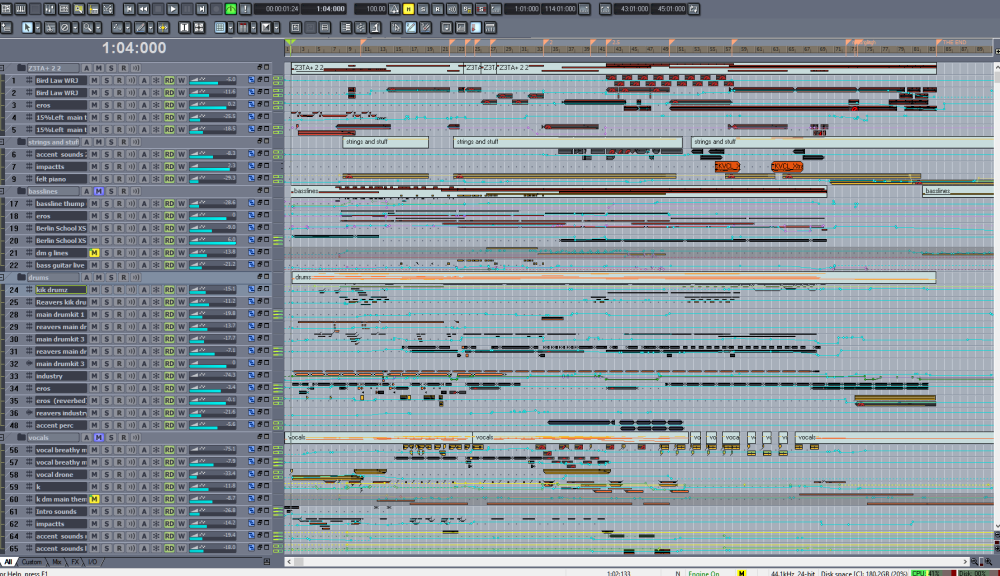

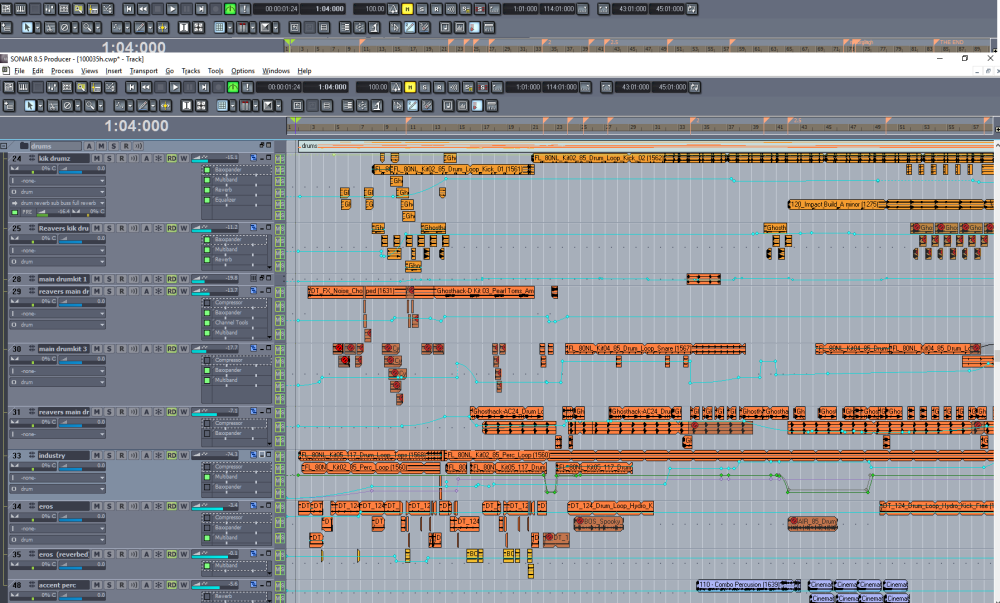

If you mean the "booms" right at the beginning and the deep vocalizations under the other ones, those are modified mostly from Ghosthack sounds. They come from the Ultimate Cinematic Bundles and the Origin bundle. I split the booms into two or three "notes" and pitched them up or down relative to each other to get that effect. A couple of them are layered for "chords", but most are single. I just used the ALT+/- function to change the pitches, nothing fancy, then crossfaded the parts.

Well, I hardly ever get the chance to discuss things technically. "Likes" and other emoticons or reactions, unlike technical discussion, don't tell me what I did right; they only tell me that some part of it must be right. When I get feedback about what I did wrong, I can at least modify things to eliminate those problems. I manage to generate very little discussion about any of this stuff, and it gets very few listens. So it's "easier" to fix the reported problems and then go from there. If there's something I really like, want, or need that nobody else likes, well, I just keep those bits anyway.

Well, I am still learning which sounds I have; honestly, I probably haven't even heard half of them yet. :lol: I tend to experiment a lot and try different sounds just to see (hear) what they're like. Sometimes I'll even change the direction I was going with something because it's "better" with the new sound.

Previously I mostly used SessionDrummer with various kits. Some were modified from the ones it comes with. Others I "built" by replacing those sounds with wave files cut out of various orchestral percussion samples. Sometimes I'd play notes on a Yamaha tabletop drum pad, sometimes I'd just draw them in, and sometimes I'd use modified MIDI patterns from various places.
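If you want to try the split-and-crossfade idea outside the DAW, here is a minimal Python sketch of a generic equal-power crossfade. It is not how SONAR implements its crossfades. It assumes both clips are plain lists of float samples at the same sample rate.

```python
import math

def equal_power_crossfade(a, b, fade_len):
    """Join clip a into clip b, overlapping a's last fade_len samples with b's first.

    The cos/sin gain curves keep gain_a**2 + gain_b**2 == 1 through the fade.
    That keeps loudness roughly steady for uncorrelated material, but
    identical material will bump up by as much as about 3 dB mid-fade.
    """
    if fade_len > min(len(a), len(b)):
        raise ValueError("fade is longer than one of the clips")
    out = list(a[:len(a) - fade_len])
    for i in range(fade_len):
        x = (i + 0.5) / fade_len           # position inside the fade, 0..1
        g_a = math.cos(x * math.pi / 2)    # fades a out
        g_b = math.sin(x * math.pi / 2)    # fades b in
        out.append(a[len(a) - fade_len + i] * g_a + b[i] * g_b)
    out.extend(b[fade_len:])
    return out
```

Each pitched "note" of a split boom would be one clip, joined to the next with a short overlap.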
At some point I'll probably integrate these approaches, but I'm still learning how to do it with the wave files. Honestly, it takes much less time than MIDI to get the final sound I'm after.

I'm sure the DAW could handle it....unless it's *really* ancient. My Win10 DAW laptop is more than a decade old, uses some old i5 CPU I think, and still has a spinning hard disk inside. It easily handles these projects: it sits at about 25% CPU most of the time, and the disk loading doesn't seem noticeable. At most I might be using a quarter of the 16GB of RAM (about 4GB) for projects like these. (There are also a bunch of hidden empty tracks that still have active FX in their bins; they're part of the template but not used in this project.) When I use a dozen synths (mostly z3ta2, SessionDrummer, TruePiano, Dimension, Rapture, a few SFZs, etc.), the CPU and RAM load is a lot heavier, but it still handles those too. I probably have at least two FX in every track bin, and some have several. Each bus probably has two or three: lots of multiband compressors and EQs (both Sonitus), delays, reverbs, etc.

The brain part of it, well, it's kind of like a jigsaw puzzle, or Tetris, or something, maybe. I'm not sure, since I don't really play games (no time). It isn't too tough going clip by clip. I often start with a simple rollout of a loop to get something to work with, then cut out all the bits that don't fit as I go along. Eventually it looks like what you see. Sometimes it's even more chopped up, if I don't end up with any looped bits left, just the sounds from them. The same goes for non-percussion parts if I start with loops for those, but that can be more complex. I have to chop them up into individual notes and pitch-shift them to the notes I want if they aren't already there. With vocalizations and such, I may have to slice up many teensy bits to create pitch bends, blurred together with reverb and delay. (The same goes for electric guitar parts, but those are blurred by the amp/signal chain.)
Sure... I hope that someday doing so will generate a discussion of techniques, and I'll learn stuff too. OHBB is the Old Home Bulletin Board, dedicated to discussing all the facets of a show called Haibane Renmei. ES is Endless Sphere, generally an electric vehicle forum. It mostly discusses electric bicycles, scooters, and motorcycles, with a few watercraft, aircraft, and cars or other large EVs. I've been part of those forums for a long time, since back when Cakewalk discussions happened via newsgroups (NNTP) rather than forums.

-

Crows... always remind me of Haibane Renmei, which has a different subject but is a very good short show. In it, sometimes the crows are annoying, sometimes they appear to be trying to save someone, and sometimes they appear to be doing the opposite (open to interpretation). There's an entire forum dedicated to discussing all the facets of the show....

-

VST Plugins That Have General MIDI 2 Sound Sets?

Amberwolf replied to Annabelle's topic in Cakewalk by BandLab

I'd guess bad caps or regulation, and would check for the same things I suggested for Byron's. It's possible that something else failed that is less repairable, but it's worth checking the power first. If you're willing to poke at it, then, as I suggested for Byron, you can make a thread and let me know. I can walk you through checking things.

VST Plugins That Have General MIDI 2 Sound Sets?

Amberwolf replied to Annabelle's topic in Cakewalk by BandLab

It's possible, but not likely. Most likely only the regulator itself would have been destroyed. If it has multiple parallel regulators (like one for 12V, one for 5V, etc.), those are cheap and easy to replace. It might have a custom-designed SMPS, which would be harder to repair. But as long as its output voltages are known, it could be bypassed and a separate regulation system installed cheaply enough. So, if you still have it, it is probably repairable. You can check it for a 7805 (5V), a 7812 (12V), etc., or an LM317, whose output depends on the design around it. All are fingernail-sized 3-pin regulators with a big silver tab that's usually bolted to a heatsink. The part number is marked on the front of the black plastic.

Unfortunately, while there *are* standards, it is up to individual engineers to follow them. Even within a single company, not every device follows the same "standards". It has "always" been that way in any stuff I've seen from any decade. Some devices using the "standard barrel" plug don't even use it for DC. IIRC my ancient Gadget Labs Wave8*24 uses an 18V AC wallwart, and all the DC regulation is inside the unit (or maybe that was the MOTU Midi Mixer 7s?). Meanwhile, the exact same "DC barrel" plug is used on my Yamaha stuff for 12VDC on one, 10VDC on another, and 9VDC on another. I don't even remember all the others....
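Two resistors set the LM317's output, which is why it "depends on the design around it". The Python sketch below uses the commonly published datasheet relation with typical values: Vref of about 1.25V and ADJ current of about 50µA. Check the datasheet for the actual part you find. The sketch also shows why these regulators sit on heatsinks: a linear regulator turns the voltage difference into heat. The helper names are hypothetical.

```python
V_REF = 1.25    # typical LM317 reference voltage between OUT and ADJ, volts
I_ADJ = 50e-6   # typical ADJ pin current, amps

def lm317_vout(r1_ohms, r2_ohms):
    """Approximate LM317 output: Vout = Vref * (1 + R2/R1) + Iadj * R2.

    R1 goes from OUT to ADJ, R2 from ADJ to ground.
    """
    return V_REF * (1 + r2_ohms / r1_ohms) + I_ADJ * r2_ohms

def fixed_78xx_volts(part):
    """78xx fixed regulators: the two digits after "78" give the output voltage."""
    i = part.find("78")
    if i < 0:
        raise ValueError("not a 78xx part number")
    return int(part[i + 2:i + 4])

def linear_reg_dissipation(v_in, v_out, i_load):
    """Heat (watts) a linear regulator such as a 78xx or LM317 must shed: (Vin - Vout) * I."""
    return (v_in - v_out) * i_load
```

For example, a 7805 fed 12V and supplying 0.5A has to dissipate 3.5W, which is plenty to need that heatsink.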

VST Plugins That Have General MIDI 2 Sound Sets?

Amberwolf replied to Annabelle's topic in Cakewalk by BandLab

Is the external power supply just a standard AC cord to the wall? Or is it a "wallwart" or other external brick that converts wall power to low-voltage AC or DC?

If the latter, it could be just that wallwart or brick. You might see a voltage, but it might not be the right one (too high or too low). Or it might not be filtered enough and have too much AC ripple left. That applies to the heavier transformer-type supplies; it's not really the same kind of issue in switching/SMPS types, which don't have the big heavy transformer in them. This latter type of failure is usually bad capacitors. It can easily be fixed if the problem hasn't blown up the regulators or other parts. Very often bad caps are visibly faulty: swelling or doming of the tops, actual ruptures along the score marks at the top, or a blown-out base with the rubber plug no longer in the can, etc. (badcaps.net and other places have good images.)

If the former, then any problem would be internal, but the same problems tend to happen. If you're willing to open it up and poke around, you can look for the caps and see if that's the issue. It's also possible that an internal fuse has blown. If you're not sure what to look for, you can start a thread for it, let me know it's there, and post pics of what you see. I can guide you on what to check.

I've had both of those problems with my Ensoniq ASR88's internal PSU over the years: the fuse blew because the caps failed and caused excessive currents. Thankfully their regulator design isolated the input from the electronics, so it didn't blow anything up outside the PSU. (I've also seen many, many variations of the same problems in many, many other devices over the decades.)
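The Python sketch below estimates peak-to-peak ripple on the capacitor-input filter of a transformer-type supply. It shows why dried-out filter caps leave "too much AC". It uses the usual approximation V ≈ I / (f × C), where f is how often the cap gets recharged. It ignores ESR and regulator behavior, and the example values are made up.

```python
def ripple_pp(i_load_a, cap_f, mains_hz=60.0, full_wave=True):
    """Approximate peak-to-peak ripple (volts) on a capacitor-input filter.

    Between recharge peaks the cap discharges at roughly i_load. Peaks
    come at twice mains frequency for a full-wave (bridge) rectifier and
    once per cycle for half-wave.
    """
    recharge_hz = 2 * mains_hz if full_wave else mains_hz
    return i_load_a / (recharge_hz * cap_f)
```

With the same 0.5A load, a 2200µF cap that has dried out to a quarter of its value gives about four times the ripple. That can be enough to drop below what the regulator needs at the bottom of each dip.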

VST Plugins That Have General MIDI 2 Sound Sets?

Amberwolf replied to Annabelle's topic in Cakewalk by BandLab

What failed (or what were the symptoms)? The most common problems I run across in old electronics are power supply issues. Bad capacitors (which all have a limited lifespan) lead to regulator failures.... So... it might be fixable.

AI and the future state of music creation

Amberwolf replied to Mr. Torture's topic in The Coffee House

It might end up more like "The future will have those who are used by AI and....that's it."

Instead of updating, I recommend rolling back to the last driver version that worked for you. You can do this from Device Manager.

-

Thanks for listening! So could I, which is why I hoped others might. I didn't originally set out to make this the end titles. I was just doing what I often do: play with sounds, and then see where they take me. At some point I felt what it was becoming, and then guided it further in that direction.

I have one other song that felt like an end-titles track, called Patterns of Behavior. It doesn't have anything else to be a part of, and I don't recall ever thinking about making anything else for it. (Maybe I will now that I'm doing the Drywater, Mars stuff, once I have more of a clue what I'm doing.) PoB was just an exploration of some sounds, and of layering guitar parts together by playing them one string at a time. I can't actually play a guitar, so I lay it on my lap and hold down a string at various frets to make the notes I want to hear. Then I record the next string, slice it all up, and layer the parts together to get the kinds of things you hear in PoB. So they probably don't sound like a real guitar being played, even though they are.

Do you mean the bass in the last section? Or the earlier sections? (Or all of it?) I have been working on a "template", with bussing and FX chains, to get a sound I like that gets the least negative feedback from listeners. 😊 So I have many tracks that feed one of two different bass busses. Those feed a percussion/bass bus that mixes all that stuff together with a single Sonitus multiband compressor on it. There are sends to a subbass track, which goes straight to the master. There is also a send to a BassHighs bus that passes only the upper two bands of the MBC (the other bands are muted). That bus is for synths that sound terrible, or lose the character of the bassline, if you take all of that out. Bass1 is just the low-end bass, dry. Bass2 includes a reverb.
Some synths get sends to one of these, and some only go to one of the other busses. Some synths have internal FX that already do what I want within the patch. Those are left alone and fed to a "dry" bus that just does multiband compression/limiting to mix them all together. I also use this bus for some of the samples/"loops" I hack into bits that already have the FX baked in and don't need more. Percussion is treated similarly, and there is a separate PercSub bus that feeds the subbass bus too. In this one, the bass is almost entirely clips (wave "loops") that are then processed through the busses above.

In this one, the drums aren't any specific kit. It's a bunch of different bits from different one-shot samples, plus some "loops" hacked up for the pieces and sounds I wanted. Some loops are used nearly whole, or at least sections of them are. I'll use sounds from any source if they give me the result I want. That includes stuff I record from random environmental encounters, like accidentally making something percussive-sounding in the kitchen, working in the yard, or building some bike project. I also often just poke through my terabyte of sound files at random to see if anything sounds like it might fit. Some small but significant percentage of the time it does, more or less. Then it just has to be trimmed, shuffled, etc. to fit whatever I'm working on at the time.

In this one, there are percussion sounds and sections from Ghosthack, FunctionLoops, BlackOctopus, KV, Cymatics, and stuff I don't even remember. Some of them I've modified extensively, usually with time stretching, compression, or pitch shifting, and sometimes with clip FX. Some of them I built for other songs and remembered the sound, so I pirated them from that song's audio folder. I don't remember whether I created those by playing Session Drummer, etc., or from some other wave file source.
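You can think of the bus layout described above as a directed graph. That makes it easy to trace every path a source takes to the master and to catch accidental feedback loops. The Python sketch below uses a hypothetical map loosely based on that description. The post doesn't say where the Perc/Bass, BassHighs, and dry busses go, so the sketch assumes they go to the master.

```python
# Hypothetical routing map loosely based on the description above. Each key
# lists everything it outputs or sends to; the names are illustrative only.
ROUTING = {
    "Bass synth": ["Bass1", "SubBass", "BassHighs"],
    "Bass loop clips": ["Bass2", "SubBass"],
    "Bass1": ["Perc/Bass"],       # low-end bass, dry
    "Bass2": ["Perc/Bass"],       # bass with reverb
    "Perc/Bass": ["Master"],      # assumption: destination not stated
    "SubBass": ["Master"],        # goes straight to the master
    "BassHighs": ["Master"],      # assumption: destination not stated
    "Synth with own FX": ["Dry"],
    "Dry": ["Master"],            # assumption: destination not stated
    "Percussion": ["Perc/Bass", "PercSub"],
    "PercSub": ["SubBass"],
}

def paths_to_master(graph, node, master="Master", _seen=()):
    """Return every signal path from node to master; raise on a feedback loop."""
    if node == master:
        return [[master]]
    if node in _seen:
        raise ValueError(f"feedback loop through {node}")
    paths = []
    for dest in graph.get(node, []):
        for rest in paths_to_master(graph, dest, master, _seen + (node,)):
            paths.append([node] + rest)
    return paths
```

For example, tracing "Percussion" shows it reaching the master twice: once through the Perc/Bass bus and once through PercSub and SubBass.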
I think the snares are mostly from FunctionLoops, but a few are from this year's BlackOctopus Spooky free sound sets. The main kick is from FL, I think from the Neon Waves 90s or 80s pack. At least a couple are from last year's Ghosthack Advent Calendar freebies. At the beginning there are various percussion sounds from a BOS Deep Trance pack.

I get bored with snares pretty easily. If a percussion part just keeps doing the same thing when I know how I could vary it and still have it fit, it's unlikely to stick around in the stuff I do these days. (I used to just "drum machine" along... plenty of my stuff on Soundclick and Bandcamp needs a serious overhaul.) Snares really stand out, and they quickly get on my nerves when they're repetitive where they shouldn't be. Sometimes that's a timing thing, but more often it's a sound thing. I've been told more than once that the kick and snare ought to just sit there in the song and keep time, driving the song along... but for me that's boring. I want them to change with the rest of what's happening; I want each thing to drive the changes in the others, if that makes any sense. (I didn't really know how to do this before, but I am learning.... Play with enough toys and you can begin to make your own!)

I layer all the different things together to get the rhythms I want. I cut things up, mute pieces or hits, bounce some of these to new clips, rearrange, and repeat the process in different sections. Sometimes a clip has almost what I want, but I have to mute out some of the hits. By themselves you could clearly hear those edits, but in the mix of everything it's tough or impossible to tell. I used to sit there and fade each little cut in and out. Now I only do that for cuts that click or pop; anything else is hidden in the mix and doesn't matter.
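The clicks mentioned above come from cuts that land mid-waveform, leaving a sudden jump to or from silence. The Python sketch below shows the usual fix: a few milliseconds of linear fade at each edge of the clip. It assumes the clip is a list of float samples, and the 5 ms default is an arbitrary choice.

```python
def apply_edge_fades(samples, sample_rate, fade_ms=5.0):
    """Return a copy of a clip with short linear fade-in and fade-out at its edges.

    Ramping the first and last few milliseconds from silence removes the
    step that a mid-waveform cut would otherwise leave.
    """
    # Fade length in samples, capped so the two fades never overlap.
    n = min(int(sample_rate * fade_ms / 1000), len(samples) // 2)
    out = list(samples)
    for i in range(n):
        gain = i / n           # 0 at the very edge, rising toward 1
        out[i] *= gain         # fade in
        out[-1 - i] *= gain    # fade out (mirror image)
    return out
```

Fades this short are usually inaudible as fades, which is why a few milliseconds is enough to fix a click without softening the hit.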
Sometimes a clip has the right sounds but in the wrong order. Other times there's one sound I just don't want and have to "trim" out without changing the rest. Then I have to surgically cut out that sound and replace it with bits from elsewhere in the clip, or from other similar sounds I have separately. Occasionally a loop set will also come with all the individual sounds, but not very often. Then these are all processed through my percussion sends and busses. This is just the percussion tracks; it's not as complex as, for instance, the main Drywater, Mars theme track, or Intercept. This is the whole project, minus the hidden empty tracks and the busses (there's no room for them on the screen).

-

That's me... always looking to do impossible things, because they aren't really impossible. It's just that I never have the tools or skills to do the stuff I want to do myself. I know that everything I want to do in any of my bajillion ideas / technologies / projects / arts etc. is possible, if I could find the right people to work on them all with me. (That means gathering a team of people to implement the ideas I can't do myself... it's that BNL song "If I Had a Million Dollars"....)

Oh, I have ideas, and I am by necessity very self-directed... but just because I like what I make doesn't mean it can't be better (by far, in most cases). And if it is better, maybe I could get more people to listen to it. (It doesn't do much good to make art that only I ever see, hear, or touch, although I wouldn't stop even if that were guaranteed to be the case.) I don't really have a specific thing I expect... because if I knew what it was, I wouldn't need anyone to tell me. 😊

Part of what I hope to gain is insight into how others think. The way I think is so weird compared to everyone else that it is hard to communicate. I have to use so many words that almost no one actually reads them all, and without reading and comprehending them all, people don't get my thoughts. But if I say less, readers don't know what I'm talking about and give less-than-useful or even useless feedback. I guess I'd prefer to find a community of people with generally higher standards of behavior, information, etc. I'd like to interact with them, learn from them, and be the closest thing to friends with them that is possible for me... and then get feedback from *them* on what I might be "doing wrong" so I can fix it.
I have a couple of places like that (ES, OHBB) that I've been part of for many years. But in neither place are there enough people interested enough to listen, and even fewer comment in any way. There's almost no one to discuss this kind of thing with technically, and those who could don't pop in very often. The VI-Control forums are a place specifically for the kind of thing I'd like to discuss. But after my initial thread and a few replies, I've gotten almost no interaction there on music feedback. That's probably because I'm not, and never will be, a composer of published works (movies, TV, games, etc.) like so many of the people there. So what I do just isn't good enough or interesting enough for them. Maybe I'm just that lonely old person who walks into a store to shop, stops each person, and yammers on and on about things no one else cares about, because I have so many thoughts in my head and only myself to discuss them with.....

-

I mostly mentioned my problem because it means I am not hearing your whole song, even in the parts I could listen to. So the feedback I give might not be "valid": the things I have an issue with are unlikely to be the ones others would.

-

Thank you for posting this; it is also what I wish I could get more of. I'm afraid I can't provide much helpful feedback other than that the guitar/drums set off my tinnitus too much. I couldn't get past the first few seconds, or past wherever else I skipped to. (There are a bunch of things I want to do in my own music that I can't because of this problem....) Beyond that, it sounds like the vocals are muffled and buried in the other sounds, but that could just be how the song is supposed to be. Keep in mind that my tinnitus is severe, so there are numerous vocal-range and higher frequencies I literally can't hear anymore. Maybe the parts of the vocals that stand out are simply inaudible to me because of that. (Some of mine are meant to sound that way. Most aren't, and there are a bunch I should someday go back and remix, if I didn't keep having so many new ideas I have to work out first.)

-

Thank you for listening! You're the only one so far. Odd is probably a good description of me, too, though I am poorly put together. Since it is the end titles, I am trying to tie it in to the "whole show". So some of the things in there are sonic references to other parts of the Drywater, Mars series. I would like to put more in, but have not yet figured out how or where. Some of the structure of this one comes directly from other parts of the series, as well, so that all of these tie together as if they were pieces from the same "show". Some of it is unique to the end titles themselves, sonically and structurally, so it isn't just a rehash of the others.

My only experience with all this is having watched lots of sci-fi shows, bad and good, and the "feel" of how the really good shows all have music that ties things together like this. I considered reading up on what others do for this, but decided to just dive in, try it all out, and see what comes out. That's more fun and less copying of what everyone else has already done, and this way it's "all" from my twisted, broken mind. Then I figure I can learn from listener feedback and either make new versions or simply whole new tracks. (What little feedback there has been here and on VI-Control has been helpful, if minimal. I can't seem to get anyone to actually have a discussion with me. Momentary passing comments are useful, but not as helpful as ongoing Q&A-type discussions would be.) This track and the previous one, Not Just To Breathe With You (part 5 of DM), came directly out of some of that feedback. (I also have a couple of other experiments, but neither of them has gone anywhere yet, and probably won't; they're more like sonic scratchpads.) The "skyboat" is pretty much a really rough representation of what I was trying to get. There's a better description of it in the main series thread or in my EndlessSphere studio thread; I forget which.
I'd like to make individual covers for each track that show the basic idea of each one, as the first does for the main title track. But I haven't had time to fight with the various tools to get what I want. I've been working on the music of the series almost constantly since making the first one last month. I work on it whenever I'm home, awake enough to do it, and not serving the needs of the Schmoobean. The hard part is trying to get the inspirations into the tracks while they are still in my brain and haven't leaked out somewhere else yet. Even more, I would like to make videos for each one that show at least snippets of what would be going on in each... but there just isn't enough time. I have visions in my eyes of what I want... and can barely type up what I'm imagining as a verbal storyboard...

-

Walk-Away and End Titles (Drywater, Mars Part 6) (initial version) Every show has to have cool end-title music as the heroes walk away at the end, and continue playing under the end titles, right? From the Drywater, Mars series, also collected here in one thread. https://amberwolf.bandcamp.com/track/walk-away-and-end-titles-drywater-mars-part-6

-

Hmm. Nothing in either thread.... Well, I'll post the newest track for the "show" in this thread and make a new one too, in case it helps, I guess. Walk-Away and End Titles (Drywater, Mars Part 6) (initial version) Every show has to have cool end-title music as the heroes walk away at the end, and continue playing under the end titles, right? https://amberwolf.bandcamp.com/track/walk-away-and-end-titles-drywater-mars-part-6

-

Does the DXI plugin no longer recognize it?

Amberwolf replied to happen135's topic in Instruments & Effects

That can't be completely true. I am using Windows 10 64-bit, and my ancient 32-bit Sonitus DX plugins and old DXi synths like Cyclone, Psyn, etc. all still work perfectly fine (in my ancient pre-Gibson SONAR). (I never used TTS-1, so I don't have it on here to test, but it would probably work too.)

If you can tolerate the latency, you can enable input monitoring on the track so that its input passes through the FX bin while you record. It still records the dry signal onto the track, though.
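Most of the latency in question comes from the audio driver's buffers. The Python sketch below gives a rough estimate. It assumes one input and one output buffer and ignores converter and driver offsets, so real figures will be somewhat higher.

```python
def buffer_latency_ms(buffer_samples, sample_rate_hz, n_buffers=2):
    """Rough monitoring latency in ms: each buffer adds buffer/sample_rate seconds.

    n_buffers=2 approximates one input plus one output buffer. Converters
    and driver safety offsets add more, so treat the result as a floor.
    """
    return 1000.0 * n_buffers * buffer_samples / sample_rate_hz
```

At 48 kHz, a 256-sample buffer works out to roughly 10.7 ms by this estimate, and a 64-sample buffer to roughly 2.7 ms. That is why lowering the buffer size (if the CPU can keep up) is the usual way to make input monitoring tolerable.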

-

Does the DXI plugin no longer recognize it?

Amberwolf replied to happen135's topic in Instruments & Effects

DX and DXi plugins are not detected by the plugin manager; their own installer must install them correctly. Some of them can be manually registered with Windows using regsvr32. If you do a web search on that phrase, you can probably find instructions for doing this. However, if you are using a 64-bit version of SONAR / CbB, it might not be able to use the 32-bit plugins. (I don't have either on my machine to test. A while back I had this type of problem with Splat, IIRC: the 32-bit version couldn't see 64-bit stuff, and the 64-bit version couldn't see 32-bit stuff.)
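For the regsvr32 route, it helps to know whether a given plugin DLL is 32-bit or 64-bit. On 64-bit Windows, the 64-bit regsvr32 is in System32 and the 32-bit one is in SysWOW64. Calling the SysWOW64 copy explicitly for 32-bit DLLs is the commonly recommended approach. The Python sketch below reads the Machine field from the DLL's PE header and builds the matching command line. The helper names are hypothetical. The sketch only builds the command and does not run it; registration typically needs an elevated (administrator) prompt.

```python
import ntpath
import os
import struct

# COFF "Machine" values from the PE format spec (only the two common ones).
MACHINE_TYPES = {0x014C: "32-bit (x86)", 0x8664: "64-bit (x64)"}

def dll_bitness(data: bytes) -> str:
    """Return the architecture recorded in a PE file's (DLL/EXE) header."""
    if data[:2] != b"MZ":
        raise ValueError("not a PE file (missing MZ header)")
    # The offset of the "PE\0\0" signature is stored at 0x3C (e_lfanew).
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\x00\x00":
        raise ValueError("PE signature not found")
    # The COFF header follows the signature and starts with the 2-byte Machine field.
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)
    return MACHINE_TYPES.get(machine, f"other (0x{machine:04X})")

def regsvr32_command(dll_path: str, bitness: str):
    """Build a regsvr32 command line matching the DLL's bitness on 64-bit Windows."""
    windir = os.environ.get("WINDIR", r"C:\Windows")
    folder = "SysWOW64" if bitness.startswith("32") else "System32"
    return [ntpath.join(windir, folder, "regsvr32.exe"), dll_path]
```

For example, read the plugin file's bytes with `open(path, "rb").read()` and pass them to `dll_bitness`. Then run the command from `regsvr32_command` in an administrator prompt.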