Posts: 2,178
Everything posted by Glenn Stanton

-

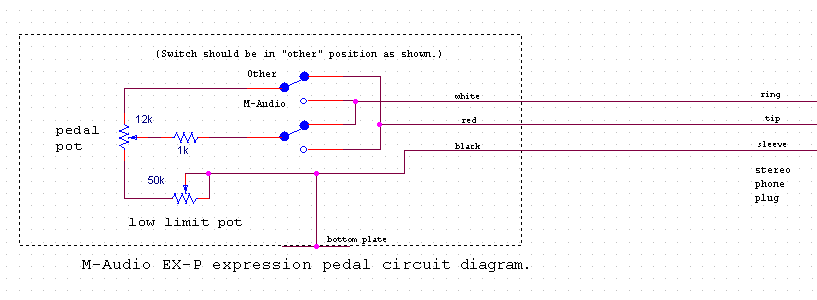

what Glenn heard: "use some electronic cleaner spray to remove the built up gunk (open the back up)" (10 min) and "if it's still failing - check the resistance settings (open the back up)" (5 min) and "if it's still failing, buy a new pedal" (24-48 hours)?

-

as John said, and perhaps make yourself a template with everything you need on it first. then use that to create the individual projects. then remove extraneous tracks and synths etc later (or never). maybe start with a bunch frozen, then as you expand your work, unfreeze it. regardless, composition and performance (except Jazz) are usually two distinct processes. so consider getting your composition mainly right first using something like Musescore etc, then implement the performances.

-

depends. for effects - create presets for each use. and if you need the same effect across all movements, then this should solve that. presumably volume and expression would be different for each movement? i mean volume and other envelopes can be copied but is that the intent? maybe save the effects (unless you're doing sound design...) until after you have the movements orchestrated. worst case, if making the master audio isn't the right way for you, then maybe just export the MIDI from each movement and construct the master from there (like you originally intended), add the connecting bits, and then apply your effects across the VI.

-

no real experience with the Focusrite but they have an excellent reputation - when connecting hardware - grounding? ground potential can cause a lot of issues.

-

generally the 1820 and extender are routed to patch panels: the XLRs go to an XLR patch bay, and the 1820 TRS are normalized for the inserts on another patch bay for interconnecting mixer, effects, etc. no issues with the front XLR.

-

Behringer ADA8000 https://www.amazon.com/BEHRINGER-ULTRAGAIN-PRO-8-DIGITAL-ADA8000/dp/B000GEPC44 connects to my UMC1820 via the optical connectors. i have a cheap optical splitter to feed my (ancient) JVC RX-7000V for 5.1.

-

you will have "movement" projects. then you export those as audio. you will have a "master" project where you align each movement into a track. blend as needed by adding some additional audio or MIDI. then export the entire thing to your stereo / multi-channel output. you're likely composing this a movement at a time, so do the same for the performance and add the blending bits where there is a need.

-

ok, so this sounds like a fix might be needed to accommodate MIDI type 0 - create only the required number of MIDI tracks and set the respective channels. and/or get the vendors to stop using channel 10 (or any other channel in a single channel type 0 other than say channel 1). although in the latter case, there may be dependencies by vendors where their products require it for proper operation due to legacy code... also sounds like an intermediate project should be used to convert the MIDI files or simply expand them into clips: rather than dragging them into the main project, maybe create a blank project, drag your clips into it, then copy & paste the desired MIDI clips into the main project.
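to see which channels a type 0 file actually uses (and so how many tracks an importer would really need), here's a rough Python sketch. it assumes you've already pulled the raw body of the single MTrk chunk out of the file, and the function names `read_vlq` and `channels_used` are just made up for this example. it says nothing about how Cakewalk itself does the import.

```python
def read_vlq(data: bytes, pos: int):
    """Read a MIDI variable-length quantity; return (value, next position)."""
    value = 0
    while True:
        byte = data[pos]
        pos += 1
        value = (value << 7) | (byte & 0x7F)
        if not byte & 0x80:  # high bit clear marks the last byte
            return value, pos


def channels_used(track: bytes) -> set:
    """Return the 1-based MIDI channels referenced in an MTrk chunk body.

    `track` is the chunk data only (without the 8-byte 'MTrk' + length header).
    """
    channels = set()
    pos = 0
    running = None  # last channel status byte, for running status
    while pos < len(track):
        _, pos = read_vlq(track, pos)  # skip the delta time
        status = track[pos]
        if status & 0x80:
            pos += 1
        elif running is not None:
            status = running  # data byte: reuse the previous status
        else:
            raise ValueError("data byte with no running status")

        if status == 0xFF:  # meta event: type byte, length, data
            pos += 1
            length, pos = read_vlq(track, pos)
            pos += length
            running = None
        elif status in (0xF0, 0xF7):  # sysex: length, data
            length, pos = read_vlq(track, pos)
            pos += length
            running = None
        elif status >= 0xF0:
            raise ValueError("unexpected system message in track data")
        else:  # channel message 0x80..0xEF
            running = status
            channels.add((status & 0x0F) + 1)
            # program change and channel pressure carry one data byte
            pos += 1 if (status & 0xF0) in (0xC0, 0xD0) else 2
    return channels
```

a drum file that only ever writes to channel 10 should come back as a single-element set, which would match the "only the required number of tracks" idea.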

-

Groove clip looping not working ( for me!)

Glenn Stanton replied to RICHARD HUTCHINS's topic in Cakewalk by BandLab

actually they're about 4 billion transistors. it's a miracle they ever work...

-

FR: Absolute Time Offset for MIDI Tracks (by ms)

Glenn Stanton replied to apt's topic in Feedback Loop

and by renaming a project template (CWT) (or even the project CWP) as .CWX, you can load it... but i don't see any MIDI (or any audio either) coming through when importing it as a track template. maybe i'm missing a step in the process, but it seems like the MIDI (and audio) are stripped out during the track template loading process.

-

thanks. i get the same behaviour - the kick produces 8 empty tracks + 1 with the notes, and definitely has the type 0 flag. the hats produce the expected number of separate tracks and are type 1. the kick MIDI only has the 1 track marker, and i'm not seeing in a hex editor what is causing the additional tracks. then again i'm not a MIDI format expert by any means... MIDI files from DRUMTRAX do the same thing as the Goran files, whereas Groove Monkee MIDI imports as expected: type 0 single track, type 1 however many tracks. @msmcleod any thoughts on why some MIDI files are causing the extra track inserts? COU_192 is DRUMTRAX (generates extra tracks) COU_192.MID
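for anyone who'd rather not squint at a hex editor, a small Python sketch like this can read the type flag and the declared track count from the header, then count the actual track markers (MTrk chunks). it assumes the standard MThd layout (4-byte id, 4-byte length, then format, track count and division as big-endian 16-bit values); the function names are made up for the example.

```python
import struct


def read_smf_header(data: bytes) -> dict:
    """Parse the MThd header chunk of a Standard MIDI File."""
    if len(data) < 14 or data[:4] != b"MThd":
        raise ValueError("not a Standard MIDI File (missing MThd chunk)")
    length, fmt, ntrks, division = struct.unpack(">IHHH", data[4:14])
    if length < 6:
        raise ValueError("MThd chunk is too short")
    return {"format": fmt, "tracks": ntrks, "division": division}


def count_track_chunks(data: bytes) -> int:
    """Walk the chunk list and count the MTrk chunks actually present."""
    header_len = struct.unpack(">I", data[4:8])[0]
    pos = 8 + header_len  # first chunk after the header
    count = 0
    while pos + 8 <= len(data):
        chunk_id = data[pos:pos + 4]
        chunk_len = struct.unpack(">I", data[pos + 4:pos + 8])[0]
        if chunk_id == b"MTrk":
            count += 1
        pos += 8 + chunk_len  # skip chunk header and body
    return count
```

usage would be something like `read_smf_header(open("COU_192.MID", "rb").read())`. if the header says format 0 with 1 track and only one MTrk chunk is found, the extra tracks on import are more likely coming from the events inside the track than from the file structure.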

-

@John Vere did a whole video on inexpensive IO units

-

Groove clip looping not working ( for me!)

Glenn Stanton replied to RICHARD HUTCHINS's topic in Cakewalk by BandLab

typically the audio is going to be the variable tempo, unless someone really went out of their way to humanize any MIDI sequences. you may be entering the world of "audio snap", or the world of "remix" where you convert the instrument audio to MIDI, quantize it etc and use virtual instruments to recreate it, then tweak the vocal and solo parts over that...

-

Sonar 8.5 Producer: Is VST3 Supported?

Glenn Stanton replied to Annabelle's topic in Cakewalk by BandLab

@msmcleod could a VST3 preset be used with a VST2 plugin? perhaps if the controls for each are effectively the same, but i'm not sure if it's using an internal id for a given control or just the control name.

-

the challenge is the "takes" in physical form are not in parallel. they are part of the long WAV file, and takes are simply time marked sections of that file which are then shown to you in parallel. so trying to tweak the takes "in time" is generally not easily done - either through programming or physically altering the file -- which is the option Max shared. the other option is not to use take lanes and record each take to a separate track. if using this option, you can end up with a lot of tracks - so i like to export the instrumental part (where the arrangement is mainly settled) and then use that in a separate project for vocals only (i may do the same for tactic soloing as well). then once you've comped things, export the tracks and insert them into your main project.

-

no worries. just keep in mind that once you step beyond the first few recordings and want higher quality, or an acoustic mic etc, there are many inexpensive IO units which will give you audio + MIDI etc, greatly expanding your options.

-

How do I get those fancy Start Screen pictures for my own templates?

Glenn Stanton replied to Starship Krupa's question in Q&A

after adding the image, save the file as a CWP (normal), then go to the folder (i have one called "templates") and make a copy of it. then rename it "mytemplate".cwt (where "mytemplate" is whatever you want to call it - use 00, 01, 02 in front of the template name to make them sort to the top -- e.g. "00 record template" "01 mix template" "02 master template" etc)

so:

1. create or edit the new or existing template.CWP file
2. insert the image into the notes section of a NORMAL project
3. save as CWP
4. copy & paste the CWP (so you can go back and edit it again later without starting over)
5. rename the copied CWP to the template name (usually remove the word Copy) and extension of CWT
6. copy the CWT into the \common\project templates and the \core\project template folder

if you need to revise the template, then follow steps 1-5 and ignore step 2 (you already did)

-
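if you revise templates often, the copy-and-rename part can be scripted. this is only a sketch in Python: `publish_template` is a made-up helper, and the template folders are passed in as arguments because the full paths depend on your install. it just copies the file under a new name and extension, leaving the original CWP in place for later edits.

```python
import shutil
from pathlib import Path


def publish_template(project, template_name, template_dirs):
    """Copy a saved .cwp project to <template_name>.cwt in each folder.

    project       -- path to the saved CWP file (kept as-is for later edits)
    template_name -- e.g. "00 record template" (hypothetical example name)
    template_dirs -- the project template folders for your install
    """
    project = Path(project)
    if project.suffix.lower() != ".cwp":
        raise ValueError("expected a .cwp project file")
    written = []
    for folder in template_dirs:
        folder = Path(folder)
        folder.mkdir(parents=True, exist_ok=True)
        target = folder / f"{template_name}.cwt"
        shutil.copy2(project, target)  # copy, so the CWP stays editable
        written.append(target)
    return written
```

the 00/01/02 prefix trick still applies: whatever you pass as `template_name` is what the file gets called.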

Sonar - strange happenings...

Glenn Stanton replied to Salvatore Sorice's topic in Cakewalk by BandLab

check for the Windows and OS related updates as well. the supposedly backward compatible redistributables can be the cause. like today for me - new KB updates on the OS - so a quick run of the redistributables and a VST rescan to be sure. everything seems to be running normally. i just get into the habit of doing this to avoid finding out i have issues when i'm working on a live project...

-

maybe your device has died? maybe you need to get the latest drivers from Presonus and reinstall it? maybe there are some troubleshooting steps on the Presonus site? if it's not showing up in the Windows device manager, then you need to fix that first. https://www.fmicassets.com/Damroot/Original/10001/OM_2777700106_AudioBox-Go_EN.pdf https://www.presonus.com/en-US/interfaces/usb-audio-interfaces/audiobox-series/2777700106.html