Everything posted by Lord Tim

-

I do not want Melodyne, but CbB keeps wanting to download it.

Lord Tim replied to Philip Jones's topic in Cakewalk by BandLab

That's a good point too - often I'll get a vocalist to go out and sing the part, dramatically over-tune it so it's completely locked in, and then get them to sing along with it as a guide. More often than not I won't even need to touch the proper vocal - having the tuned guide there is enough of a nudge to rein in any rogue pitchiness.

-

For MIDI clips, you'd add a Velocity envelope to them, since volume, modulation, etc. are all track-based. For audio clips, you can add a clip gain envelope. Have a look near where you see the track name; there's a dropdown that says Clips. Set that to whatever automation you want to add, then set it back to Clips when you're done. There are mouse/keyboard modifiers to do this without using the Edit Filter, but that's the first thing to look at and get used to - you'll find most envelope things are based around the Edit Filter dropdown.

-

I do not want Melodyne, but CbB keeps wanting to download it.

Lord Tim replied to Philip Jones's topic in Cakewalk by BandLab

^^ yeah, this is something I appreciate pitch correction for - it lets me get better performances out of the singer. Instead of worrying about absolutely nailing the pitch, the singer can focus on nailing an incredible performance. I can fix a slightly flat note, but I can't fix a less-than-stellar delivery, and if you're having to do 20 takes to get the pitching right, you might be throwing out some gold to get there. Nobody hears how many takes you've done, they only hear the final performance you've kept. In a perfect world you wouldn't need to use this stuff - throw Celine Dion or John Farnham out in front of a mic and never think about vocal tuning again - but for the rest of us, it's just another tool, like a compressor to even out dynamics, to get the best possible final take out of an artist.

-

The way I could see it working is that we add another option to the envelope curve types. Currently, we have Jump, Linear, Fast Curve and Slow Curve. I'd say in that menu, add Bezier as another option, which then adds a handle in the envelope segment between the 2 outside nodes, similar to my middle node idea, and you'll be able to move it around and the curve type on either side changes dynamically to whatever is needed. Other than this new "phantom node" we're using as a curve handle in my example, this doesn't sound like a crazy amount of work** to add in morphing between each envelope curve type. Not sure if this is the best solution out there, but it's the closest I could think of to what we have at the moment, while adding this to it.

** famous last words by someone who isn't on the dev team. HAHA! Apologies to any Baker reading this!
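To make the "phantom node" idea a bit more concrete, here's a rough sketch of a quadratic Bezier segment between two envelope nodes, where the handle bends the curve without the curve having to pass through it. This is only an illustration of the request, not how Cakewalk draws envelopes; the function name and the (time, level) node representation are hypothetical.

```python
from typing import List, Tuple

# A node is (time, level): time in any unit (e.g. beats), level from 0.0 to 1.0.
Point = Tuple[float, float]


def bezier_segment(start: Point, handle: Point, end: Point, steps: int = 8) -> List[Point]:
    """Sample a quadratic Bezier curve between two envelope nodes.

    `handle` is the hypothetical "phantom node": the curve starts exactly at
    `start`, ends exactly at `end`, and bends towards `handle` in between.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    points: List[Point] = []
    for i in range(steps + 1):
        t = i / steps
        # Quadratic Bezier weights: (1-t)^2, 2(1-t)t, t^2 (they always sum to 1).
        w0, w1, w2 = (1 - t) ** 2, 2 * (1 - t) * t, t ** 2
        time = w0 * start[0] + w1 * handle[0] + w2 * end[0]
        level = w0 * start[1] + w1 * handle[1] + w2 * end[1]
        points.append((time, level))
    return points


# Fade from 100% at beat 0 to 0% at beat 4.
straight = bezier_segment((0.0, 1.0), (2.0, 0.5), (4.0, 0.0), steps=4)  # handle on the line: linear fade
held = bezier_segment((0.0, 1.0), (2.0, 1.0), (4.0, 0.0), steps=4)      # handle high: holds, then drops late
# held[2] == (2.0, 0.75), versus straight[2] == (2.0, 0.5)
```

Dragging the handle up or down morphs the segment smoothly between a slow-start and a fast-start shape, which is the "curve type on either side changes dynamically" behaviour described above. A single quadratic handle can't make a full S-curve on its own; that would need two handles (a cubic Bezier) or a middle node like the workaround in the next post.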

-

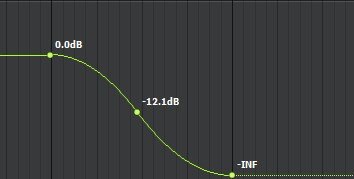

No, this isn't available and is a good request. At least on envelopes, this is kind of doable with a bit of trickery, until it's implemented. You'd do it like this: 1. Add an envelope to a track or clip. 2. Set a node at the start of when you want a fade, and one at the end. 3. Adjust the node positions as necessary (eg: the start node being 100%, the end node being 0% so you're doing a fade to silence). Make sure the envelope shape between each node is linear. 4. In the middle point of that envelope between the start and end nodes, add one more node. 5. Right click on the part between the start and middle node and change it to Slow Curve, then right click on the part between the middle and end nodes and change it to Fast Curve. You'll end up with something that looks like this: And you should be able to drag the middle node to the left or right along the envelope line to somewhat change the way the S-curve looks. You may need to alter what part is Slow or Fast on each side of the envelope to make it work, though. A little clunky, but it works if you don't fiddle with it too much after you set it up, and works great for song fade-outs especially. As yet, there's no way to do that with clip fades using the fade tool, so I'd do a Clip Envelope for those instead of using the fade handles. But yes, I'd love a proper native solution.
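Here's a rough numeric sketch of why the three-node trick gives an S-curve: a segment that changes slowly at first, followed by one that changes quickly at first, joins into a shape that eases out of the start level and eases into the end level. Cakewalk's actual Slow Curve and Fast Curve formulas aren't documented here, so the quadratic `ease_in`/`ease_out` functions are assumptions standing in for them, and the function names are hypothetical.

```python
def ease_in(u: float) -> float:
    """Assumed stand-in for 'Slow Curve': changes little at first, then speeds up."""
    return u * u


def ease_out(u: float) -> float:
    """Assumed stand-in for 'Fast Curve': changes quickly at first, then levels off."""
    return 1.0 - (1.0 - u) * (1.0 - u)


def s_curve_fade(t: float, start_t: float, mid_t: float, end_t: float,
                 start_level: float = 1.0, end_level: float = 0.0) -> float:
    """Envelope level at time t for the three-node S-curve trick."""
    if not start_t < mid_t < end_t:
        raise ValueError("nodes must satisfy start_t < mid_t < end_t")
    # The middle node is dragged *along* the original straight line, so its
    # level is whatever the linear fade would have been at mid_t.
    mid_level = start_level + (end_level - start_level) * (mid_t - start_t) / (end_t - start_t)
    if t <= start_t:
        return start_level
    if t >= end_t:
        return end_level
    if t <= mid_t:
        # Start-to-middle segment: the 'slow' curve.
        u = (t - start_t) / (mid_t - start_t)
        return start_level + (mid_level - start_level) * ease_in(u)
    # Middle-to-end segment: the 'fast' curve.
    u = (t - mid_t) / (end_t - mid_t)
    return mid_level + (end_level - mid_level) * ease_out(u)


# A 10-second fade-out with the middle node in the centre.
levels = [round(s_curve_fade(t, 0.0, 5.0, 10.0), 3) for t in (0.0, 2.5, 5.0, 7.5, 10.0)]
```

With the middle node centred, `levels` comes out as `[1.0, 0.875, 0.5, 0.125, 0.0]`, staying above the straight line early and below it late, which is the S shape. Moving `mid_t` left or right reshapes the curve, in the same way as dragging the middle node along the line.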

-

Midi Effects not working for certain instruments

Lord Tim replied to Michael Kleiner's topic in Instruments & Effects

Yeah, agreed! Looks like we were actually on the same page with this all along.

-

Have a look at the track header (where the volume, pan, record, mute, solo, etc. controls are) - you'll see the Edit Filter dropdown menu. It'll say Audio Transients if it's showing AudioSnap clips; change it back to Clips. If you've moved some transients around on these clips, you'll need to bounce them to new clips, but if it's just altering what you're seeing, then changing the Edit Filter from Audio Transients back to Clips will do the trick.

-

All drivers are there, but Sonar won't record...

Lord Tim replied to berdnyk's topic in Cakewalk by BandLab

Absolutely do not use ASIO4ALL. In fact, if it's installed on your computer, it's a good idea to uninstall it entirely, because it can interfere with your correct drivers. You need to have the manufacturer's ASIO drivers installed for your MOTU, have ASIO selected as your Driver Mode in Preferences > Audio > Playback and Recording, and have only the MOTU ASIO inputs and outputs checked in Preferences > Audio > Devices (Input Drivers / Output Drivers). If this is all correct and it still doesn't play, it's likely something else on your system has taken control of the driver, or is using a different sample rate that's conflicting with Cakewalk's. But let's verify that this is all correct before you do anything else; it'll help us give you better suggestions to sort out your problem.

-

It looks like you're not using the Focusrite ASIO drivers, and you're using WASAPI or WDM instead. With Cakewalk closed, make sure you have the ASIO drivers installed first (available from Focusrite's website), then restart. Then, once Cakewalk is open, go to Preferences > Audio > Playback and Recording, and choose ASIO as the Driver Mode. Press Apply. This should automatically change the related settings in Preferences, but to check, go to Audio > Driver Settings and make sure it lists Focusrite USB ASIO in the Playback and Recording Timing Master dropdowns. If, after all of that, you're still getting an error, something else on your system has taken control of your driver and is throwing the error. Chrome is a big culprit here, as are OBS and other recording packages.

-

I think the "cool factor" is going to come more from marketing than from production, honestly. If [insert current popular music artist star] starts playing 1920s flapper music, you'd better believe that every TikTok will have that as background music for the next year. If you're chasing a trend, you're likely already 6 months behind it, and the kids already think you're as boring as last month's overused meme. This is primarily why I say "do what you think sounds good and be honest to yourself" - tailoring for a market isn't necessarily a bad thing at all; if you love what you're making, you'd kind of be doing that anyway, right? But artists live and die by their PR these days. The music is, sadly, background jingles for their merchandise business.

-

Midi Effects not working for certain instruments

Lord Tim replied to Michael Kleiner's topic in Instruments & Effects

Yeah, weird! This is what I'm seeing/hearing: exactly the same MIDI data notes-wise, using BBCSO Core, Violins 1, on the Legato articulation. The only difference is that in the first clip all of the note velocities are set to 127, and in the second one I'm playing MUCH softer. You can definitely hear how the notes slur more in the second example. There's no loudness change - you'd use expression or volume for that - but velocity is definitely doing something for me. I didn't try the Long articulation, but I'd guess that a lot of instruments and articulations just kind of ignore velocity unless there's a reason to use it, like the Legato articulation does. From memory, some of the woodwind Legato ones work similarly, but strings are where I remember seeing it most, without getting in and doing some proper tests.

COMPLETELY UNRELATED OBSERVATION: Have any of you guys noticed the legato violins are slightly flat on some notes, especially around the 4th octave? We're tuned to Eb as a band, so we tend to write in E (flat) a lot, and Eb4 is awful. Argh. It's driving me crazy!

-

Midi Effects not working for certain instruments

Lord Tim replied to Michael Kleiner's topic in Instruments & Effects

OK, that's weird, that definitely works for me. I wonder what we have set up differently?

-

Midi Effects not working for certain instruments

Lord Tim replied to Michael Kleiner's topic in Instruments & Effects

@abacab When you say "doesn't respond", do you mean what we were saying, that it won't work like a continuous controller, or do you mean it has no effect at all? In BBCSO Core, try out Violins 1 Legato with both a heavy touch and a light touch, and you should get very different sounds playing them in a legato style. They won't *change* velocity if you draw in velocity automation over the duration of the note, of course, since it doesn't work like that, but it should be doing something for the initial note if you play it louder or quieter.
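The "it won't work like a continuous controller" point comes straight from how MIDI 1.0 messages are built: velocity is a data byte inside the single Note On message, while Expression (CC11) and Volume (CC7) are separate Control Change messages that can be sent any number of times while the note is held. The status bytes and controller numbers below are standard MIDI; the helper functions are hypothetical, nothing here is specific to BBCSO or Cakewalk, and timing between messages is left out for brevity.

```python
from typing import List

NOTE_OFF = 0x80
NOTE_ON = 0x90
CONTROL_CHANGE = 0xB0
CC_VOLUME = 7       # CC7: Channel Volume
CC_EXPRESSION = 11  # CC11: Expression


def _check(channel: int, *data: int) -> None:
    """Reject values that don't fit in a MIDI channel nibble or 7-bit data byte."""
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0-15")
    if any(not 0 <= d <= 127 for d in data):
        raise ValueError("MIDI data bytes must be 0-127")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    _check(channel, note, velocity)
    return bytes([NOTE_ON | channel, note, velocity])


def note_off(channel: int, note: int) -> bytes:
    _check(channel, note)
    return bytes([NOTE_OFF | channel, note, 0])


def control_change(channel: int, controller: int, value: int) -> bytes:
    _check(channel, controller, value)
    return bytes([CONTROL_CHANGE | channel, controller, value])


def held_note_with_swell(channel: int, note: int, velocity: int,
                         expression_values: List[int]) -> List[bytes]:
    """One held note: velocity is sent once, expression can change throughout."""
    messages = [note_on(channel, note, velocity)]  # the only place velocity appears
    messages += [control_change(channel, CC_EXPRESSION, v) for v in expression_values]
    messages.append(note_off(channel, note))
    return messages


# Note 63 is Eb4 if middle C (60) is named C4; some hosts label octaves differently.
soft_attack_with_swell = held_note_with_swell(0, 63, 40, [30, 60, 90, 60])
```

So drawing a velocity ramp across a held note has nothing to act on after the attack, while an expression or volume envelope does, which is why those are the usual choice for dynamics over the length of a note.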

-

It can be done, but you definitely have to make sure you have a decent quality audio interface with rock-solid ASIO drivers (especially if you're running this on an i3), because if you're planning to run live reverbs and that kind of thing in real time at very low latency, any weak part of the chain can make this fall over. What audio interface are you using?

-

Midi Effects not working for certain instruments

Lord Tim replied to Michael Kleiner's topic in Instruments & Effects

Velocity works with BBC SO Core from memory (I'm not in front of the machine at the moment), but personally I'd tend to automate expression and volume on the MIDI track rather than velocity for dynamics control anyway. I do know for sure that legato strings especially respond to a lighter touch, which adds slides between notes, so I'd be surprised if other instruments and other editions of BBC SO don't respect velocity.

-

Not sure what the issue is, but unless there's a very good reason, always install and run Cakewalk as a standard user (rather than with "Run as administrator"), whether your user account has standard or admin privileges, or you'll run into all kinds of weird permission problems later.

-

[Solved] Post Crash audio tracks missing wave data graphics

Lord Tim replied to sean72's topic in Cakewalk by BandLab

Find your Picture Cache (typically C:\Cakewalk Projects\Picture Cache, but you'll find its location in Preferences > Audio > Data Folders > Picture Folder) and, while Cakewalk is closed, delete all of its contents. The next time you open Cakewalk and load your project, it'll rebuild all of the waveform pictures. Note: it'll do this for all of your projects, but honestly it's pretty quick, and it's not a bad idea to give your cache a clean every now and then anyway.
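If you'd rather script the cache clean-out than do it by hand, here's a minimal sketch using only the Python standard library. It assumes the default folder mentioned above (check the Picture Folder setting in Preferences if yours is elsewhere) and that Cakewalk is closed; the function name is hypothetical, and it deletes everything inside the folder while keeping the folder itself.

```python
import shutil
from pathlib import Path

# Assumed default location; check the Picture Folder setting in Preferences for yours.
DEFAULT_PICTURE_CACHE = Path(r"C:\Cakewalk Projects\Picture Cache")


def clear_picture_cache(cache_dir: Path = DEFAULT_PICTURE_CACHE) -> int:
    """Delete everything inside cache_dir but keep the folder itself.

    Only run this while Cakewalk is closed. Returns the number of top-level
    entries removed.
    """
    cache_dir = Path(cache_dir)
    if not cache_dir.is_dir():
        raise FileNotFoundError(f"Picture cache folder not found: {cache_dir}")
    removed = 0
    for entry in cache_dir.iterdir():
        if entry.is_dir() and not entry.is_symlink():
            shutil.rmtree(entry)  # sub-folders and everything in them
        else:
            entry.unlink()        # plain files (and links, without following them)
        removed += 1
    return removed


# Usage (with Cakewalk closed): clear_picture_cache()
```

Keeping the folder itself in place, rather than deleting and recreating it, means the Picture Folder setting still points at a valid location the next time Cakewalk rebuilds the waveforms.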

-

*shudder* I hope not!

-

Look at all of us old-timers here! We'll be talking about knowing when an update is about to drop because our knee joints start aching, and how back in our day we walked through 10 miles of snow on the way to and from our computers just to record 1 track of MIDI (even more exceptional for me because I grew up in the desert - see what I have to go through?!). Now if you'll excuse me, I need to eat my steamed prunes and change my absorbent undergarments. (When you play fast guitar at this age, it's always a risk.)

-

People will label you (either fairly or not) whether you like it or not. Sometimes it's frustrating. For example, we were signed to a German label in the mid-2000s that specialised in flowery, overly orchestrated power metal, complete with elves, dragons, castles, mighty steel, soaring in the skyyyyy like an eeeeaaagggllleee ohhhhhhhhhhh kind of bands, so we would get a heap of reviews that just wrote us off as another Rhapsody of Fire or Hammerfall or something when we were nothing of the sort. Any time we played anything that wasn't what was expected, it would be "Oh, they're a power metal band that's dipping their toe in X genre" when in fact power metal was just a small part of the sound, as was thrash, as was melodic rock, extreme metal, prog, 80s pop, etc. etc.

I think the people who get what we do understand we're any and all of those things because we just play what we like. Some people say we're that "Iron Maiden sounding band" or "yeah, like Dio" or whatever - dudes, go for it. If that's what you hear, that's what you hear. I don't ever really feel the pressure of being labeled anymore - is what I'm doing honest to my vision of the band? Then let it be. (Sneaky Beatles reference there also! HAHA) Even if your biggest influence is prominent in your sound, you're going to do it your way, and people will eventually get it.

I think a good place to start looking for modern takes on 70s prog is actually some of the spin-off projects of Dream Theater, like Transatlantic or Liquid Tension Experiment, or maybe something like Spock's Beard. If the question is "does this stuff sound dated?", then my answer is "to who?" and "but do you like it?" If you're doing it for the right reasons, people will pick up on that, as opposed to you trying to mold yourself into something you're not just to keep some critics happy.

-

The answer is in the post immediately above yours.

-

Yeah, we really tried to keep this one simple but classy (even if YouTube absolutely destroyed the quality - glad I spent all of that money on a cinema camera only for it to look like it was filmed on a potato). It's kind of funny doing clips like this; the normal stuff I do is mostly much more elaborate, like the one we did for my band, or this one for a punk/alt band called Raising Ravens. Having 2 people stand in the bush while I walked around them with a steadicam was a (literal) walk in the park in comparison!

-

Any way to link the input echo and record arm controls?

Lord Tim replied to sean72's topic in Cakewalk by BandLab

If it's a safeguard, it shouldn't hang the window; it should just be unavailable. I've reported this one as a bug.

-

How do the Pros Route Tracks, Busses, Masters

Lord Tim replied to Scott Kendrick's topic in Production Techniques

OK, first I'll say that Mark and Glenn nailed it - this is exactly the process I use too. It's basically a time-saving thing to stop boring, repetitive tasks getting in the way of making creative decisions.

As far as the whys go when setting up what to use in your last question, it all depends on the application. If I know I'm going to be making stems for whatever reason (sending out for a remix, or a mastering engineer has requested them aside from a stereo master, or I want a bit more control over certain elements later, eg: different orchestral sections that I'm dropping into another mix), I'll try to make stuff as modular as possible, with each group having its own self-contained effects. This isn't great as far as CPU efficiency goes, and can actually be a bit annoying if you want to make any global changes quickly, but it pays off later with what it spits out. For a great example of that, go to Help > Check For Updates in Cakewalk and grab the demo projects. There's one in there by me called "Time to Fly" that I did in this modular way, and it includes a PDF where I talk about the methodology of how and why I did it that way. Using shared global effects when you spit out stems can give you unpredictable results, so keeping things self-contained is a good safeguard.

Typically, though, I will have shared effects, busses and aux tracks when I do a mix, because the bulk of the time I'm going to be doing everything in the single process (including mastering... which is a big can of worms, and there are definite cons to this. I've found a great process that works for me, but I'd almost always recommend against doing this, honestly).

Why use busses instead of aux tracks, or vice versa? Ehh... Honestly, both work more or less the same, with a few caveats. On the similarities side, if you wanted to set up a bunch of aux tracks with reverbs, delays, etc. on there, it's not a lot different from just setting up busses, other than it's sometimes nicer to have them either hidden away down in the bus pane, or up where you can easily navigate to them in the track pane. That's really personal preference if you use them in that way. My preference is for any global effects (general reverb, overall delays, doubler/slapback, etc.) to live in the bus pane, because everything in general is going there. On the other hand, if I have a specific effect for a section, for example a drum reverb, I'll tend to put that up near the drum tracks, usually in the same folder, so if I want to mute the drums, that also kills all of the reverbs and anything else associated with them, or if I just want to listen to the drum reverb, I'm not hearing echoes of other tracks in there as well, like I would with a global reverb.

The other thing I tend to use aux tracks for more than busses is subgroups. Again, the "Time to Fly" demo project is a great example of that. I'll have drum parts mixed down in sections (eg: kick in / kick out / kick trigger > kicks > drum master, snare top / snare bottom / snare trigger > snares > drum master, etc.) so if I just want to add reverb to a snare or adjust the overall EQ of a kick, I'm working on the composite sound rather than fiddling around with 3 or 4 tracks per element. Backing vocals are another big thing I use aux tracks for - having each harmony layer made up of 6 or so voices, all mixed down to a single harmony layer, and then all of those layers mixed down to an overall choirs master track, makes balancing the mix much easier than juggling 42 discrete vocal tracks. I used to do this kind of submixing with busses, which worked, but with aux tracks I can put all of these things in the one folder and hide it away unless I need to change anything, or I can put the source tracks there and just leave the masters out in a main mix folder. It's a much cleaner and more focused experience.
The other really big bonus of aux tracks is that you can record them off and archive the source tracks. So if your machine is starting to creak under the weight of your 200-track orchestral synth layout, set each section to its own aux master, record each section's aux, and archive the source tracks to take the VSTs offline. That's a lot more tricky using busses. It just means that if you want to make any changes, you'll have to delete the aux recording, unarchive the source tracks and repeat the process. It could mean the difference between a project that plays in real time or not, though.

But to answer your specific questions:

It depends. If I want stuff to sound like it's in the same space, I'll use a global reverb bus for that. On the other hand, if I have a specific sound I want for an instrument (or similar groups of instruments), I'll use a different bus or aux for that, eg: I'd like a fairly open reverb sound for vocals, synths, maybe guitars, etc. but I'd want a much denser reverb for drums to add thickness to them. There's also no reason you can't combine both - I might like a big gated AMS NonLin-style reverb on my snare for a big 80s sound, but I might also send part of that to the global reverb because it adds a nice decay and air to it.

Sure, mine do often. The send levels are typically wildly different, though.

OK, this is where how you set up the project comes into play. If you've done it in a modular way, I'd typically send that to that section's master bus. So for example, if I had drums set up with a dedicated aux for drum reverb, I won't normally send the reverb and the drums separately to the project master bus directly, but I'll send them to a drum master bus first and then onwards to the project master.
That makes sure that if I turn the drums up or down or process them further (say, adding a nice spanky compressor on the drums, or parallel processing, or whatever), I'm not changing the balance of the drum reverb, because it's all feeding into the same drum master bus. (Note: I'm saying "bus" here but that can mean bus or aux, depending on how you prefer to work.) For any of my global effects busses, I tend to send them straight to the main project master bus. I recommend a project master rather than sending to hardware outs directly, because you have a lot more control over levels, any additional final effects, etc.

It's a big topic! Honestly, a lot of how you do it will come down to how you prefer to work and the intended final delivery. But the real key is consistency. If you can work out a template for each scenario where you'll have tracks and routing pre-prepared for everything, there's far less boring grunt work setting stuff up, and you can rely almost entirely on muscle memory as to how you record and where the tracks are, so it really takes a bunch of obstacles out from between your brain and the speakers.
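As a rough numeric illustration of why routing the drum reverb into the drum master keeps the balance intact: if each fader is treated as a gain multiplier along the chain track > subgroup > drum master > project master, then moving the drum master multiplies the dry drums and the reverb return by the same factor, so their ratio doesn't change. The routing names follow the drum example above, but the dictionary layout and helper functions are hypothetical, and the model deliberately ignores sends, pan and pre/post-fader behaviour.

```python
from typing import Dict, List, Optional

# Hypothetical routing, loosely following the drum example above:
# each track/aux/bus names the bus it outputs to (None = final master).
ROUTING: Dict[str, Optional[str]] = {
    "kick in": "kicks",
    "kick out": "kicks",
    "snare top": "snares",
    "snare bottom": "snares",
    "kicks": "drum master",
    "snares": "drum master",
    "drum reverb": "drum master",
    "drum master": "project master",
    "project master": None,
}


def db_to_gain(db: float) -> float:
    """Convert a fader setting in dB to a linear amplitude factor."""
    return 10 ** (db / 20)


def path_to_master(name: str, routing: Dict[str, Optional[str]]) -> List[str]:
    """Follow outputs from `name` until reaching a bus with no output."""
    path = [name]
    while routing[path[-1]] is not None:
        nxt = routing[path[-1]]
        if nxt in path:
            raise ValueError(f"routing loop at {nxt!r}")
        path.append(nxt)
    return path


def effective_gain(name: str, faders_db: Dict[str, float],
                   routing: Dict[str, Optional[str]]) -> float:
    """Multiply the linear gains of every fader on the way to the master.

    Faders not listed in faders_db are assumed to sit at 0 dB (gain 1.0).
    """
    gain = 1.0
    for node in path_to_master(name, routing):
        gain *= db_to_gain(faders_db.get(node, 0.0))
    return gain


faders = {"kicks": -3.0, "drum reverb": -12.0}
before = effective_gain("drum reverb", faders, ROUTING) / effective_gain("kick in", faders, ROUTING)
faders["drum master"] = 4.0  # turn the whole drum group up
after = effective_gain("drum reverb", faders, ROUTING) / effective_gain("kick in", faders, ROUTING)
# before and after match (up to float rounding): the reverb/drum balance holds.
```

If the reverb aux instead went straight to the project master, the drum master move would change the dry drums but not the reverb, and the balance would shift.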

-

If nothing else, I've learned there are more different names for flares than I could ever have imagined! HAHA! Cheers for the great comments, guys!