-

Posts

3,371 -

Joined

-

Last visited

-

Days Won

22

Everything posted by bitflipper

-

Not me. However, there is some validity to the concept of LRC panning. The main message is that you shouldn't be afraid to be aggressive in your panorama. A 10% adjustment may sound great while you're mixing, especially if you're using headphones. But out in the car or on your living room hi-fi it's going to be too subtle to hear. Instead of a nice wide mix it's semi-mono mush. I prefer a modified LRC approach, which is to place almost everything into one of 5 positions: L100, L50, C, R50 and R100.

Footnote: people recommending LRC are assuming all your tracks are mono. While mono tracks are easier to mix and make for wider-sounding mixes, in practice you're probably going to have to work in some stereo tracks as well. That can be especially challenging if you're creating music entirely from synths and samplers, which all too often insist on being stereo, dammit! Counter-intuitively, a mix made from all stereo tracks can sound more like wide mono if you don't handle panning properly. Fortunately, Cakewalk comes with a tool for panning stereo properly called Channel Tools. Even better, grab the panner from Boz Digital Labs.
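To put rough numbers on why a 10% pan is so subtle, here is a minimal sketch. It assumes a sine/cosine constant-power pan law, which is only one of several laws a DAW might use, so treat the figures as illustrative. The function names and the -100..+100 pan scale are made up for this example.

```python
import math

# The five slots from the post, as pan values from -100 (L100) to +100 (R100).
SLOTS = (-100, -50, 0, 50, 100)

def snap_to_slot(pan):
    """Return the slot closest to an arbitrary pan value."""
    return min(SLOTS, key=lambda slot: abs(slot - pan))

def constant_power_gains(pan):
    """Left/right gains under a sine/cosine constant-power law (an assumption)."""
    if not -100 <= pan <= 100:
        raise ValueError("pan must be between -100 and 100")
    angle = (pan + 100) / 200 * (math.pi / 2)  # 0 = hard left, pi/2 = hard right
    return math.cos(angle), math.sin(angle)

def level_difference_db(pan):
    """Right-minus-left level difference in dB for a mono source at `pan`."""
    if abs(pan) == 100:
        return math.copysign(math.inf, pan)  # hard-panned: one side is silent
    left, right = constant_power_gains(pan)
    return 20 * math.log10(right / left)

for pan in (10, 50):
    print(f"pan {pan:+d}: {level_difference_db(pan):.1f} dB between channels")
```

Under that assumed law, a 10% pan leaves the two channels only about 1.4 dB apart, while the R50 slot gives about 7.7 dB.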

-

I haven't used references in years. The best reference is to listen to lots of well-made records on your monitors. Over just a few hours, you will subconsciously acquire a sense of what a well-made record sounds like on your speakers. It's a real phenomenon: I originally read about it in a book on psychoacoustics, but can't remember now which book it was. It could even explain why some people insist that you have to "break in" your speakers, which I believe is nonsense.

-

Try switching to a different USB port.

-

And here I thought *I* was the biggest tightwad around here. You skinflints probably wouldn't be interested, but if you're willing to part with five whole bucks, Iron Pack #5 is the best of the collection, imo.

-

Surely that video can't be that old, can it? I mean, I was in my 50s when I first saw it...oh.

-

...with nothing but the drum beat. This was harder than I expected.

-

When I was in my teens and twenties, I took pride in my John Kay impersonation. When I sang "I like to dream..." all the shiny was stripped from my throat after the "I". Now my voice sounds like that all the time. Some say it has "character", when they're trying to be charitable. Wish I'd been kinder to my vocal cords in the early days.

-

The overarching theme here is that Melda could be your one-stop solution because they offer GOBS of plugins - but not all of them are necessarily best-in-class. Anyone looking for a single vendor to replace Waves will be frustrated. Everybody knows my opinion of Waves the company, but I have to admit that nobody else offers the same breadth of products with consistently high quality. Some have the quality but not the breadth (e.g. FabFilter), some have breadth but not the quality (e.g. Plug & Mix). You really have to take the buffet approach when building your toolkit. Semi-related rant...a lot of users moan about Melda's UIs. Voxengo often gets called "ugly". Many also complain about ValhallaDSP's utilitarian look. To those whiners I say man up and invest the time to learn what these products can do. Nobody's gonna critique your mix by saying "I can hear that you used some ugly plugins". /rant

-

That was a good demo, Simeon. Unfortunately, I was not moved to make the purchase. Those variable slides are great and something my current nylon libraries don't do. But I couldn't justify $69 for just that one feature. There also doesn't seem to be polyphonic legato. I also don't like that fret noises, legato and sustains are all separate nki's rather than being keyswitched articulations. The instrument does sound really good and the vibrato sounds natural. But I'll be sticking with Renaxxance for now.

-

How many of these have you used? I was surprised at how many of them I've owned or used (Kustom, Shure, Electrovoice, Echolette, Selmer, WEM, Bose). I just kept thinking that somebody's garage had a lot more room in it for a while.

-

I'm partial to nonstandard percussion, so I actually use these a lot. Stomps make an interesting substitute (or layer) for conventional bass drums. I like to layer finger snaps and handclaps and run them through a delay.

-

That stuff makes my throat hurt. Right after the Waves debacle, there was a whole cluster of videos on the theme of "how to dump Waves". This one was pretty extensive.

-

Just yesterday I was thinking "sure these 6 trombones sound pretty epic, but how much better would it be if I added 60 more?"

-

That's exactly the kind of thing I wanted to know. Thanks Simeon. I'll be checking out your video. Slides and legato transitions are key to all string libraries' believability. Most of the time, I resort to fiddly scroll wheel tweaks to implement them. If a sampled guitar includes slides, they are usually pre-recorded samples with a fixed duration and span, which can sound quite authentic but are only useful at specific intervals and note durations. That's why my go-to solo violin is Audio Modeling's modeled SWAM Violin: being synthesized rather than sampled, it allows you to define your own transitions. For Kontakt libraries, this requires clever scripting and doesn't always sound natural. OTS' Slide Acoustic and Slide Lap Steel do a pretty good job of this, but at the cost of being fiddly and time-consuming to program. I'm waiting for a true modeled guitar to come along. In the meantime, my own secret trick is that many of the "guitars" in my recordings are actually Zebra2.

-

And you are correct. However, the master bus isn't the last place it goes. It's second-to-last. From there the audio is handed off to the audio interface via its driver, and is labeled in the dropdown list of routing destinations with the name that Windows gave it. On my system, for example, it's called "Speakers (Saffire Audio)" because that's what Windows calls my Focusrite Saffire Pro interface. Like Steve says above, it wouldn't matter whether you sent the reference track to the master or to the outside world directly -- if, and only if, there is no processing being done on the master bus. Usually, there is. And you want to bypass that so that the DAW is having zero effect on the sound of your reference.

-

This one might be pretty good, but it's hard to tell as there are no detailed walkthroughs. They've tried to make the interface simple by not using keyswitches and limiting the number of articulations, but I'd need to see a real demo to know if they've succeeded or just dumbed it down too much. Either way, it'd be hard to separate me from my longtime go-to nylon guitar, Renaxxance from Indiginus. It doesn't have as many articulations and options as the OTS Nylon, but for the price of the OTS product you could buy Renaxxance plus two more instruments.

-

Anyone know if the "New" Iron Pack 7 is an update to the old "Iron Pack 7"? I'll gladly give them another 3 bucks if they've added content.

-

Ah, the true Golden Era. Young folks complain that they'll never be able to own a home, jealous that their grandparents bought theirs for less than the price of a pickup truck today. Little do they know, that wasn't the best perk us boomers enjoyed.

-

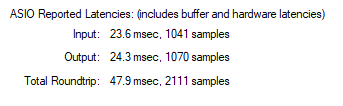

It seems I was mistaken about UM2 not supporting direct monitoring. Sorry about that. Odd that the manufacturer didn't think to mention it, but made a big deal of it when advertising its more expensive siblings. AFAIK, Cakewalk has no built-in loopback test. It's something you'd only ever do once, after installing a new interface or switching drivers. However, the DAW does query the interface's driver so that it knows how much compensation to apply. If you're using ASIO, you can see the reported roundtrip latency in the Driver Settings panel of Preferences, under "ASIO Reported Latencies". btw, the only way to guarantee an accurate round-trip latency measurement is via a loopback test, where you send audio out from your computer, route it back through the interface and record it.
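For anyone curious what the analysis half of a loopback test looks like, here is a minimal sketch. It assumes you have already played a short test signal out of the interface, cabled the output back to an input, and recorded the result. The playback and recording step is not shown, and the buffers below are simulated stand-ins. The function names are hypothetical, not part of Cakewalk or any driver.

```python
def estimate_latency_samples(sent, recorded):
    """Return the lag (in samples) where `recorded` best matches `sent`.

    Brute-force cross-correlation: slide `sent` along `recorded` and keep
    the offset with the highest dot product.
    """
    if len(recorded) < len(sent):
        raise ValueError("recording is shorter than the test signal")
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(recorded) - len(sent) + 1):
        score = sum(s * recorded[lag + i] for i, s in enumerate(sent))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def samples_to_ms(samples, sample_rate):
    """Convert a sample count to milliseconds."""
    return 1000.0 * samples / sample_rate

# Simulated loopback: the test click comes back 441 samples late and
# slightly quieter. Real recordings would also contain noise.
SAMPLE_RATE = 44100  # assumed project sample rate
click = [1.0, -1.0, 0.5, -0.5]
recording = [0.0] * 441 + [0.8 * x for x in click] + [0.0] * 100

lag = estimate_latency_samples(click, recording)
print(lag, "samples =", samples_to_ms(lag, SAMPLE_RATE), "ms")
```

The measured figure can then be compared against what the driver reports. Any difference is the amount the reported latency is off by.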

-

Becanful when buying the Sonah ... Crashes a lot!

bitflipper replied to Sheens's topic in The Coffee House

Sure. They just don't venture down into the basement often.

-

Now he may actually be a ghost from a wishing well.

-

Becanful when buying the Sonah ... Crashes a lot!

bitflipper replied to Sheens's topic in The Coffee House

Since we're strolling down memory lane, anybody remember Sickvision? At first I defended the fellow, assuming that he was not a native English speaker. I was wrong. He's from Boston. (btw, lest you think I made up that quote for the sake of humor, the original thread is here.)

-

Becanful when buying the Sonah ... Crashes a lot!

bitflipper replied to Sheens's topic in The Coffee House

You guys do realize that newer forumites will not get any of these references, right?

-

Good answer! The only place you'll ever see a square square wave is in the icon silkscreened onto your synthesizer next to the waveform selector.

-

There's always a mystery to tug at your brain, which is one of the reasons ours is the best hobby. I commend you for answering your own questions through experimentation. Most folks just ask a question on some forum, accept whatever explanation is offered and incorporate it as an eternal fact from then on. As for what the volume slider does, it sets the starting value for CC7 for the track. It does not have anything to do with velocities. You can observe the action of the slider by setting it to something other than "(101)". If your soft synth respects CC7, you can watch its volume control move as you move the volume slider in the track header. You can also see that if the volume slider is first set to, say, 112, and you then add a volume automation envelope to the track, its initial value will also be 112. "(101)" isn't a real value. It just indicates that the DAW will not be forcing an initial value for CC7. Do all synths respect CC7 for volume? No. It's entirely up to the developer how or whether they want to implement any continuous controller. Do all synths adjust volume based on velocity? No. Again, it's at the developer's discretion. Because volume often does go up with velocity, I suspect that's why people might conflate volume and velocity.
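To make the CC7-versus-velocity distinction concrete, here is a small sketch of the raw MIDI bytes involved. The status bytes (0xB0 for control change, 0x90 for note-on) and controller number 7 for channel volume come from the MIDI 1.0 spec. The helper names are made up for illustration and have nothing to do with how Cakewalk builds its messages internally.

```python
CC_CHANNEL_VOLUME = 7  # controller number 7 = channel volume in MIDI 1.0

def _check(channel, *data):
    """Validate a 0-15 channel and 7-bit data bytes."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if any(not 0 <= d <= 127 for d in data):
        raise ValueError("data bytes must be 0-127")

def control_change(channel, controller, value):
    """Build a 3-byte control change message."""
    _check(channel, controller, value)
    return bytes([0xB0 | channel, controller, value])

def note_on(channel, note, velocity):
    """Build a 3-byte note-on message; velocity travels with each note."""
    _check(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])

# Roughly what a track volume slider set to 112 would send once, up front...
print(control_change(0, CC_CHANNEL_VOLUME, 112).hex(" "))
# ...versus a velocity of 100, which is part of one specific note.
print(note_on(0, 60, 100).hex(" "))
```

They are two unrelated messages: one is per-channel and sent independently of any note, the other is per-note. What the synth does with either one is still up to its developer.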