Everything posted by Glenn Stanton

-

how far will you go when everything that matters is gone? comments welcome.

-

in W11 the default is "C:\ProgramData" which is different than W10 which has "C:\Program Data" - either someone at MS made an oops or they found that having a space in the directory name was always a bad choice and caused more issues than not...

-

the audio part of the secret is the anti-aliasing filters used. for the digital side: power, clocks, etc all contribute to how good (or not) an AD/DA unit "sounds". poor clock stability, under-powered supplies, cheap opamps and filtering components all make a difference. and much of it is measurable. check out a DIY equipment forum where some folks get really in depth on the components, actual o-scope views, log traces, etc etc.
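
as a rough illustration of the "measurable" part: a common rule of thumb puts a ceiling on SNR from sampling clock jitter alone, SNR = -20*log10(2*pi*f*tj), for a full-scale sine at frequency f sampled with RMS jitter tj. a minimal python sketch, assuming that idealized formula and nothing else (no filter, opamp or power supply effects). the function name and the jitter values are made up for illustration, not taken from any particular unit:

```python
import math


def jitter_limited_snr_db(signal_hz: float, rms_jitter_s: float) -> float:
    """Idealized SNR ceiling (dB) set only by random sampling-clock jitter.

    Assumes a full-scale sine input; real converters have other noise sources.
    """
    return -20.0 * math.log10(2.0 * math.pi * signal_hz * rms_jitter_s)


if __name__ == "__main__":
    # illustrative jitter values only (picoseconds RMS), at a 20 kHz test tone
    for jitter_ps in (10, 100, 1000):
        snr = jitter_limited_snr_db(20_000.0, jitter_ps * 1e-12)
        print(f"{jitter_ps:>5} ps RMS jitter -> about {snr:.1f} dB SNR ceiling")
```

every 10x increase in jitter costs 20 dB of that ceiling, which is one reason clock quality shows up in measurements.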

-

sure - share screenshots of your laptop and desktop display settings. also any video display information would help as well.

-

one of my favorites as a kid (14?) was to take an old single tube phono preamp (from my old non-turning portable record player) and just plug the guitar straight in and then output to the amp (whichever one, tube or solid state, never really mattered at the time) - using the volume control to get just the right distortion - from just light distortion to that "modern" metal screaming distortion! the key was to add a volume pot in front of the input to limit the guitar level and a 10K resistor in series for the input load.

-

Removing all VST2plugs that have a VST3

Glenn Stanton replied to Pathfinder's topic in Cakewalk by BandLab

no idea. so i just make it a hard selection and ignore it. presumably it's because whatever matching criteria is used differs - names, CRC, uuid, etc etc which don't align because the vendor gets creative and wants to make a splash with their VST3 version... -

Removing all VST2plugs that have a VST3

Glenn Stanton replied to Pathfinder's topic in Cakewalk by BandLab

i just go into my plugins manager and exclude any VST2 for which i have a VST3. takes a few minutes. then if i need the VST2 version for some reason (seldom) then it's right there, re-enable it and ready to go. no messing with installations or paths, and updates from the vendor work as expected and very rarely affect the excluded setting. -

sure 192kHz 32-bit poop emoji ?

-

Problem with Toontrack EZ Bass, midi volume resetting to 9%

Glenn Stanton replied to Leander's topic in Instruments & Effects

you may have an event triggering the volume change or an envelope controlling it. i had a similar issue with sforzando and kontakt in my recording template until i found i had saved an event during testing which triggered the volume drop. -

that's because the people who were creating the larger ones we see today were also killed in a tragic accident... coincidence? you have to wonder...

-

i've always used an SM58 and sometimes an SM57 w/ pop filter. then about 12 years ago? i bought a Behringer B-2 Pro. $200. i've used this with many really good vocalists both recording and live and it is just awesome. esp given the price. now i have heard of a few people with lemons but most folks who try it are surprised by how good it is for vocals. multiple patterns (omni, figure-8, and cardioid), 10dB pad (never used it myself). my other favorite is the Audio-Technica 3035 - but it's more "neutral" than the dynamics or the B-2 so it's good for cases where you need really flat (at least it seems flatter). right now i have a Slate VMS ML-1 sitting next to me and it's ok -- but i really like the filters to mimic other mics so i use those more than recording with the mic itself.

-

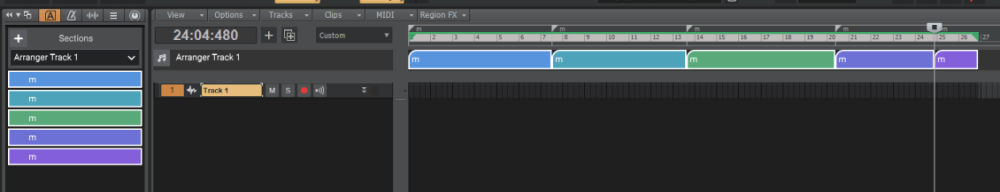

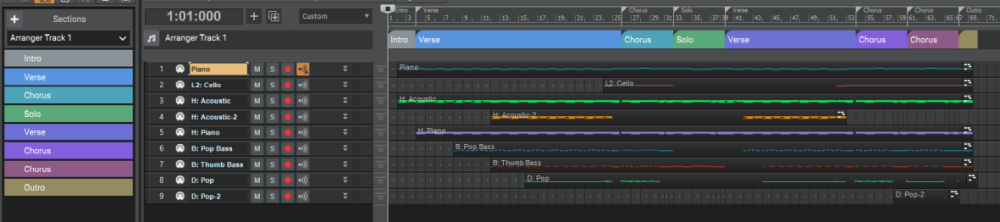

Creating Sections from Markers

Glenn Stanton replied to Sridhar Raghavan's topic in Cakewalk by BandLab

works ok for me. just make sure you have a selection in the timeline across all the markers you're trying to create the sections from. picks up the marker names from an imported MIDI file: -

depends on your meaning - if you set the tempo of the project lower it will play back with longer timing between sounds or extend continuous sounds. if you want the audio itself to play slower regardless of tempo, there are some plugins that can mimic slower audio, and there is stretching clips and lowering the tempo as well. e.g. https://www.pluginboutique.com/product/3-Studio-Tools/72-Utility/5026-SlowMo you could also play the audio in your media player - most have 1/4, 1/3, 1/2, 1.5x, 2x etc speed controls if you're just listening to it.
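
to put numbers on the tempo part, here's a minimal python sketch of the arithmetic only (it does not touch any audio). the function names and the 120 -> 90 bpm example are hypothetical, just for illustration:

```python
def seconds_per_beat(bpm: float) -> float:
    """Length of one beat in seconds at the given tempo."""
    return 60.0 / bpm


def stretch_ratio(old_bpm: float, new_bpm: float) -> float:
    """How much longer (>1) or shorter (<1) a clip must become to follow a tempo change."""
    return old_bpm / new_bpm


if __name__ == "__main__":
    old_bpm, new_bpm = 120.0, 90.0  # illustrative tempos
    ratio = stretch_ratio(old_bpm, new_bpm)
    print(f"beat length: {seconds_per_beat(old_bpm):.3f}s -> {seconds_per_beat(new_bpm):.3f}s")
    print(f"a 10 s clip stretched to follow the new tempo lasts {10.0 * ratio:.2f}s")
```

same idea for a media player speed control: at 1/2 speed the ratio is 2, so everything takes twice as long.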

-

[SOLVED] Guitar Rig 6 - Cakewalk Stereo Interleave Issue

Glenn Stanton replied to sadicus's question in Q&A

i think there are some plugins which can split based on frequency - some on ranges (like the Waves Studio Rack) and others more like an EQ (multiband, dynamic, static). e.g. https://www.waves.com/plugins/studiorack https://www.eventideaudio.com/plug-ins/spliteq/ https://www.kvraudio.com/product/thesplit-by-thezhe https://bedroomproducersblog.com/2021/11/03/sound-fingers-dub-spl4/ https://www.gearnews.com/effect-grid-plug-in-split-audio-into-9-frequency-ranges-to-process-independently/ https://integraudio.com/7-best-frequency-splitter-plugin/ -

Looking for a good Horns stab VST pluggin

Glenn Stanton replied to tdehan's topic in Instruments & Effects

a fairly nice low cost one i got recently is the Session Horns from NI, but for free the Bolland Brass Section soundfonts in Sforzando work nicely. https://www.musical-artifacts.com/artifacts?formats=sf2&tags=bolland https://musical-artifacts.com/artifacts?formats=sf2&tags=brass+section -

Cakewalk Can't Find ASIO Device Repeatedly

Glenn Stanton replied to UCG Musician's topic in Cakewalk by BandLab

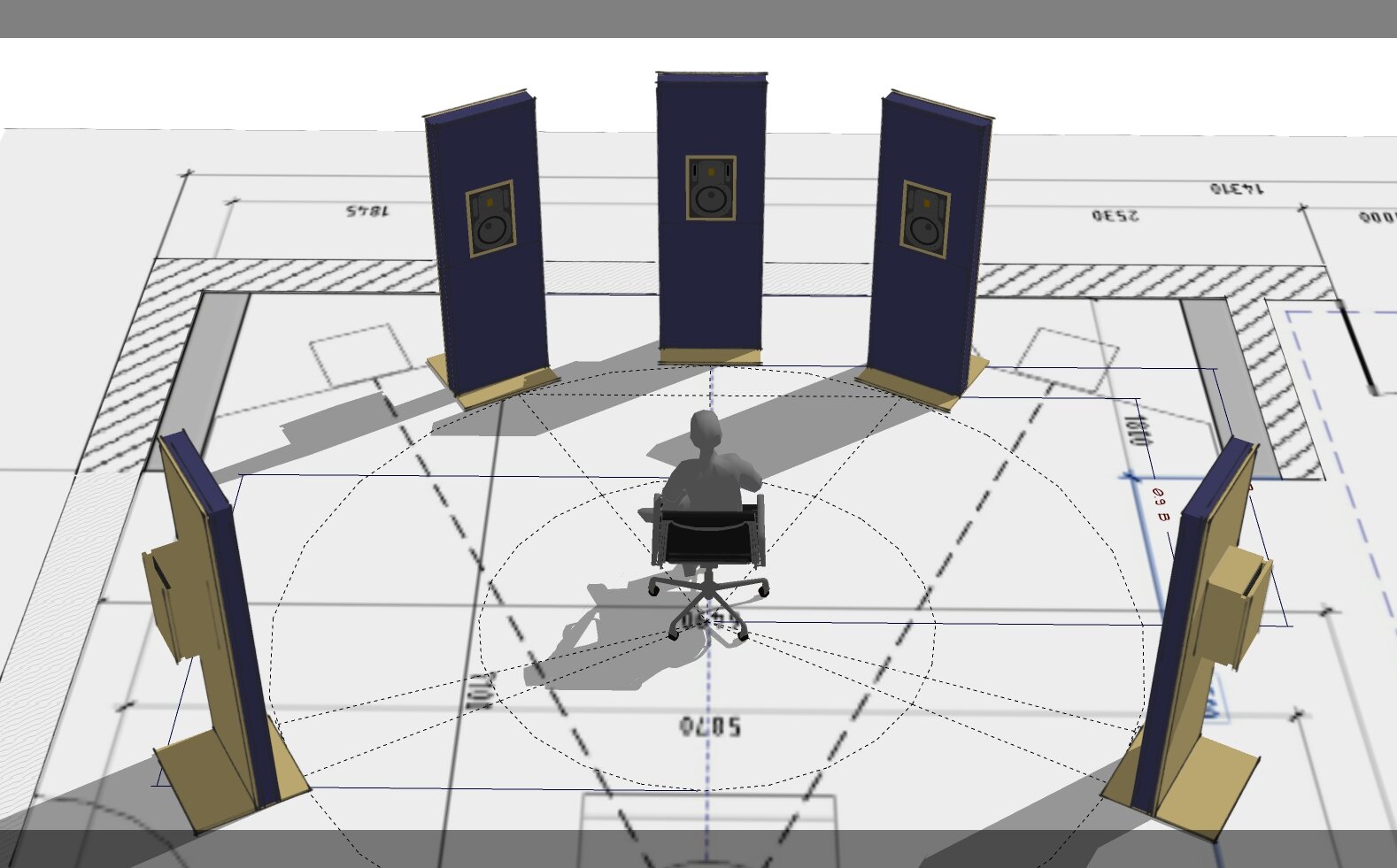

so, using it for a 5.1 scenario - requires 6 of the 8 alt channels & leaving the 2 mains for stereo (if desired). not sure why you would configure it for "5.1" mode when it's a multiple output device ? when i use my UMC1820 - well in my case - i have optical out to my 5.1 JVC receiver to run the "5.1" setup and multiple outs for 2.1, 2.0, and so on. but could easily run 6 channels for 5.1 if i was doing mixes on a larger system. it seems like factory settings and simply patching the outputs to the appropriate speakers/amps etc would get you working. -

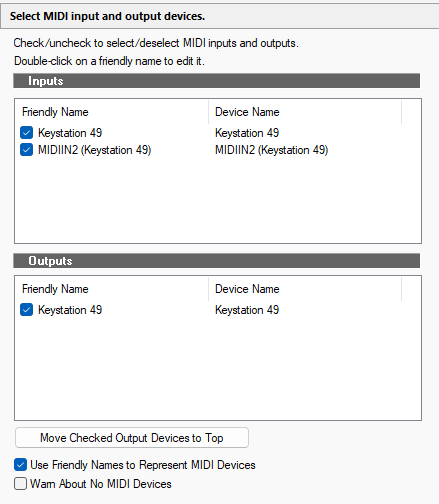

Produce sound from keyboard to PC speaker

Glenn Stanton replied to William Chan's topic in Instruments & Effects

usually you get 2 MIDI devices - the "instrument" MIDI and the "controller" MIDI. for me most times the MIDIIN2 and MIDIOUT2 (not shown in this example) is my "controller" MIDI which uses certain keys and buttons as a means to control something in the DAW. my "instrument" MIDI is the main one which is where my note keys, pedal etc are all processed in the DAW for the VI and generally recording the MIDI performance. -

Produce sound from keyboard to PC speaker

Glenn Stanton replied to William Chan's topic in Instruments & Effects

maybe some divide and conquer steps - in my experience those cable MIDI devices have a lot of variation in their ability to do even simple things all the time...

0. what version of cakewalk are you using?
1. remove the MIDI cable device from the MIDI out list. hit apply. (this means you're using the keyboard as a MIDI controller input only. this should also drop the mapping you have set up.)
2. uncheck zero controllers. hit apply. while it's likely we'll turn this back on, let's check this option. test.

-

it would be important to know when it stopped working for you. or if it never actually worked and this is something new you're trying out. i'm guessing it's not Cakewalk incorrectly transferring the information since you can go from CW to EZK (and i tried it with EZB as well) w/o any issue. it's only when you are using an intermediary VST to broker it that you're running into the issue. maybe reach out on the BC forum to see if there are issues in other DAWs as well, or if this approach is generally not supported.

-

as your internal organs liquefy from 5Hz @ 140dB, we'd hear the splash of guts on the ground... ?

-

if your room isn't treated and your ears are not "calibrated" to it (i.e. you mix something on your speakers and take it elsewhere, and it sounds correct), and if your ears are not "calibrated" to the headphones (i.e. you mix something on your headphones and take it elsewhere, and it sounds correct) then you cannot really trust either one. with the panels up, did you do any acoustic measurements to get a sense of the listening position response? and then adjust positions etc to get it flatter? with the headphones, are you using any software (e.g. sound reference etc) to flatten the headphone response? did you calibrate the playback levels of the speakers and headphones? say both to 75dB? loudness contours of our ears result in hearing things differently at different pressure levels.
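
for the level matching part, a minimal python sketch of the arithmetic, assuming you already have an SPL reading for each playback path from a meter (the readings below are made-up numbers, and the function names are hypothetical):

```python
def trim_db(measured_spl_db: float, target_spl_db: float = 75.0) -> float:
    """Gain change in dB needed to bring a measured level to the target level."""
    return target_spl_db - measured_spl_db


def db_to_linear_gain(gain_db: float) -> float:
    """Convert a dB gain change to a linear amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)


if __name__ == "__main__":
    # made-up meter readings for the same test signal on each playback path
    readings = {"speakers": 81.0, "headphones": 72.5}
    for name, spl in readings.items():
        trim = trim_db(spl)
        print(f"{name}: adjust by {trim:+.1f} dB (x{db_to_linear_gain(trim):.3f} amplitude)")
```

once both paths land on the same target, differences you hear are less likely to be just the loudness contour effect.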