Posts posted by David Baay

Posts: 3,523

-

How are the instruments assigned to outputs within the synth? I'm not familiar with Acoustica, but TTS-1 has 4 outputs. If all instruments are assigned to output 1 (the default), it won't be possible to mute that output if some track driving another instrument using the same output is soloed; the solo will override the mute, and keep the output active.

Ideally each instrument in a multi-timbral synth should be assigned to a dedicated output with a separate Synth track (i.e. an audio track with synth output assigned as input) hosting each output. This also allows you to apply different automation, FX and Send destinations/levels to each instrument.

-

Late to the party. Very cool, Noel. Listening to the performances now; as we '70s kids would say... Eeeeexcelent, Man!

Cheers,

Dave

-

ExittheLemming said:

...human players don't deviate from the grid 'randomly' i.e. they play with a perceptible rubato (rushing ahead and behind the beat where appropriate for the desired feel) that sounds human and makes the music breathe over a discernible but fluid pulse...

Yes, as demonstrated in OP's other thread on the subject. Linked here for the benefit of future searchers:

-

I suggest you capture a copy of AUD.INI after a successful launch with the default AUD.INI, and another copy after re-configuring, and see what has changed. Then try reverting the changes one at a time, relaunching after each, to find the culprit.
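If you'd rather not eyeball the two copies line by line, here's a minimal Python sketch that lists added, removed and changed keys. It assumes AUD.INI parses as a standard INI file (every key sitting under a [section] header) and is plain text; the script, the diff_ini function and the file names are my own illustration, not anything Cakewalk provides.

```python
import configparser
import sys


def load_ini(text: str) -> configparser.ConfigParser:
    """Parse INI text, keeping key case and tolerating duplicate keys."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = str  # keep keys exactly as written instead of lowercasing
    parser.read_string(text)
    return parser


def diff_ini(before_text: str, after_text: str) -> list[str]:
    """Describe every key that was added, removed or changed, section by section."""
    before, after = load_ini(before_text), load_ini(after_text)
    changes = []
    for section in sorted(set(before.sections()) | set(after.sections())):
        old = dict(before[section]) if before.has_section(section) else {}
        new = dict(after[section]) if after.has_section(section) else {}
        for key in sorted(set(old) | set(new)):
            if key not in new:
                changes.append(f"[{section}] {key}: removed (was {old[key]!r})")
            elif key not in old:
                changes.append(f"[{section}] {key}: added ({new[key]!r})")
            elif old[key] != new[key]:
                changes.append(f"[{section}] {key}: {old[key]!r} -> {new[key]!r}")
    return changes


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (hypothetical file names): python diff_ini.py AUD_before.INI AUD_after.INI
    texts = []
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8", errors="replace") as f:
            texts.append(f.read())
    for line in diff_ini(*texts):
        print(line)
```

Each printed line is a candidate to revert, which keeps the one-at-a-time relaunch testing manageable.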

-

AudioSnap has Extract and Apply Groove functions that work with both audio and MIDI. But I still don't think this is going to get the OP what he's looking for in terms of a 'flowing intro'.

-

That's why I prefer to zoom on the Now time. Since I usually want to zoom on or near the start of a note, snapping the Now time to the nearest beat and/or tabbing to the transient, and zooming gets me that spot centered in the tracks pane without having to take care where my cursor is. And if the exact spot I want goes a little off screen at high zoom levels, it's a simple matter to scroll left or right.

-

I'm not saying it's a solution. I'm confirming it's got issues, but that it can work in the situation I described, which is why I didn't immediately see a problem with it after switching my zoom options.

-

Note the first reference said 'center in the tracks pane'. So, for example, if the timeline is showing measures 1 through 16 to start, start zooming somewhere right of bar 9.

-

I found that I can reproduce that if I start by zooming with the cursor left of center in the tracks pane. Start by zooming on something right of center, and zooming anywhere will work as expected after that.

EDIT: Also, if you zoom out to the point that 1:01:000 becomes visible, the cycle starts over, and you have to again start zooming right of center.

-

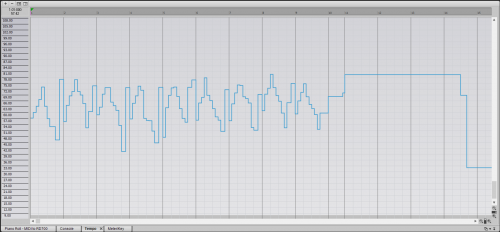

Following on my previous post, here's what a tempo map for a rubato performance looks like. The recording was made in real time without a click, and the tempo map was made by setting every eighth triplet using Set Measure/Beat At Now. Note how the tempos increase and decrease in a periodic way (what goes up must come down). The flat line is where the piece moves into a steady tempo, and I could just set a fixed tempo for those several measures until it changes again to follow a quickie rubato ending I threw in. You can hear it here:
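For anyone curious about the arithmetic behind a map like that: each span between marked eighth triplets is one third of a quarter-note beat, so the tempo for that span works out to 60 / (3 * span in seconds). A rough Python sketch, assuming you have the marked times in seconds; the function name and the example times are made up for illustration, not taken from this performance or from Cakewalk.

```python
def tempos_from_marks(times_sec, subdivisions_per_beat=3):
    """Quarter-note BPM for each span between consecutive marked subdivisions.

    subdivisions_per_beat=3 corresponds to marking every eighth-note triplet;
    use 2 for straight eighths or 1 for quarter notes.
    """
    tempos = []
    for start, end in zip(times_sec, times_sec[1:]):
        interval = end - start
        if interval <= 0:
            raise ValueError("marked times must be strictly increasing")
        beat_length = interval * subdivisions_per_beat  # seconds per quarter note
        tempos.append(60.0 / beat_length)
    return tempos


if __name__ == "__main__":
    # Hypothetical marks: spans lengthen and then shorten again, like a rubato phrase.
    marks = [0.0, 0.20, 0.42, 0.66, 0.88, 1.08]
    print([round(bpm, 1) for bpm in tempos_from_marks(marks)])
```

With spans that stretch and then contract, the computed tempos dip and recover, which is the same up-and-down shape the tempo map shows.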

-

Based on your description, I'm guessing the intro needs to be played with rubato - varying tempo significantly both from bar to bar, and within bars. You're not likely to get the desired effect just by randomizing note timings against a fixed tempo grid, and it's very difficult to 'program' natural-feeling rubato. Ideally you should find someone who plays keys to record it as MIDI, but if you want to try 'programming' it, I suggest you start by drawing tempo changes in the Tempo view.

-

Chris Jones said:

...you put your cursor on it to zoom out...

Make sure Mouse Wheel Zoom in the Track View Options menu is set to zoom At Cursor rather than At Now time. Personally, I prefer to set the Now time where I want to zoom, and then zoom on the Now time.

-

He's talking about the Virtual Controller that you select as an input to a MIDI or Instrument track. As such, there would be no way for it to animate according to the output of the track on playback. But position-sensitive velocity output should be possible to implement.

-

What audio interface? It's possible the output section of your interface or amp/monitoring system is having an issue. If meter activity on the Master bus is uninterrupted, it's probably something in the analog output path.

-

What audio interface? And are you monitoring the audio output of DeepMind through Cakewalk with input echo enabled on the audio track or direct monitoring through the interface?

If using onboard sound that does not have native ASIO drivers, it's best to set WASAPI Exclusive driver mode in audio preferences. Then make sure the Source in the sound card's mixer app is Line In, rather than What U Hear or Stereo Mix.

If you monitor through Cakewalk, what you hear will be what's recorded. If you get audio engine dropouts, either while recording or on playback, you will need to increase the buffer setting in audio preferences.

-

No MIDI input

in Q&A

MitchNC said:

When I create an instrument and MIDI track, I get no MIDI input activity on the meters.

For future reference, MIDI meters are always output meters, even when the MIDI track is armed to record. My first guess would have been that input echo was not enabled so there would be no output from live input and thus no meter activity.

-

Yes, I can confirm grayed out and non-responsive Mix Recall controls in 19.01.

-

I may change my user name back to brundlefly here at some point, but figured people would recognize the avatar.

-

Nice one, Daryl. Thoroughly enjoyed it. A great lyric combined with charmingly wacky musical elements and flawless performances and production quality that made for effortless listening all the way through.

-

While I continue to be happy to answer any and all questions, including those that have been answered many times before, I would encourage new users to Google keywords against site:forum.cakewalk.com to find archived discussions and answers. There's a lot to be learned there. Add the user name of a frequent flyer like scook, anderton or (dare I suggest) brundlefly to get targeted answers.

Examples:

missing dropped skipped midi notes site:forum.cakewalk.com

metronome not working site:forum.cakewalk.com

pan panning problem scook site:forum.cakewalk.com

pops crackles distorted playback site:forum.cakewalk.com

-

Survey says: Tasty Tones, Tunes and Technique 👍

-

Chris Jones said:

But lately I've been using the DAW to sample a keyboard and after editing and bouncing the samples and cleaning the audio file folder of unneeded files so that I just have my cleaned samples.

I've had to deal with that in the past where I wanted multi-samples named with the note number and velocity used to drive a hardware synth. I didn't bother searching at the time, but I'm guessing there are freeware file-naming apps that could help automate the renaming.
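For what it's worth, here's a minimal Python sketch of that kind of renaming. It assumes the bounced files sort by name in the same order they were recorded (lowest note first, velocity layers in order within each note), which won't hold if, say, "Take 10" sorts ahead of "Take 2", and that you know the note numbers and velocities you used. The naming pattern, function names and folder argument are all hypothetical, and it defaults to a dry run that only prints the proposed names.

```python
import sys
from pathlib import Path


def sample_names(notes, velocities, prefix="Sample", ext=".wav"):
    """Build names like Sample_n060_v100.wav, looping velocities within each note."""
    return [f"{prefix}_n{note:03d}_v{vel:03d}{ext}" for note in notes for vel in velocities]


def rename_samples(folder, notes, velocities, prefix="Sample", dry_run=True):
    """Pair sorted .wav files with generated names; rename only when dry_run is False."""
    files = sorted(Path(folder).glob("*.wav"))  # assumes sort order == recording order
    names = sample_names(notes, velocities, prefix)
    if len(files) != len(names):
        raise ValueError(f"found {len(files)} files but expected {len(names)}")
    for src, new_name in zip(files, names):
        dst = src.with_name(new_name)
        print(f"{src.name} -> {dst.name}")
        if not dry_run:
            src.rename(dst)


if __name__ == "__main__" and len(sys.argv) == 2:
    # Hypothetical layout: two notes with three velocity layers each (6 files total).
    rename_samples(sys.argv[1], notes=[48, 60], velocities=[40, 80, 127], prefix="Keys")
```

Checking the printed pairs before setting dry_run=False is the whole point of the default; a miscount between files and expected names stops it before anything is renamed.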

-

Yes, that's still true; the OP just wants more control over the naming of that file.

-

My favorite genre for listening is jazz, but I don't have the training or chops to play real jazz. I also love fusion, and a lot of what I do could be classified as such, though typically with less virtuosic performances, and less adventurous changes. I don't really listen to ambient, chill-out, trance, lounge or what used to be called new age, but much of what I create leans in one of those directions. Like most around here, I grew up listening to - and still listen to - a lot of guitar-based pop and rock, both soft and hard, but I'm not a guitarist, and I don't sing or write lyrics, so I'm influenced by that listening, but it's not what I do.

Volume Automation Default When Adding A Track

in Q&A


Shift+A shows automation lanes, and will create a Volume lane by default as a convenience since that's the most often used type of automation. If you want something else, you can change the type using the drop-down in the lane header or by right-clicking the envelope, and choosing Assign. If you don't want any automation on the lane, don't Shift+A to show automation lanes in the first place.