-

Posts

2,300 -

Joined

-

Last visited

Reputation

1,372 Excellent

About Glenn Stanton

- Birthday June 8

Recent Profile Visitors

6,964 profile views

-

What happened to the Split Instrument/ Instrument track Icons?

Glenn Stanton replied to Bass Guitar's topic in Cakewalk Sonar

as a note: i also never use instrument tracks, always a dedicated MIDI track and a dedicated audio track for my VI. simply a matter of the way i organize my templates and my workflow. i'm sure if the instrument tracks work for folks, good. my preference is to keep them separate. makes aligning my record and mix templates much simpler, and if i'm "cheating", i can readily render my VI, remove the MIDI tracks and VI, and drop right into mixing.

What happened to the Split Instrument/ Instrument track Icons?

Glenn Stanton replied to Bass Guitar's topic in Cakewalk Sonar

hmmm, in your Sonar one i see keyboards on the Instrument track, and keyboard and MIDI icons on each respective split. i think we need another icon for Instrument tracks, similar to the old one, with the keyboard AND MIDI combined like those of yore...

What happened to the Split Instrument/ Instrument track Icons?

Glenn Stanton replied to Bass Guitar's topic in Cakewalk Sonar

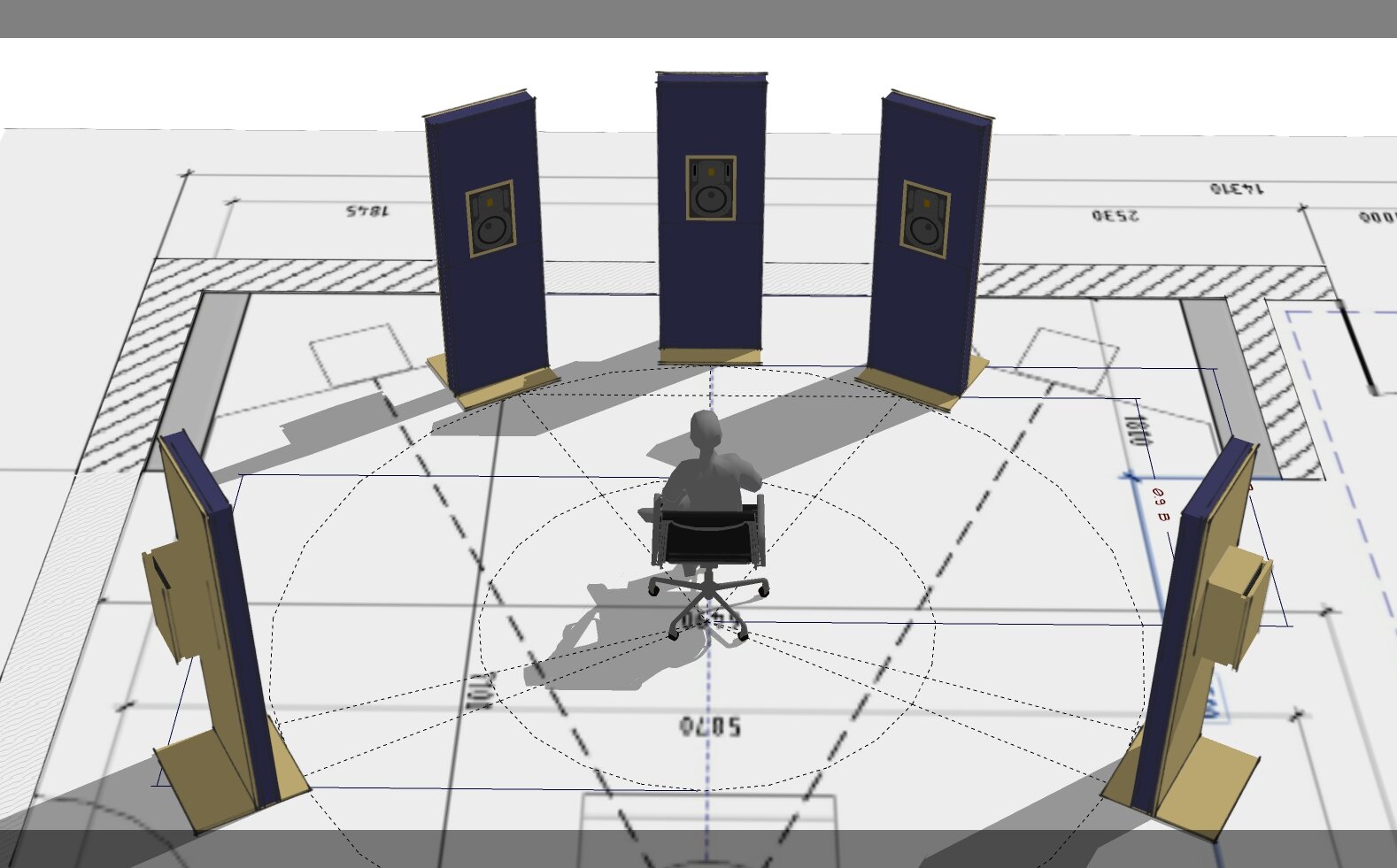

when i set up a project like you show, with Instrument tracks (i.e. each contains both the MIDI and the VI audio output), the instrument tracks all have the piano icon, and when i split one or simply insert a synth, i get both a piano and a MIDI icon (per track respectively). are you thinking the Instrument track should resemble the older MIDI+keyboard icon rather than simply the keyboard icon? fwiw, i never use Instrument tracks, just MIDI or audio. this way my templates are organized as "audio outs or record clips" in the top section, and all the MIDI tracks sit together. i do this because A) it avoids confusion, B) i can render and delete all the MIDI and VI and save as a mix project, and C) my record and mix templates are essentially the same except the mix template has no MIDI tracks.

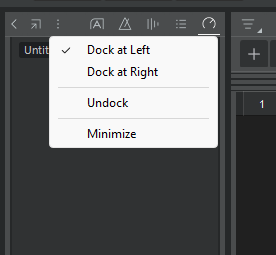

as far as i can tell, the inspector strip is its own child window. it has its own view options (docked left/right, undocked, etc.), but if you're running multiple monitors (for example) i would presume you could undock both the console view and the inspector and place them on the preferred monitor...

-

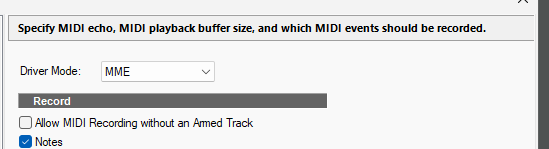

is the issue that you have multiple MIDI devices sending DAW control signals? in my setup, i have one device which (in addition to all the other MIDI info) is the controller of DAW commands (start, stop, etc.) and the other simply provides note, velocity, modulation etc. and volume information. i guess technically i could use the non-controller to send commands as well, but i just don't. if it's things like program changes, presumably that is based on the channel rather than the controller MIDI.
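To illustrate the point about program changes being tied to the channel: in MIDI 1.0, a Program Change message is a status byte `0xC0` plus the channel number, followed by one program byte, so the message itself identifies a channel and not the device that sent it. A minimal sketch, where the helper names `program_change` and `channel_of` are hypothetical and not part of Sonar or any MIDI library:

```python
def program_change(channel: int, program: int) -> bytes:
    """Build a raw MIDI 1.0 Program Change message.

    channel: 1-16 (as shown in most DAWs), program: 0-127.
    """
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1-16")
    if not 0 <= program <= 127:
        raise ValueError("program must be 0-127")
    # High nibble 0xC = Program Change, low nibble = zero-based channel.
    status = 0xC0 | (channel - 1)
    return bytes([status, program])


def channel_of(message: bytes) -> int:
    """Return the 1-based channel encoded in a channel voice message."""
    return (message[0] & 0x0F) + 1


if __name__ == "__main__":
    msg = program_change(channel=10, program=5)
    print(msg.hex(), "-> channel", channel_of(msg))
```

Two keyboards sending on the same channel would produce identical bytes here, which is why the receiving track reacts based on the channel rather than on which controller sent the message.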

-

if you don't need the graphics, this EQ is excellent: https://www.wavefoundry.net/post/antelope-audio-releases-free-eq-plugin-veq-mg4 and free now...

-

yeah, i have a number of custom workspaces which give me different tool sets and views so i can jump into whatever i'm working on: viewing just tracks, viewing just busses, viewing tracks and console, viewing busses and console, record, mix, and compose. when i open a project, i just select my custom workspace and get to work. don't care what it was when i last exited. at most 0.5 seconds to switch it. 🙂 i'll switch workspaces probably 8-10 times during a given work session. i find it easier than using screensets...

-

workspaces span projects. in mine, if i last used "mix" (custom) and then open up a recording project, the workspace will still be "mix"

-

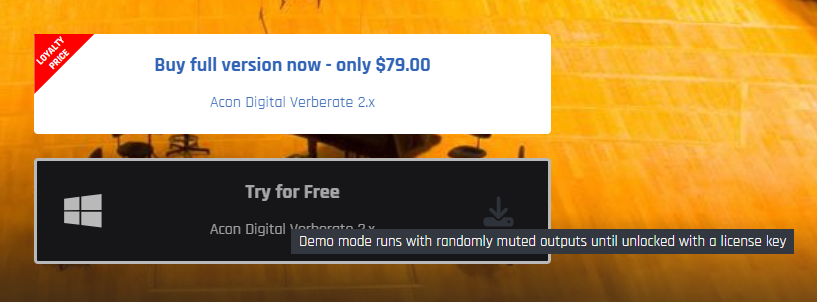

thanks! i have that one already myself. i thought you meant the main product. LOL

-

Finding a project template using the Quick Start menu

Glenn Stanton replied to norfolkmastering's topic in Cakewalk Sonar

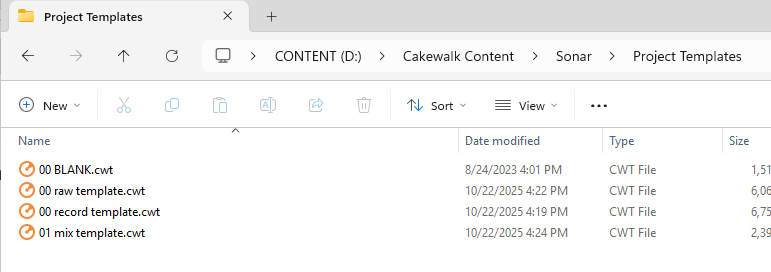

you need to put them into your DRIVE:\Cakewalk Content\Sonar\Project Templates folder. on my system that is the D: drive.

-

Using Sonar for recording and playback

Glenn Stanton replied to Forlænget Spilletid's topic in Cakewalk Sonar

if you set the pan law to -3 dB center, you should see the levels match when the track is panned to center.
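For reference, a -3 dB center setting is what a constant-power sin/cos pan taper produces: at center each side gets a gain of about 0.707, i.e. roughly -3 dB, so the summed power stays the same as a hard-panned signal. The sketch below is a generic illustration of that math, not Sonar's actual implementation; `pan_gains` and `to_db` are hypothetical helper names, and the `pan` range of -1 to 1 is an assumption.

```python
import math


def pan_gains(pan: float) -> tuple[float, float]:
    """Constant-power (sin/cos) pan law with -3 dB at center.

    pan: -1.0 = hard left, 0.0 = center, 1.0 = hard right.
    Returns (left_gain, right_gain) as linear multipliers.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    # Map pan position to an angle between 0 and pi/2.
    theta = (pan + 1.0) * math.pi / 4.0
    return math.cos(theta), math.sin(theta)


def to_db(gain: float) -> float:
    """Convert a linear gain to decibels."""
    return 20.0 * math.log10(gain) if gain > 0 else float("-inf")


if __name__ == "__main__":
    for pan in (-1.0, 0.0, 1.0):
        left, right = pan_gains(pan)
        print(f"pan={pan:+.1f}  L={to_db(left):.2f} dB  R={to_db(right):.2f} dB")
```

With a 0 dB center law, by contrast, a centered track would read about 3 dB hotter on each side than this, which is one way the meters can end up not matching.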