Posts posted by bvideo (199 posts)

-

On 8/10/2023 at 11:50 AM, Simon Payne said:

Solved it, Cakewalk: make your plugin chainer apply the VSTs in parallel as opposed to in series. They can then properly distribute the processing over multiple cores and threads. Until then, Blue Cat's PatchWork does the job.

Perhaps you are thinking of processing the plug-ins in parallel while streaming audio data through the chain. Maybe a good name for it would be "plug-in load balancing". That name is already in use in Cakewalk, however: it's the name of a feature that processes the plug-ins in parallel to distribute the load across multiple cores when possible.

-

There are differing degrees of multiprocessing. One special case is "plug-in load balancing", where the chain of plug-ins on a single track is handled by multiple processors, one per plug-in. I was wondering whether you had tried disabling that. I'm sure one of my projects had a problem with it, but only with certain plug-ins and certain settings of those plug-ins. I asked about your buffer size because plug-in load balancing is disabled when the buffer is smaller than 256 samples (by default). So I am still curious whether you have tried those things.

Aside from plug-ins and audio drivers, the core of Cakewalk and Sonar is shared by dozens (hundreds? thousands?) of users, and not many are reporting playback or rendering corruption. That's why I'm asking you these questions about a corner case that I believe exists, and has existed since plug-in load balancing was introduced. (See my early report.)
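For a sense of what that 256-sample threshold means in time, here is a small Python sketch. It assumes the rule exactly as stated in this post (load balancing only when the buffer is at least 256 samples) and a 44.1 kHz sample rate; the function names are made up for illustration and are not part of any Cakewalk API.

```python
# Sketch of the rule described above, assuming plug-in load balancing stays
# enabled only when the buffer is at least 256 samples (the default here).
DEFAULT_LB_THRESHOLD = 256  # samples; assumed default threshold


def buffer_ms(buffer_samples: int, sample_rate: int) -> float:
    """Length of one audio buffer in milliseconds."""
    return 1000.0 * buffer_samples / sample_rate


def load_balancing_possible(buffer_samples: int,
                            threshold: int = DEFAULT_LB_THRESHOLD) -> bool:
    """True if the buffer is large enough for plug-in load balancing."""
    return buffer_samples >= threshold


if __name__ == "__main__":
    for size in (64, 128, 256, 512):
        state = "possible" if load_balancing_possible(size) else "disabled"
        print(f"{size:4d} samples = {buffer_ms(size, 44100):5.2f} ms "
              f"at 44.1 kHz -> load balancing {state}")
```

So at 44.1 kHz, anything under roughly 5.8 ms of buffer would fall below the threshold.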

-

Hi Henrizzle,

"... disabled multiprocessing ..." How exactly? Did you do that in the BIOS? Did you disable "plug-in load balancing"? Did you set CPU affinity to just one processor? Something else? (I ask with curiosity because of a prior experience of mine.)

Also, do you have the "64-bit Double Precision Engine" enabled or disabled? Is your audio buffer size 256 samples or greater, or smaller? Does changing either of these make a difference?

Does Sonar after September 2016 do the same?

To reach Cakewalk support directly, see "How do I report a problem with a Cakewalk product?". It says "please contact us at support@cakewalk.com."

-

If it's OneDrive, your network connection matters. Are you on Wi-Fi? Some Wi-Fi hardware can demand too much CPU at times. The latency monitor tool might be able to help zero in on the problem.

-

My first modem was 110 baud.

-

Also try enabling 64-bit double precision. Note, too, that an audio buffer size below 256 samples disables plug-in load balancing.

-

That "server busy" message comes from drag and drop: if you dragged from the desktop onto the program icon (double-clicking the icon may be the same, I'm not sure), it indicates that the program has not yet accepted the handoff after some time period. It may eventually.

So one question is: does Cakewalk normally take a long time to start up on your system? Or is your system especially busy, or just restarting, when you try to open it that way?

-

1 hour ago, Colin Nicholls said:

If we take away one of the DAWs you haven't chosen, does it make sense to switch to a different one?

Monty Hall / three doors (doahs) scenario?

-

5 minutes ago, Starship Krupa said:

It's an absolutely objectively proven fact that whenever someone posts this link to a forum, Joseph Fourier turns over in his grave. Even just the times I've done it would be enough for the other residents of the cemetery to call him "Whirlin' Joe."

Anyway, I'm not trying to prove that all DAW's do or don't sound the same bla bla bla. I was hoping that by saying so and by using deliberately imperfect, subjective testing methods that I would avoid that sort of thing, but whatever.

My goodness, how far off topic this thread has gone!?

-

Does the arpeggiator offer a sync-to-beat option? Or does it simply clock each sequence starting from the moment that key is pressed? If the latter, then the arp is just reflecting how far out of sync your keyboard timing is. For on-the-fly playing with an arp, it might be preferable to enable an auto-quantizer.
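To picture the difference between a free-running arp and a beat-synced one, here is a hypothetical Python sketch working in MIDI ticks. It assumes 960 ticks per quarter note and 16th-note arp steps; `quantize_tick` and `arp_steps` are illustrative names, not any arpeggiator's actual implementation.

```python
# Hypothetical sketch: MIDI timing in integer ticks, PPQ = 960 ticks per beat.
PPQ = 960


def quantize_tick(tick: int, grid: int, mode: str = "nearest") -> int:
    """Snap a tick position to a grid (grid=PPQ snaps to whole beats)."""
    if grid <= 0:
        raise ValueError("grid must be positive")
    lower = (tick // grid) * grid
    if tick == lower:
        return tick                      # already on the grid
    if mode == "next":
        return lower + grid              # wait for the next grid line
    if mode == "nearest":
        return lower + grid if 2 * (tick - lower) >= grid else lower
    raise ValueError(f"unknown mode: {mode}")


def arp_steps(start_tick: int, step_ticks: int, count: int) -> list[int]:
    """Tick positions of an arpeggio clocked from start_tick."""
    return [start_tick + i * step_ticks for i in range(count)]


if __name__ == "__main__":
    press = 1000                         # key pressed 40 ticks after beat 2
    sixteenth = PPQ // 4
    print("free-running:", arp_steps(press, sixteenth, 4))
    print("beat-synced: ", arp_steps(quantize_tick(press, PPQ), sixteenth, 4))
```

A key pressed 40 ticks late starts the free-running pattern at 1000, 1240, 1480, ..., so every step inherits the 40-tick error; snapping the start to the nearest beat first puts the steps back at 960, 1200, 1440, ....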

-

Here's a page of comparisons of some measurable qualities of how various DAWs do sample rate conversion (SRC). It's one proof that not all DAWs are alike. Of course SRC is only one part of audio processing, and depending on the project and other DAW optimizations, it may or may not play a part in outcomes.

SRC can be invoked when a project is rendered at a sample rate that is different from that of some of its source files. It is also used by some DAWs and plug-ins in an oversampling stage, to improve the results of processing that is prone to high-frequency aliasing.

Perhaps not all of the differences shown in the various graphs on that page are audible, though some of them look like they should be. The audio material used in the tests is not the least bit musical, but it convinces me that DAWs are different.
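Here is a rough Python illustration of why aliasing makes oversampling worthwhile. It assumes an ideal sampler with no anti-aliasing filter, and odd harmonics such as a saturation stage might generate from a 5 kHz tone; it does not model any particular DAW's SRC.

```python
def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Where a pure tone at f_hz lands after sampling at fs_hz with no
    anti-aliasing filter: frequencies fold back around fs/2."""
    f = f_hz % fs_hz
    return fs_hz - f if f > fs_hz / 2 else f


if __name__ == "__main__":
    # Odd harmonics of a 5 kHz tone, as a saturator might generate them.
    for harmonic in (5000 * k for k in (1, 3, 5, 7, 9)):
        base = alias_frequency(harmonic, 44100)
        over = alias_frequency(harmonic, 2 * 44100)
        print(f"{harmonic:6d} Hz -> {base:7.0f} Hz at 44.1 kHz, "
              f"{over:7.0f} Hz at 2x oversampling")
```

At 44.1 kHz, the 25 kHz harmonic folds back to 19.1 kHz and the 35 kHz one to 9.1 kHz, frequencies not harmonically related to the tone. At 2x oversampling they stay put, and even the 45 kHz harmonic folds only to 43.2 kHz, which a properly designed downsampling filter would remove on the way back to 44.1 kHz.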

Just for fun, you can note that some older versions of Sonar can be compared against the X3 version.

-

12 hours ago, Starship Krupa said:

I just did an experiment using 4 different DAW's.

...

According to Sound Forge, for instance, the integrated LUFS ranged from 17.12 to 20.02. Maximum true peak ranged from -0.76 to -3.90 dB. The length of the rendered audio files ranged from 18 seconds to 25 seconds, due to the way each DAW handles silent lead-ins and lead-outs that are selected.

...

If LUFS is calculated over the whole file, the renders with silence will come out different from the ones without, even if the sound part were identical.
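One detail worth knowing: integrated loudness as defined in ITU-R BS.1770 (which LUFS meters follow) is gated, so 400 ms blocks below -70 LUFS are discarded and pure digital silence should mostly drop out, though blocks that straddle the start or end of the sound still count. The simplified Python sketch below compares an ungated whole-file average with an absolutely gated one. It is not a BS.1770 meter (no K-weighting, no block overlap, no relative gate), and its -70 threshold is applied in plain dB rather than LUFS.

```python
import math


def block_loudness_db(samples, rate, gate_db=None, block_s=0.4):
    """Simplified whole-file loudness: mean square of 400 ms blocks, in dB.
    Not BS.1770: no K-weighting, no block overlap, no relative gate."""
    n = round(rate * block_s)
    powers = [sum(x * x for x in samples[i:i + n]) / n
              for i in range(0, len(samples) - n + 1, n)]
    if gate_db is not None:              # drop blocks at or below the gate
        powers = [p for p in powers if p > 0 and 10 * math.log10(p) > gate_db]
    if not powers:
        return float("-inf")
    return 10 * math.log10(sum(powers) / len(powers))


def sine(seconds, rate, freq=440.0, amp=0.5):
    """A plain test tone (hypothetical stand-in for the rendered audio)."""
    return [amp * math.sin(2 * math.pi * freq * i / rate)
            for i in range(int(seconds * rate))]


if __name__ == "__main__":
    rate = 8000
    tone = sine(2.0, rate)
    padded = [0.0] * (2 * rate) + tone + [0.0] * (2 * rate)
    print("no padding, ungated:  ", round(block_loudness_db(tone, rate), 2))
    print("with padding, ungated:", round(block_loudness_db(padded, rate), 2))
    print("with padding, gated:  ", round(block_loudness_db(padded, rate, -70), 2))
```

With 2 seconds of silence on each side, the ungated figure drops by about 4.8 dB (10·log10(3), since the sound now fills a third of the blocks), while the gated figure matches the unpadded one.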

-

On 3/26/2023 at 10:39 AM, ancjava said:

... moments of silence (moreover, those moments of silence also appear in the exported wav file)...

If the wav file is exported by a non-real-time render or bounce, the moments of silence are not likely to be caused by CPU exhaustion or anything about the sound card.

But what does cause them, I don't know.

Maybe: is there a master bus plug-in that is not authorized on your home system?

-

Did you get such a discount for your Teac 3340S? I still have mine, but it badly needs service. I think it was expensive, and it can still fetch high prices used. It can play back through the record heads, so you could lay down more tracks in sync with the existing ones. That only became obsolete when computer-based recording became readily available (Cakewalk 1.0?). Occasionally I use mine to capture old family recordings, such as ones from 1958 or so.

-

That cable looks really wrong anyway. The USB end won't connect to your computer. Is there a link for it?

-

You can try toggling 64-bit on/off, and plug-in load balancing on/off. And depending on where the rendering mistakes are, you could pre-bounce some tracks to reduce the load so you could then use real-time render, or conversely, to avoid rendering the misbehaving tracks in fast render. Or do all the tracks misbehave?

-

A misconfigured all-notes-off sequence?

-

There is a chance that if you look deep into the Kontakt piano, you might find an effect that is sensitive to tempo changes. In any case, can you tell which track or bus produces the hiccup?

-

I had some too. Reported it a few times way back. Also, try with/without 64-bit rendering.

-

Your wheel events are recorded on 5 different channels, so each one shows up 5 times in the piano roll view.
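Here is a hypothetical Python sketch of what is happening: the same wheel gesture, stored once per channel, produces one lane per channel when grouped by channel. The `(tick, channel, kind, value)` event format is made up for illustration and is not Cakewalk's internal representation.

```python
from collections import defaultdict


def lanes_by_channel(events, kind="wheel"):
    """Group events of one kind by MIDI channel, one lane per channel.
    Events are hypothetical (tick, channel, kind, value) tuples."""
    lanes = defaultdict(list)
    for tick, channel, ev_kind, value in events:
        if ev_kind == kind:
            lanes[channel].append((tick, value))
    return dict(lanes)


def keep_channel(events, channel):
    """Drop the duplicates by keeping only events on a single channel."""
    return [e for e in events if e[1] == channel]


if __name__ == "__main__":
    # One wheel gesture recorded on channels 1-5 at the same ticks.
    gesture = [(0, 0), (120, 4096), (240, 8191)]
    events = [(t, ch, "wheel", v) for ch in range(1, 6) for t, v in gesture]
    print(len(lanes_by_channel(events)), "lanes")
    print(len(lanes_by_channel(keep_channel(events, 1))), "lane")
```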

-

That Wdf01000.sys is part of a "driver framework", so it could be used by any non-class-compliant device on your computer. Since it is a "gaming" computer, there may be a built-in device that uses a driver tuned for gaming. Another possibility is that your anti-malware software has installed some alternate or stacked drivers, e.g. for keyboard and mouse (mine does), though wdf01000.sys itself is unlikely to come from anti-malware software.

So mainly, have a look at the driver stack for the keyboard and mouse: Control Panel -> Device Manager -> Keyboards (or Mice and other pointing devices) -> double-click or right-click the device -> Properties -> Driver -> Driver Details, and see whether wdf01000.sys is listed.

-

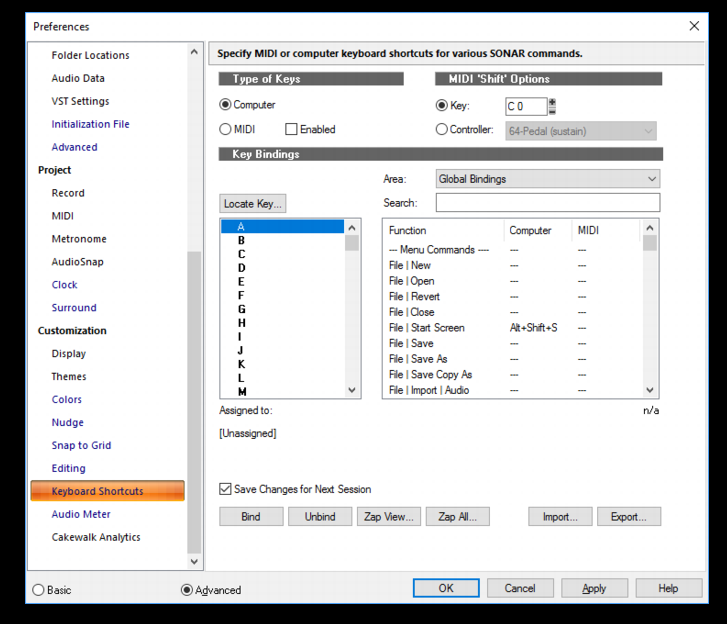

Check for the MIDI 'shift' key configuration.

Quote: You can designate a MIDI Key or Controller (usually a pedal) to act as a key binding shift key. That way you can require that the key binding shift key be depressed before any command is triggered, so you only lose one note or pedal from its regular function.

Check in the upper right of this "preferences" subwindow.

-

On 2/28/2023 at 3:04 AM, azslow3 said:

I have not done the test, but one "theoretical" note. Let's say you have a 100Hz sine wave. That means its "turnaround" is 10ms. If the interface input to output latency is 5ms, recording input and output simultaneously should produce a 180° phase shift. I mean the visual shift between waveforms depends on the frequency and the interface RTL.

This is the special case of "phase" corresponding to a polarity flip. It can only happen with a purely symmetrical wave, and only with a 180° time shift as determined by the period of that wave's fundamental frequency. So it would be possible to construct a case where transmitting a sine wave through a time delay of exactly 1/2 the wave's period would appear as a polarity reversal.

(edit: some pointless material removed)
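A short Python sketch of the point above, assuming ideal signals: a pure delay shifts phase by 360·f·delay degrees, so 5 ms is 180° at 100 Hz but a full 360° at 200 Hz. The check also shows that adding an even harmonic (which breaks half-wave symmetry) means the same delay no longer looks like a polarity flip. Function names are illustrative.

```python
import math


def phase_shift_deg(freq_hz: float, delay_s: float) -> float:
    """Phase shift (0-360 deg) that a pure time delay causes at one frequency."""
    return (360.0 * freq_hz * delay_s) % 360.0


def looks_like_polarity_flip(wave, delay_s, rate=48000, n=960, tol=1e-9):
    """True if wave delayed by delay_s equals -wave at every sampled instant."""
    return all(abs(wave(i / rate - delay_s) + wave(i / rate)) <= tol
               for i in range(n))


def sine_100(t):
    return math.sin(2 * math.pi * 100 * t)


def sine_plus_2nd(t):
    # Adding an even harmonic breaks half-wave symmetry.
    return sine_100(t) + 0.5 * math.sin(2 * math.pi * 200 * t)


if __name__ == "__main__":
    print(phase_shift_deg(100, 0.005))   # 100 Hz through a 5 ms delay
    print(phase_shift_deg(200, 0.005))   # 200 Hz through the same delay
    print(looks_like_polarity_flip(sine_100, 0.005))
    print(looks_like_polarity_flip(sine_plus_2nd, 0.005))
```

Only waves containing odd harmonics alone (a pure sine, or an ideal square wave) satisfy x(t - T/2) = -x(t), which is why the delayed-equals-inverted illusion is such a special case.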

-

The drivers for USB or DIN MIDI devices (DIN gear is usually connected through USB; only much older equipment was connected by serial or printer port) can be viewed in Device Manager by finding the item associated with that device and inspecting its driver stack. Most likely they all use the Windows "class compliant" drivers, but it's worth checking. Variations are more likely within the hardware dongle or, in your case, the synth, which, by the way, still has to pass its DIN output through another interface (which is also USB connected?).

Audio drivers, particularly ASIO, are the ones that suffer from some manufacturers' efforts.

-

Clips with audio playing showing as flat lines in track view

in Cakewalk by BandLab

Depending on the version of Sonar you have, there may be a zooming widget between track headers and track data. Check the manual, and see if your zoom is way down low.