
Posts posted by MediaGary

-

On 10/6/2020 at 6:14 AM, Will_Kaydo said:

.... For this to work successfully, you have to uninstall all previous instances of any Asio drivers installed on your machine. Some hardware Asio drivers clash with this. ...

Wow, thanks for that tip!

Because my test machine had been used for *many* tests along the way, I had to uninstall the ASIO drivers for the Klark-Teknik KT-USB, Midas MR18, Dante Via, and the Echo Pre8 before I started to get some forward momentum.

The initial testing over the network was ... uninspiring. I managed a best case of a 12-millisecond difference (~576 samples) between the networked audio and the 'native' Echo Pre8 audio arriving in Cakewalk. In comparison, when I had the Audient ADAT coming into Cakewalk along with the MADI audio from the Midas M32, merged via the RME HDSP 9632 and RME MADI drivers, the difference was around 0.4 milliseconds (~20 samples).
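The sample counts above follow directly from the session sample rate. As a minimal sketch, assuming a 48 kHz session (the rate the ~576-sample figure implies; the post doesn't state it), the conversion in both directions is:

```python
# Assumption: 48 kHz session rate, inferred from 12 ms ~ 576 samples.
def ms_to_samples(ms: float, sample_rate: int = 48_000) -> float:
    """Convert a time offset in milliseconds to a sample count."""
    return ms * sample_rate / 1000.0


def samples_to_ms(samples: float, sample_rate: int = 48_000) -> float:
    """Convert a sample count back to milliseconds."""
    return samples * 1000.0 / sample_rate


if __name__ == "__main__":
    print(ms_to_samples(12.0))  # networked audio vs. native Echo Pre8
    print(ms_to_samples(0.4))   # ADAT vs. MADI merged via the RME driver
```

At 48 kHz, 0.4 ms works out to 19.2 samples, which is where the "~20 samples" comes from.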

Since this is just an exercise in what is possible in networking with free products like AudioGridder, it's no big deal, but it certainly reveals how much work it'll take to eventually make this a production-friendly solution.

-

On 5/14/2019 at 4:02 AM, msmcleod said:

So it looks like there is a way to get ASIOLink to combine more than one ASIO interface into one:

- Open one instance of ASIOLink, and set the ASIO driver to be your second ASIO interface, setting the output to Network (just use the local network IP) & enable network.

- Open a second instance of ASIOLink, set the ASIO driver to be your primary ASIO interface, set the output to ASIOLink's ASIO driver and enable network input using your local network IP.

Thanks for showing us the way on this. I'm stumped right now:

I have ASIO Link Pro 2.4.4.2 running on two Win10 (Home and Pro) computers in my "Test Lab" before I migrate to the Production Studio...

- Computer-A = ASIO Echo Audio Firewire (Echo Pre8) with ASIO Link Pro and CbB

- Computer-B = ASIO RME HDSP 9652, clock source ADAT, with ADAT-1/ADAT-2 from two different Audient ASP800s.

- AES/EBU master clock is from a Midas M32 to an Aardvark Sync DA that uses 3x BNC 75-ohm coax word-clock connections (2x ASP800, 1x Echo Pre8)

- Network is 10GbE Ethernet ; Computer-A=192.168.1.121 Computer-B=192.168.1.123

I have verified that I have 'Received Audio Data' on 'Network In' coming from Comp-B to Comp-A. Network In on Comp-A is patched to 'ASIO Driver Out Mix'. Signals from both the ASP800s and the Echo Pre8 show up as expected.

When I start CbB, the first error message is that 'Analog Out 1-2' is in use by another application. The next error message is that all 16 outputs of the Echo Pre8 are unavailable and muted. Another problem is that within CbB 'Preferences>>Devices', all of the Echo Pre8 check boxes work normally, but all the ASIO Link Pro check boxes for 'Input Drivers' and 'Output Drivers' are greyed out and inoperable.

I can't shake the feeling that I've missed something obvious, so I'm appealing here for ideas and diagnostic directions to take.

-

On 9/11/2020 at 3:02 AM, lmu2002 said:

...The new pcie 4.0 offers even faster max read/write speeds (3GB-5GB per second), twice the speed of pcie 3.0. However, the full speed in both cases is available only on the bigger drives (1TB and over). And also, most likely only the first m.2 slot runs at the maximum speed (via cpu instead of chipset) which also favours the idea of just one disc.

To give a perspective regarding DAW environment, if you have a 5min song with 50 full length audio tracks in 192KHz/24bit, your project is less than 10GB in size. ...

I did an experiment a couple months ago that may be relevant to this discussion:

The tested device was a 500GB Gen4 NVMe drive running the ATTO Benchmark in both a direct-to-CPU PCIe slot and a switched PCIe slot. The result was a ~1-percent difference in throughput. Link is below:

https://www.gearslutz.com/board/showpost.php?p=14878090&postcount=1827

Also, outside of the space requirements for the audio, it's important to keep both the overall speed capability and the user experience in view. There was a pretty careful test done to compare the sample loading performance of an NVMe drive versus a conventional SATA SSD. The differences in the loading/usage experience are far smaller than the speed difference between the two technologies. I'd like to see other tests of this kind, particularly in the context of CbB. Link is below:

https://docs.google.com/document/d/1wL8XYGgd_O9fomMrK1EpSnZJeQwhVOAn91e82byj8s4/edit

Lastly, keep in mind that 50 mono tracks at 192kHz need less than 40MBytes/sec to play back.
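Both the project-size figure in the quote and the playback-rate figure come from the same arithmetic: tracks × sample rate × bytes per sample. A minimal sketch, assuming raw PCM payload only (no file headers, extra takes or edits) and decimal megabytes/gigabytes:

```python
def stream_rate_bytes_per_sec(tracks: int, sample_rate: int, bytes_per_sample: int) -> int:
    """Sustained read rate needed to stream `tracks` mono files at once."""
    return tracks * sample_rate * bytes_per_sample


def project_size_bytes(tracks: int, sample_rate: int, bytes_per_sample: int, seconds: float) -> float:
    """Raw audio payload of a project (assumption: no headers, takes or edits)."""
    return stream_rate_bytes_per_sec(tracks, sample_rate, bytes_per_sample) * seconds


if __name__ == "__main__":
    MB, GB = 1e6, 1e9  # decimal units, as drive vendors use
    for label, width in (("24-bit", 3), ("32-bit float", 4)):
        rate = stream_rate_bytes_per_sec(50, 192_000, width)
        size = project_size_bytes(50, 192_000, width, 5 * 60)
        print(f"{label}: {rate / MB:.1f} MB/s, {size / GB:.2f} GB for 5 minutes")
```

With 24-bit samples this gives 28.8 MB/s and 8.64 GB for the 5-minute, 50-track example; even stored as 32-bit float, the stream is 38.4 MB/s, still under 40 MB/s.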

-

Yes, I heartily recommend that you watch the Task Manager to see how your RAM usage goes.

While I was in "go-big-or-go-home" mode, I built my new AMD machine with 128GB RAM because I have ambitions of running my Vienna Ensemble Pro and some other very impressive libraries that I've purchased. Along with that, I have the intent to run AudioGridder in the AMD machine as a VST/VSTi server to my venerable 2010 Mac Pro.

So far, the high-water mark for my RAM usage has been when I had DaVinci Resolve and CbB open concurrently. Even that was a total of less than 12GB. The biggest single use of RAM is the 24GB allocated to PrimoCache. I gained experience with PrimoCache running in the Win10 partition of the Mac and was very pleased.

Even though the boot drive in the new machine is a Gen4 NVMe, and my Picture Cache for CbB lives there, on a whim I ran the PrimoCache trial and was amazed at this one thing: I opened a CbB project that had not been touched since Feb 2020, so the Picture Cache would need to be completely regenerated. The project is 34 mono tracks, 2 hours long. Because of PrimoCache, the Picture Cache was generated within 15 seconds. It was truly impressive to see all those CPU cores lit up, and to get a sense of what had to be some high-rate data movement.

You can't directly observe the data rate of disk I/O in PrimoCache, but a benchmark like ATTO shows peak speeds over 22GBytes/sec. That's not a typo... it's 22,000MBytes/sec writing, and around 17GBytes/sec peak reading. That's the benefit of the 'L1' RAM cache in PrimoCache. The latency of RAM is about 20-50 nanoseconds, so it's roughly 1/1000th the wait time of even an NVMe drive, which has latency numbers in the 20-100 microsecond range. The other lovely benefit of the PrimoCache implementation is that the 'L2' cache essentially makes the 'L1' RAM cache non-volatile, because it replicates the L1 RAM cache during operation and restores it during reboot. I have 200GB of L2 cache apportioned on a Gen3 NVMe.
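The "1/1000th" figure is a ratio of the two latency ranges. A quick sketch that pairs the endpoints of each range quoted above (the ranges themselves are the post's figures, not measurements made here):

```python
from itertools import product

RAM_NS = (20, 50)             # RAM latency range from the post, in nanoseconds
NVME_NS = (20_000, 100_000)   # NVMe latency range from the post (20-100 microseconds)


def latency_ratios(ram_ns, nvme_ns):
    """How many RAM waits fit into one NVMe wait, for every pairing of range endpoints."""
    return sorted(n / r for r, n in product(ram_ns, nvme_ns))


if __name__ == "__main__":
    print(latency_ratios(RAM_NS, NVME_NS))
```

The ratio spans roughly 400x to 5000x depending on which ends of the ranges you pair, so about 1000x is a reasonable middle-of-the-road figure.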

Unfortunately, that's the *only* circus trick that makes all that RAM worthwhile if I'm not yet building an orchestral soundtrack for the next blockbuster. (Will we ever go to the movies again?)

So, back to my original premise: spend some time watching the telemetry of the tools within Win10 so you don't over-invest. I think half of the RAM I have may wind up in the new machine that I will soon build for my son.

-

Cary, North Carolina.

-

Many people never get past the inflammatory headlines and thread titles, so I'm posting the descriptive article text that won't simply cut/paste from the source website.

The source website displays a large portion of a book called:

Ballroom, Boogie, Shimmy Sham, Shake

A Social and Popular Dance Reader

Edited by Julie Malnig (C) 2009

Pages displayed by permission of University of Illinois Press.

The specific chapter that mentions Cakewalk starts on page-55. That chapter is:

"Just Like Being at the Zoo" / Primitivity and Ragtime Dance

by Nadine George-Graves

I think the fair-use practices will allow me to type out and paste this portion of the chapter here. I'll remove it if there's any issue, but the web link is below the re-typed part that I've provided here. I added paragraph breaks for ease of reading, as the original page-56 has no breaks in it.

The Cakewalk was the most popular black social dance to influence the social dancing of the ragtime era. In fact, many early rags are Cakewalks, and the Cakewalk's syncopated rhythms directly led to ragtime music's style. The Cakewalk had its roots on the Southern plantation when slaves would get together and hold contests in which the winners would receive a cake often provided by the master. The style of dancing had many influences, including African competitive, Seminole dancing in which couples paraded solemnly, and European dancing and promenading that the slaves witnessed in the big house.

The Cakewalk was a mockery of these European styles, but when the slaves performed for the whites, their masters often mistook the playful derision for quaint approximations of their dances. In this couple dance, dancers stood side by side, linked arms at the elbow, leaned back and pranced about high-stepping and putting on airs (figure 3.1). The men usually wore suits with tails, top hats, canes, and bow ties. The women wore long dresses, heels, and often carried a parasol.

Cakewalks were a regular feature in minstrel shows and black vaudeville, and because of the influence these traveling shows had on popular dancing, the Cakewalk quickly made its way to Northern dance halls. The Cakewalk led the way for Southern black dances to gain popularity in the North, thereby playing a seminal role in the creation of ragtime dance.

-

I have run Sonar and now CbB on Win7/Bootcamp and then Win10 natively in my 2010 Mac Pro for many years without problems. There are lots of possibilities of what could be the root cause of your symptoms.

Start with the basics:

- Let us know what MacBook model year and configuration [RAM/SSD-space/USB interface and any converters] you're running

- Document which release/maintenance level of Win10 you're running

- Run LatencyMon to establish how real-time friendly the machine is

- See if there's a way to manage SpeedStep CPU clock changes (always a source of pain)

- See if the behavior is different with the native audio function

As you do that, the community here will chime in to help you navigate into calmer waters. There are plenty of insightful people here, so you came to the right place.

-

On 5/24/2020 at 4:00 AM, Bill Phillips said:

@MediaGary, are you using a 100% display scale factor?

Yes, that's 100%. It's only reasonable/possible to do that when the physical display is large enough.

I know I'm repeating myself, but I can't remember where/which forum: The pixels-per-inch (PPI) that my eyes can manage is around 81 PPI at my working distance of about 43 inches from the screen. That's the same PPI that my 28-inch HD-only screens had.
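Pixel density is the diagonal resolution in pixels divided by the diagonal size in inches. A minimal sketch, using the nominal (marketed) diagonal sizes of the screens mentioned here:

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal length in pixels over diagonal length in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


if __name__ == "__main__":
    print(f"28-inch 1920x1080: {ppi(1920, 1080, 28):.1f} PPI")
    print(f"55-inch 3840x2160: {ppi(3840, 2160, 55):.1f} PPI")
```

Both come out close to 80 PPI (about 78.7 and 80.1), consistent with the ~81 PPI figure above; marketed diagonals are rounded, so small differences are expected.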

I liked the 3-screen HD arrangement with track view in the center, VST's on the left and console/busses on the right. However, there was never enough horizontal space to manage the number of busses I typically use unless I went to the narrow view, which is inconvenient. The price of 4k screens held me back until I got a Craigslist deal on the LG OLED 55-inch screen.

On an unrelated note, check out how I changed the registry to show the title bar color of inactive windows as deep burgundy while the active window bars are medium green. It helps enormously when there are so many windows.

-

There are a couple of "moving parts" in the considerations regarding displays (monitors) and video cards.

First is your viewing distance. Based on that, the optimal size of the display is readily determined. As an example, I am comfortable working 42 inches away from my 55-inch diagonal display that sits behind my mixer. At my other working position, I'm comfortable 30 to 34 inches away from my 32-inch display. Both are at 4k (3840x2160).

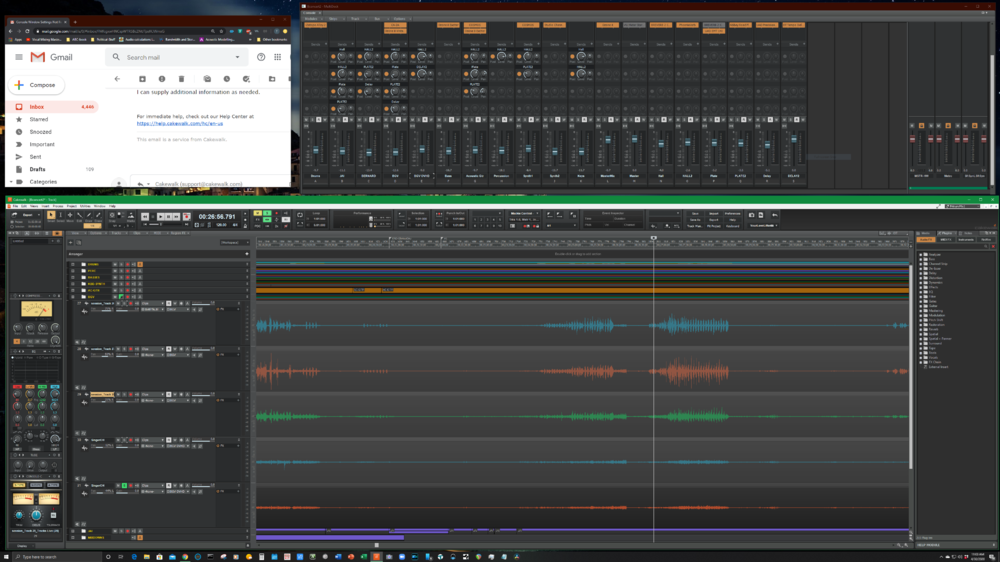

The top 25 percent of the 55-inch display is above my comfortable viewing angle, so in CbB I put my track view in the lower 75 percent and use the top for the console view of busses. I added a screenshot of my layout, although the area for the VST plugins is covered by a Gmail window.

The website linked below lets you play with calculated values, so you can use your current experience with the relationship between pixel density (PPI), resolution and viewing distance to make a more precise estimate of what would give you a happier computing experience.

[https://www.designcompaniesranked.com/resources/is-this-retina/]
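Calculators of this kind commonly assume that 20/20 vision resolves about one arcminute; beyond the distance where a single pixel subtends less than that, individual pixels stop being distinguishable. A sketch of that calculation, assuming the one-arcminute threshold (the linked site's exact formula may differ):

```python
import math

# Assumption: ~1 arcminute angular resolution, a common 20/20-vision figure.
ONE_ARCMINUTE_RAD = math.radians(1 / 60)


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch from resolution and nominal diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


def retina_distance_in(pixels_per_inch: float) -> float:
    """Viewing distance (inches) at which one pixel subtends one arcminute."""
    pixel_pitch_in = 1.0 / pixels_per_inch
    return pixel_pitch_in / math.tan(ONE_ARCMINUTE_RAD)


if __name__ == "__main__":
    for diag in (55, 32):
        density = ppi(3840, 2160, diag)
        print(f"{diag}-inch 4k: {density:.1f} PPI, pixels blend beyond ~{retina_distance_in(density):.1f} in")
```

For the 55-inch 4k display this comes out near 43 inches, close to the 42-inch working distance mentioned above; the 32-inch 4k display crosses the threshold at about 25 inches, so it looks seamless from 30 inches or more.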

If you use a TV as a computer display, the TV must support 4:4:4 chroma subsampling at 60Hz refresh at UHD to give satisfactory text. That was a big/rare deal a few years back, but is much more common on TVs today. Computer displays always do that just fine.

-

32 minutes ago, StudioNSFW said:

My understanding (Potientially inaccurate) is the CbB and other DAWS don't "Thread out" the way Video post does. ..

One example in CbB is doing Drum Replacement. I had to do it for the kick and snare of a 1.5 hour recording that had 30 mono channels.

For each run of Replacer, CbB loads all 32 logical cores at a total load of 72% very evenly. Mixdowns so far have maxed out at around 12% total load, again looking very even (ThreadSchedulingModel=2). There's some possibility that the UAD-2 (Oxide, Reflection Engine, LA-2A, EMT140) is affecting the max achievable CPU load, but everything else involved is either NVMe Gen4 or SATA-3 SSD. I've attached the power meter to my AMD machine, and will be getting to the video work soon enough.

Everything is being done at 48k; my round-trip latency through the Midas M32 with these four ASIO buffer sizes is:

- 32 samples = 3.02ms
- 64 samples = 4.35ms
- 128 samples = 7.02ms
- 256 samples = 12.35ms
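These four measurements fit a simple model: round-trip latency is roughly two buffer lengths (one on input, one on output) plus a fixed overhead. A least-squares sketch using only the numbers above; reading the intercept as converter/driver overhead is an assumption, not something the measurements prove:

```python
SAMPLE_RATE = 48_000
# (ASIO buffer size in samples, measured round-trip latency in ms) from the post
MEASURED = [(32, 3.02), (64, 4.35), (128, 7.02), (256, 12.35)]


def fit_line(points):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    # x = duration of one buffer in ms, y = measured round trip in ms
    pts = [(b * 1000 / SAMPLE_RATE, rtl) for b, rtl in MEASURED]
    slope, fixed_ms = fit_line(pts)
    print(f"buffers per round trip ~ {slope:.2f}, fixed overhead ~ {fixed_ms:.2f} ms")
```

The fit gives about 2.00 buffers per round trip and roughly 1.69 ms (about 81 samples at 48 kHz) of fixed overhead, so each doubling of the buffer size adds two more buffer lengths of delay.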

-

14 hours ago, Jon White said:

.., if the claim is true, those Ryzens a track to race on. They seem to use more power to get the job done, too, and create more heat, according to articles I've read.

...

Power consumption is proportional to total heat output. It's the concentration of watts per square mm that determines the temperature. Consider a 15-watt soldering iron as an example of low wattage and high temperature.

Ryzen processors certainly appear to draw "high" idle current, because their idle temperatures are higher than those of comparable new Intel processors. My new AMD 3950X system disturbingly idles around 51C (124F). However, the whole system is drawing around 105 watts from the wall socket in that condition. The physics lesson comes from my much older 12-core Mac Pro with dual 3.33GHz processors: it idled at around 39C (102F) while drawing 230 watts from the wall.

Video renders in my AMD machine push the processor to 82C (180F), while the older Mac never crossed the 70C (158F) mark even while drawing 480 watts from the wall during the render.

I have some challenging video renders to do this afternoon, and if ambition strikes, I'll hook up my power meter to the AMD machine to see what it draws at full cry. I expect that its maximum draw will still be less than the older machine's idle state, while offering over 3x the total processing capability. It won't be a perfect comparison, in that the Mac has a GTX 1070 (180W TDP) video card, while the AMD has a 5700 XT (225W TDP) video card, but it should be instructive nevertheless.

When comparing the newer 9th-generation Intel processors to the AMD Ryzen series, one has to be careful with the numbers, because each chipmaker develops its TDP ratings in wildly different ways. The typical outcome is that Intel power consumption is significantly higher at performance equal to the Ryzen series.

-

Okay, I'll make a try at understanding the root of the problem:

A good place to start is to run LatencyMon [www.resplendence.com] to check that your machine is happy with its combination of video card, network (wired/wireless) and other driver interactions.

-

Yesterday, I received the new 2.30 BIOS for my ASRock X570 Creator motherboard. The 2.30 isn't posted on the ASRock website, so I had to directly request it from ASRock Tech Support.

The 2.30 BIOS fixed the UAD-2 PCIe 'Failed to Start (Code 10)' problem. It's working fine now.

-

Perhaps something from the [vb-audio.com] product line will do what you want: Virtual Cable, Voicemeeter, or Voicemeeter Banana. Take a look.

-

I did a trial connection with a friend using the www.audiomovers.com VST plugin. I put it on a bus in CbB. The destination link is given to the person on the other side, who can then listen simply in a browser window. Our video connection was Zoom, so we used cut/paste in the Zoom chat window to pass the URL to him.

I've also configured vMix for a variety of uses (sort of like OBS) but never tried to integrate AudioMovers with it.

Give a fuller description of what you want to accomplish and I'll see if I can provide some useful input.

-

Any progress on this? I just ordered a Lynx AES16e-50 and would like to benefit from any experience out there.

-

Thanks for your interest in this topic.

It might be instructive for me to remove the RME MADI PCIe card, leaving only the UAD-2 card in place to see if it's properly detected. I doubt it would change anything but it is good diagnostic hygiene. I'll try that tonight.

I know for sure that:

- The UAD-2 card uses only one PCIe lane

- FireWire cards use only one PCIe lane

- The RME MADI card is PCIe 1.1...also uses only one lane; and the other PCIe x1 cards are likely PCIe 2.0 or lower.

- The PCIe x1 slots on the ASRock Creator are PCIe 2.0 specification

- The UAD-2 Solo, Duo and Quad cards negotiate addresses differently in the PCIe slot compared to the Octo, which reportedly works fine everywhere (AMD+Intel)

- The M.2_2 slot in this board will disappear from the boot menu candidates when Thunderbolt is enabled

- The M.2_2 slot can be concurrently used with the PCIe Slot-6 if Thunderbolt is disabled

As for other factors, I may get to them "soon". For now, the most interesting problem to solve is setting the "Record Latency Adjustment / Manual Offset" value in the Sync and Caching page of Preferences. The RME MADI card only knows its digital behavior, and its driver reports I/O Latency values to CbB. The driver can't see the 44-sample ADA delay through the M32 that I measured using the Oblique RTL Utility.

Since the Reported Input Latency (in samples) is about 1/2 the size of the Reported Output Latency, I'm wondering if I can determine the correct value of Manual Offset without having to do an experiment with click sounds and a microphone next to my monitor speaker (how crass).

I'll report back after I do the "UAD-2 only" test.

UPDATE:

The UAD-2-only test showed that the machine would not recognize the card, and the Device Manager even refused to open the dialog window. Moreover, the LG OLED UHD display showed disturbances and flickering. That implies something bad is happening on the PCIe buses, or within RAM timings, that's common to the slot that serves the AMD 5700 XT video card.

I took out the UAD-2, put the RME MADIface ExpressCard back in, and all is well. The next PCIe card going into the machine will be a Blackmagic Design BMDPCB95. It's a 2x SDI + 2x HDMI-HD PCIe x1 card for video capture. The hope/plan is to use it as a two-input 'webcam' card for Zoom sessions, fed by high-grade DSLR cameras instead of my wimpy Logitech C615C USB webcam.

UPDATE-2:

The UAD-2 now works because ASRock issued a new 2.30 BIOS for this X570 Creator motherboard. As of April 17th it's not on any of their webpages, so you have to request it directly from ASRock Tech Support.

As for the timing offset, the correct answer for an RME MADI ExpressCard through a Midas M32 is 45 samples. I recorded a metronome into a CbB track, then played it back through the mixer as an analog input, and checked the offset by zooming in to sample level on the tracks. The 44-sample figure was obtained from the Oblique RTL Utility; the 45-sample figure was obtained by directly measuring the sample-level alignment of the playback and recorded tracks.
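The by-eye alignment above can also be automated with cross-correlation. This is a swapped-in technique, not the method used here: slide the recorded track against the playback and keep the lag where they line up best. A brute-force sketch in plain Python; the function names are hypothetical, and a short synthetic impulse train stands in for the metronome recording:

```python
def best_lag(reference, recorded, max_lag):
    """Hypothetical helper: lag (in samples) at which `recorded` best matches `reference`.

    Brute-force cross-correlation: for each candidate lag, sum
    reference[i] * recorded[i + lag] and keep the lag with the largest sum.
    Assumes `recorded` is at least as long as `reference`.
    """
    best, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(
            reference[i] * recorded[i + lag]
            for i in range(len(reference) - lag)
        )
        if score > best_score:
            best, best_score = lag, score
    return best


def click_track(length, period, delay=0):
    """Hypothetical test signal: unit impulses every `period` samples, shifted by `delay`."""
    return [1.0 if i >= delay and (i - delay) % period == 0 else 0.0 for i in range(length)]


if __name__ == "__main__":
    played = click_track(4_800, 1_200)
    captured = click_track(4_800, 1_200, delay=45)  # simulate a 45-sample path delay
    print(best_lag(played, captured, max_lag=200))
```

With real recordings you'd correlate a short window around one click rather than the whole track; a polarity-inverted path would show up as a strongly negative peak, which this sketch ignores.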

-

7 hours ago, Kevin Perry said:

I'd read elsewhere about some Ryzen BIOSes enabling (this is from memory) 10 bit PCIe IDs which cause issues with some devices. See if you have something like that in the BIOS you can change?

Thanks for the tip!

The BIOS parameter is Advanced AMD CBS / NBIO Common Options / PCIe Ten Bit Tag Support

Its default status is 'Auto = Disabled'. I changed it to 'Enabled'. There was no change in the symptom... still Code 10, Device not Started (UAD2Pcie).

I'll see if I care enough when I get up tomorrow to send an error report to ASRock tech support. They may welcome the distraction, or deem me insular and self-involved to bring up such an issue at a time like this.

-

1 hour ago, Kevin Walsh said:

I've not run into any problems with interfaces with the Ryzen system at all, sorry to hear about your UAD device. I assume you have updated your BIOS, chipset drivers and obtained the latest UAD device drivers?

I've tested a rather long-in-the-tooth MOTU 8pre connected to a PCIE Firewire card with great results. I recently moved an Audient ID22 to the primary interface role, also with very good results.

Yes, the latest UAD 9.11 download, the latest 2.10 BIOS for the ASRock Creator, and the latest AMD chipset driver [2.03.12.0657] dated 3/19/2020 are all in place.

A few months ago I had attempted to use the UAD-2 across my 10GbE network using Vienna Ensemble Pro v7. I got a few things going, but it was clumsy. I decided then to begin a slow walk away from the UAD-2 stuff.

I had consistently used only the EMT140, DreamVerb, Reflection Engine, LA-2A, and Oxide from among the UAD-2 stuff. I will now substitute the Waves Abbey Road Plate, PhoenixVerb and Valhalla Vintage Verb, Cakewalk CA-2A, and my collection of tape emulator products that include Nomad Factory Magnetic-II, ToneBoosters ReelBus, Abbey J37, iZotope Vintage Tape, and of course the wonderfully useful Overloud tape emulator in the ProChannel of CbB. It'll be fine.

-

I just updated my signature, having substantially migrated from my 12-core 2010 Mac Pro to my newly-built AMD 16-core 3950X. I'll try my first serious CbB mixing today. The machine has:

- AMD 3950X / 128GB RAM (Corsair Vengeance LPX 3200-C16) ASRock X570 Creator

- Sabrent Gen4 1TB NVMe, Sabrent Gen3 2TB NVMe, 2TB Crucial MX500

- 10GbE link to external servers : Media server with 4TB SSD RAID-0 and 16TB RAID-0 HDD. Redundant Server with two 12TB RAID HDD's.

- Cooler Master HAF XB EVO case

All the Universal Audio software installs fine, and the UAD-2 Duo PCIe is recognized in the Device Manager, but it fails with a Code-10 (failed to start). So for now, the UAD-2 stays back in the Mac. I have no Thunderbolt devices to test. My M32 mixer/interface is connected via an RME MADI ExpressCard.

If anything unexpected happens with Cakewalk or Reaper or Studio One in the new machine that might be relevant to this CbB community, I'll update here.

-

To @Stavv ... since this discussion has revived:

- Is your 3840x2160 set at 100% or some other number?

- What is the diagonal size of your display?

-

13 hours ago, John Hamblen said:

I am having the same issue with the midas m32 with cakewalk. also when i am in record mode with the midas it wont play the tracks already recorded...

You'll probably have to start a new thread to discuss this issue. I use an M32 in my studio, so I can offer help. It certainly seems like a routing issue.

-

I wonder what the certification means as a practical/operational matter. For example:

- some things will hot plug and some won't in the non-certified implementations

- works in Windows but has hackintosh limitations for the non-certified

- some kind of per-board manufacturing fee must be paid to Intel despite the new royalty-free status

- all USB and SSD peripheral stuff works, but DisplayPort stuff doesn't work

Any ideas about the differences?

ODeus ASIO Link now available free

in Deals


Thanks to @msmcleod and to @Will_Kaydo for your interest in this topic. I may have to draw a proper picture of my network connectivity, but the Echo Pre8 and the RME 9652 are in separate machines across a 10GbE network, so I don't get the benefit of a loopback/in-computer timing.

RME 9652 PCI-----Computer1-----10GbitEthernet-----Computer2-----FireWire----Echo Pre8

The 10Gbit cards are Solarflare 5122F's that have fiber optic links through a MikroTik [CRS309-1G-8S+IN] LAN switch. Solarflare cards have sophisticated drivers with TCP/IP Offload capabilities. That may or may not contribute to what I'm seeing here. Computer1 and Computer2 are both HP Z220 workstation computers. Comp1 has an 8GB i5-3470 and its primary job is as a server. Comp2 is a 16GB i7-3770 whose primary job is as an Administration machine.

Those two machines are participating and showing the 12-millisecond arrival difference between the Firewire and ASIO Link network signals.

The picture attached below is the topology of the two studio computers when the MADI and ADAT connections were merged via the RME driver in the AMD/Win10 computer that's in the lower left side of the diagram. This combination achieved a 0.4-millisecond difference. The computer in the lower right is a 2010 Mac Pro now running Catalina. Since that diagram was made, I've temporarily been running the M32 via good old USB 2.0.

The fact that ASIO Link Pro works at all may be enough, since the network-sourced audio inputs would at best be supplemental or experimental. However, if the functions of ASIO Link Pro and AudioGridder ever showed up within the same product, like a poor-man's point-to-point Dante with the added sophistication of horizontal/remote VST processing, that would be a game-changing event in audio studio solutions. [hint, hint]