Posts by MediaGary

-

I know I am reviving an old thread, but lately this has been top-of-mind:

I would like to have a keystroke shortcut that performs the ProChannel EQ-Flyout function. If there is already a way to do this, I'd love to know.

-

Yup... Contour Shuttle Pro V2 for many years.

I have it mapped for common Cakewalk functions, and have mapped identical buttons for matching functions in Reaper, Logic Pro X, and to some extent in DaVinci Resolve. It really makes me smoother and more confident in jumping around between products.

I glued a 1-inch-high skirted aluminum knob to the jog wheel, and have it mapped for up/down values in plugins. It's notchy, but it still lets me fly the cursor over the plugin GUI with my right hand and change the value of the GUI knob with the Contour in my left hand.

-

Yes!

I use Mix Recall in pretty much every studio session because multiple songs are in the same project, and in most concert recordings because the stage setup changes demand different effects combos.

It's a terrific feature. Thanks, Bakers.

-

This is decimal arithmetic, so it lacks the full precision of binary values, but it will get you in the right ballpark.

48000 samples/s x 3 bytes (24-bit) = 144kB/s for each track.

32 x 144kB/s = 4608kB/s for 32 tracks.

4GB = 4,000,000kB, so 4,000,000kB / 4608kB/s

~ 868 seconds ~ 14.5 minutes per 4GB segment.

EDIT: Feeling ambitious...

Binary arithmetic: 4GB is really 4GiB = 4,294,967,296 bytes, and at 4,608,000 bytes/s that's ~932 seconds, or ~15.5 minutes per 32-track segment.

Therefore you'd need roughly 4 segments/hr x 3 hr = 12 segments (48GB).
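For anyone who wants to redo this for a different track count or card size, here's a minimal Python sketch of the same arithmetic. It assumes 48 kHz, 24-bit (3-byte) samples and treats each 4GB segment as pure audio data, ignoring WAV header overhead and any margin the X-Live leaves below the file-size limit; the function names are just illustrative.

```python
import math

SAMPLE_RATE_HZ = 48_000   # samples per second per track
BYTES_PER_SAMPLE = 3      # 24-bit PCM


def segment_minutes(segment_bytes: int, tracks: int) -> float:
    """Minutes of multitrack audio that fit in one file segment (audio data only)."""
    bytes_per_second = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * tracks
    return segment_bytes / bytes_per_second / 60


def segments_needed(hours: float, segment_bytes: int, tracks: int) -> int:
    """Whole segments needed to cover a recording of the given length."""
    return math.ceil(hours * 60 / segment_minutes(segment_bytes, tracks))


DECIMAL_4GB = 4_000_000_000   # 4 GB, decimal
BINARY_4GB = 4 * 1024**3      # 4 GiB = 4,294,967,296 bytes

print(f"{segment_minutes(DECIMAL_4GB, 32):.2f} min")   # 14.47 min
print(f"{segment_minutes(BINARY_4GB, 32):.2f} min")    # 15.53 min
print(segments_needed(3, BINARY_4GB, 32))               # 12
```

With the decimal 4GB figure, the same 3 hours would need 13 segments, so budgeting a spare segment doesn't hurt.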

The last time I used this X-Live recording process, I think any SD card over 32GB had to be externally formatted, but perhaps exFAT is supported within the X-Live now... dunno. I only mention it because (obviously) you'd have to go for relay-recording between two 32GB SD cards to meet your 3-hour requirement.

HTH

-

Yes, the import and breakout/explosion work fine. As expected, you have to carefully position/concatenate the 4GB segments to avoid clicks/dropouts at the edges of the files.

But yes, when I was doing remote recordings, it was my standard procedure and Cakewalk always worked with 8, 16, and 32 tracks.
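If you line the segments up by hand, one safe approach is to butt each one exactly against the end of the previous one, counted in sample frames rather than placed by eye. A minimal sketch, assuming you know the size of each segment's audio data in bytes and that the files are 24-bit; the helper names and the example sizes are hypothetical.

```python
def frames_in_segment(data_bytes: int, channels: int, bytes_per_sample: int = 3) -> int:
    """Sample frames in a segment, from the size of its audio data chunk."""
    frame_size = channels * bytes_per_sample
    if data_bytes % frame_size:
        raise ValueError("data size is not a whole number of frames")
    return data_bytes // frame_size


def segment_start_frames(segment_frames: list[int]) -> list[int]:
    """Timeline start position (in frames) for each segment placed end to end."""
    starts, position = [], 0
    for frames in segment_frames:
        starts.append(position)
        position += frames
    return starts


# Hypothetical example: three segments of a 32-track, 24-bit recording.
sizes = [4_294_963_200, 4_294_963_200, 1_000_000_032]
frames = [frames_in_segment(size, channels=32) for size in sizes]
print(frames)                        # [44739200, 44739200, 10416667]
print(segment_start_frames(frames))  # [0, 44739200, 89478400]
```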

-

You've certainly piqued my curiosity:

I have used a Midas M32 (full-size) in my studio since 2016 or perhaps a little earlier. For most of that time it was on the normal X-USB interface/expansion, and I have since migrated to an X-ADAT expansion driven by RME HDSP 9652s. When using USB, I always used USB2-only ports and set Cakewalk to 24-bit recording, never 32-bit. [That's for the hardware capture. Track processing at 32-bit is left as-is.] Most of the time I ran X-USB at a 256-sample buffer. I now run most of the time at a 64-sample buffer with the RME.
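For reference, here's how those buffer sizes translate into time. A quick sketch, assuming a 48 kHz session (the sample rate isn't stated above); it covers one buffer only, not the full round trip, which adds converter and driver safety buffers on top.

```python
def buffer_ms(buffer_samples: int, sample_rate_hz: int = 48_000) -> float:
    """Duration of one audio buffer in milliseconds."""
    return 1000 * buffer_samples / sample_rate_hz


for size in (256, 64):
    print(f"{size}-sample buffer: {buffer_ms(size):.2f} ms")
# 256-sample buffer: 5.33 ms
# 64-sample buffer: 1.33 ms
```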

For a while, concert events were recorded via a Behringer X32 Core using an X-Live card with a 'safety' concurrent recording into a Lenovo laptop. Again, the drill was ASIO, USB2-only and 24-bit. There has never been a problem with either the outside events or the studio M32.

It should at least be encouraging that your setup isn't unusual and should work just fine. Checking the LatencyMon statistics is important to establish how well-behaved your system is. Also, checking for a mismatched sample rate with the native motherboard sound thingie is good advice. I generally leave the Windows sound scheme set to 'No Sounds'.

That's about all the in-the-blind advice I can offer. Stay encouraged, and let's hope we can quickly get this sorted.

-

On 4/24/2021 at 12:39 PM, RICHARD HUTCHINS said:

...So...I record the various tracks and get the signal on these to around -18db on the VU, all okay so far. I use my Steinberg interface to adjust the gain. But my vocals are almost imperceptible when I record them at this level, maybe peaking at -12db, average 18. ...

Just to be clear, are you looking at a VU meter or the Peak/RMS meters of the track?

I ask because the "standard" calibration of a VU is that 0VU is equal to -18dBFS RMS. For recording, it's routine to target -18dBFS average with -12dBFS or -6dBFS peaks, but you're in for a world of weak signals if you target -18dB on the VU meter, since that's about -36dBFS. Let us know for sure.
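To put numbers on that: with the 0VU = -18dBFS calibration, a VU reading maps to dBFS by adding the -18dB reference. A small sketch of that conversion plus the linear amplitude at each level; the function names are illustrative, and it assumes the -18dBFS reference (some gear is calibrated to -20dBFS instead).

```python
def vu_to_dbfs(vu_reading: float, reference_dbfs: float = -18.0) -> float:
    """Convert a VU reading to dBFS, given the dBFS level that reads 0VU."""
    return vu_reading + reference_dbfs


def dbfs_to_amplitude(dbfs: float) -> float:
    """Linear amplitude relative to full scale (1.0 = 0dBFS)."""
    return 10 ** (dbfs / 20)


for vu in (0, -18):
    level = vu_to_dbfs(vu)
    print(f"{vu:>4} VU -> {level:.0f} dBFS ({dbfs_to_amplitude(level):.3f} of full scale)")
```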

-

On 3/18/2021 at 4:44 PM, Heb Gnawd said:

SOLVED! ... I wanted to update this post in case anyone in the future finds themselves where I was. ... As soon as I replaced these "hybrid" drives with real SSD drives the problem disappeared. This was probably a mistake not many of you would have made ....

Truly, it's wonderful that you got to the bottom of this issue. I wish I had seen this thread earlier, as I could have saved you some heartache.

Back in 2015, I had a plan to add two more SSHDs (a reasonable term for a hybrid HDD) to the two that I already had in order to build a very interesting RAID array. Things went badly *very* quickly, and that flavor of the project was abandoned. It's documented in the 2nd article of a 5-article series on my website. [ https://www.tedlandstudio.com/torpedo-at-the-dock ]

The SSD cache in a hybrid drive isn't especially effective: it's small, so it's quickly overrun and the drive falls back to HDD speed, and it isn't well-matched to the media-based workloads that audio and video create. Since then, I've tried quite a few hybrid storage combinations, and at this point I use a limited-horizon approach by using PrimoCache.

You can read my summary description of PrimoCache, and the way that I use it in two posts that I did on another forum: [ https://www.gearslutz.com/board/showpost.php?p=15353007&postcount=13648 ]

[ https://www.gearslutz.com/board/showpost.php?p=15354350&postcount=13655 ]
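A toy simulation shows why a small cache struggles with media work. It uses a plain LRU (least-recently-used) cache, which is an assumption for illustration only; it is not how any particular SSHD firmware or PrimoCache actually decides what to keep. Re-reading a small working set hits almost every time, while streaming through a file bigger than the cache evicts each block before it's ever reused.

```python
from collections import OrderedDict


def lru_hit_rate(accesses: list[int], capacity: int) -> float:
    """Fraction of block reads served from a least-recently-used cache."""
    cache = OrderedDict()
    hits = 0
    for block in accesses:
        if block in cache:
            hits += 1
            cache.move_to_end(block)        # mark as most recently used
        else:
            cache[block] = None
            if len(cache) > capacity:
                cache.popitem(last=False)   # evict least recently used
    return hits / len(accesses)


CACHE_BLOCKS = 8                        # hypothetical tiny cache
small_working_set = [0, 1, 2, 3] * 50   # 200 reads of the same 4 blocks
media_stream = list(range(100)) * 2     # two passes over a 100-block file

print(f"small working set: {lru_hit_rate(small_working_set, CACHE_BLOCKS):.0%}")  # 98%
print(f"media stream:      {lru_hit_rate(media_stream, CACHE_BLOCKS):.0%}")       # 0%
```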

Thanks, OP, for closing the loop and updating us with the solution!

-

About the curve...

I had the 55-inch (diagonal) LG OLED C6 curved screen on my DAWs for about two years. The curve on that one peaks at about 1.5 inches deep across its 48-inch width. If it were two flat panels in a 'V' arrangement, that works out to about a 3.4-degree angle.
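The V-shaped approximation is easy to check: split the width in half, and each half tilts by atan(depth / (width / 2)). A minimal sketch, treating the 48-inch figure as the straight-line width and the curve as two flat halves (the helper names are illustrative). With exactly 1.5 inches of depth it comes out nearer 3.6 degrees, and a 3.4-degree tilt corresponds to about 1.4 inches; either way it's a very mild bend.

```python
import math


def v_angle_degrees(depth_in: float, width_in: float) -> float:
    """Tilt of each half of a 'V' spanning width_in and depth_in deep at the center."""
    return math.degrees(math.atan(depth_in / (width_in / 2)))


def depth_for_angle(angle_deg: float, width_in: float) -> float:
    """Center depth that produces the given tilt of each half."""
    return (width_in / 2) * math.tan(math.radians(angle_deg))


print(f"{v_angle_degrees(1.5, 48):.2f} degrees")   # 3.58 degrees
print(f"{depth_for_angle(3.4, 48):.2f} inches")    # 1.43 inches
```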

One advantage of curved screens is that they reduce the color/contrast falloff that's typical of IPS and VA panels when viewed at oblique angles. OLED panels don't have that falloff issue, so the curve is wasted in that respect. I specifically chose the Samsung Q80T QLED for its contrast consistency at oblique viewing angles. I would still rather have kept the flat LG CX were it not for its too-aggressive brightness limiter. I have since learned that there is a way to overcome the CX ABL by delving into the service menus. Oh well...

Once I went back from curved to flat, my brain had to re-adjust! My eyes/brain had developed a habit of making the curved top edges of app windows look 'normal' to me. When I went flat, they looked *wrong* for two days until I re-adjusted.

In terms of sound, there was no difference to me in the interaction between the speakers and the screen. That's not surprising, considering how mild the curvature is.

-

3 hours ago, musikman1 said:

I'm not big on computer terminology, but I've noticed that some people will call a 6 core - 12 thread processor just "12 core", and an 8 core - 16 thread processor just "16 core". Am I to assume that for example a 6 core - 12 thread is really a 12 core?

Watch out for people who get lazy with terminology in the world of computers! It's deadly.

Intel Hyper-Threading and AMD Simultaneous Multithreading (SMT) are both technologies that provide two threads per physical CPU core; hence the 6/12 or 8/16, etc. terminology. So a 6-core/12-thread processor is a 6-core processor, not a 12-core one.
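In other words, logical threads = physical cores x threads per core. A minimal Python sketch; `os.cpu_count()` is the standard-library call and reports logical processors (threads), not physical cores. Getting the physical count needs something like the third-party psutil package, so that part is optional, and the helper names are illustrative.

```python
import os


def logical_threads(physical_cores: int, threads_per_core: int = 2) -> int:
    """Logical processors exposed with SMT/Hyper-Threading enabled."""
    return physical_cores * threads_per_core


def describe_cpu(physical_cores: int, threads_per_core: int = 2) -> str:
    return f"{physical_cores} cores / {logical_threads(physical_cores, threads_per_core)} threads"


print(describe_cpu(6))   # 6 cores / 12 threads
print(describe_cpu(8))   # 8 cores / 16 threads

# On this machine: os.cpu_count() counts logical processors (threads).
print("logical processors:", os.cpu_count())
try:
    import psutil  # third-party, optional
    print("physical cores:", psutil.cpu_count(logical=False))
except ImportError:
    print("install psutil to see the physical core count")
```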

-

This has come up before on this forum. I'm providing two links (below) to my posts on the topic.

I used a curved 55-inch LG OLED display for about two years, and have since gone back to a flat LED display of the same size, even after testing the new LG CX OLED. I found that the auto-dimming, ABL (Automatic Brightness Limiter) and self-protection algorithms were bugging me.

-

I got a mental cramp as I tried to translate your description into a topology diagram. If you'd draw one for me, I can be more helpful.

Nevertheless, it seems to me that CbB can't see the reported latency of two "layers" of drivers, so its 'Recording Offset' setting needs to be changed to achieve alignment.

Get an out-to-in jumper cable on the out-of-time recording input, and run a pre-recorded click track to it. Record the input click track coming through the loopback and adjust the Recording Offset value to make it all happy. That's the best I can offer without a clearer understanding of what's connected to what.
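Once you have the loopback recording, the offset doesn't have to be eyeballed. A rough sketch of one way to measure it: find the first click in the original track and in the recorded copy (the first sample above a threshold) and subtract. It assumes both are mono lists of float samples at the same rate that start at the same timeline position; the threshold and function names are illustrative, and which sign CbB expects in its offset setting is something to confirm in the Cakewalk documentation.

```python
def first_onset(samples: list[float], threshold: float = 0.5) -> int:
    """Index of the first sample whose magnitude reaches the threshold."""
    for index, value in enumerate(samples):
        if abs(value) >= threshold:
            return index
    raise ValueError("no click found above threshold")


def loopback_offset(reference: list[float], recorded: list[float],
                    threshold: float = 0.5) -> int:
    """Samples by which the recorded click lags (+) or leads (-) the reference."""
    return first_onset(recorded, threshold) - first_onset(reference, threshold)


def samples_to_ms(samples: int, sample_rate_hz: int = 48_000) -> float:
    return 1000 * samples / sample_rate_hz


# Synthetic example: a click at sample 1000 comes back 240 samples late.
reference = [0.0] * 4800
recorded = [0.0] * 4800
reference[1000] = 1.0
recorded[1240] = 0.8
offset = loopback_offset(reference, recorded)
print(offset, f"{samples_to_ms(offset):.2f} ms")   # 240 5.00 ms
```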

-

1 hour ago, Bruno de Souza Lino said:

You mean, using all the stuff that doesn't come with CbB but comes with SONAR? How would people that don't have access to these even test it on their systems?

No, I actually didn't think it through to check whether the LP EQ was standard or not. I just remember it being a 'heavy' plugin.

My intent is for the test project to be based on an unadorned/vanilla version of CbB so that everyone can participate and we can have valid comparisons between machine configurations.

-

On 1/28/2021 at 9:02 AM, Noel Borthwick said:

Could any of you folks running high core count PC’s please try the latest hot fix? ESP those running AMD Ryzen systems.

There are some optimizations and fixes for MMCSS that might improve performance. If nothing I just want to make sure that it doesn't cause any issues.

Can you offer a standardized CbB project that we can all use for comparison? Perhaps a beastly combination of linear phase EQs, synths/patterns, and other heavy stuff to stress the performance aspects that you'd like to explore.

-

Seems to me your best first move is to install an AMD Radeon video card. Nothing exotic is required; it's just a way to get DPC latency-friendly behavior from the drivers, since the Nvidia card you have now is a major hindrance to *any* good audio experience.

Don't get me wrong, I run an Nvidia card in my AMD-based rig right now, and everything runs great. However, something older/cheaper might be easier to find, and the AMD Radeon world is a good place to start.

-

Latency of USB ports is *practically* unrelated to their speed in Gbit/sec.

You will find that USB 2.0, 3.1, and 3.2 in all their flavors have latency figures that are all clustered around what the driver suite is able to accomplish. Each vendor has its own device driver implementation, and that is a strong predictor of what you'll experience/measure. Also, as you know, a USB 3.x port will 'downshift' to run at USB 2.0 speeds when presented with a USB 2.0 device.

Also keep in mind that PCI is less than 1.1Gbit/sec, and that PCIe audio interfaces tend to use just one PCIe lane. Usually that's a PCIe 1.1 lane, so 250MByte/sec, or a net of 2Gbit/sec (payload after 8b/10b decoding), is a common performance figure. However, both PCIe and PCI have vastly different and more efficient driver implementations, and are therefore able to achieve lower latency than USB.
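The bandwidth figures are easy to reproduce, and putting a multichannel audio stream next to them shows why raw speed isn't the bottleneck. A sketch, assuming classic 32-bit/33MHz PCI, one PCIe 1.1 lane (2.5 GT/s with 8b/10b encoding) in one direction, and a hypothetical 32-channel, 24-bit, 48 kHz stream with no protocol overhead:

```python
PCI_BITS = 32 * (100e6 / 3)          # 32-bit bus at 33.33 MHz
PCIE11_LANE_BITS = 2.5e9 * 8 / 10    # 2.5 GT/s, 8b/10b encoding -> payload bits/s
AUDIO_BITS = 32 * 48_000 * 24        # 32 channels x 48 kHz x 24-bit

for name, bits_per_s in [("PCI", PCI_BITS),
                         ("PCIe 1.1 x1", PCIE11_LANE_BITS),
                         ("32ch/48k/24-bit audio", AUDIO_BITS)]:
    print(f"{name:>22}: {bits_per_s / 1e9:.3f} Gbit/s = {bits_per_s / 8e6:.1f} MByte/s")
```

Even old PCI has roughly 29 times the bandwidth that stream needs, so the latency differences come down to drivers rather than raw throughput.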

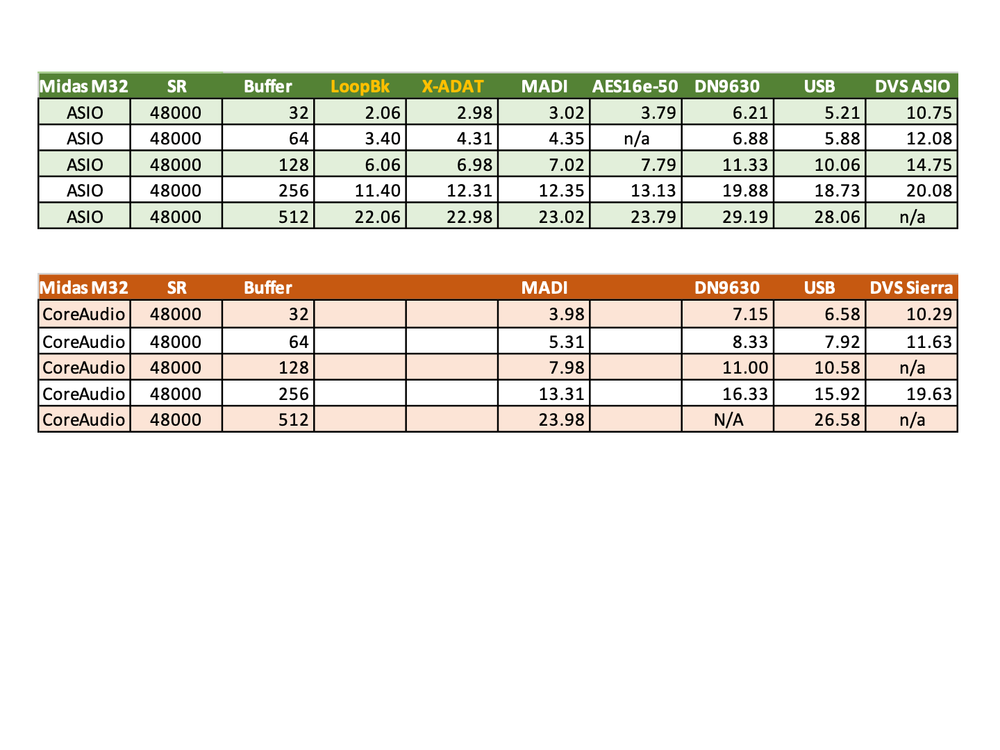

I've attached a chart I made a few weeks ago that summarizes the round-trip latency of all of the attachment methods that I've used with my Midas M32 and Behringer X32 mixers, in both Win10 and macOS. To help with the nomenclature of the chart: DVS is Dante Virtual Soundcard, AES16e-50 is a Lynx PCIe card, the DN9630 is a USB 2.0-to-AES50 adapter, the MADI interface is an RME PCIe ExpressCard, and LoopBk was a direct ADAT-out-to-ADAT-in loopback on an RME HDSP 9652 connected through an external box to a PCIe slot. Lastly, X-ADAT is the way that RME 9652 card is connected through my Midas M32, which serves as the center of my studio.

-

Hey, @GreenLight

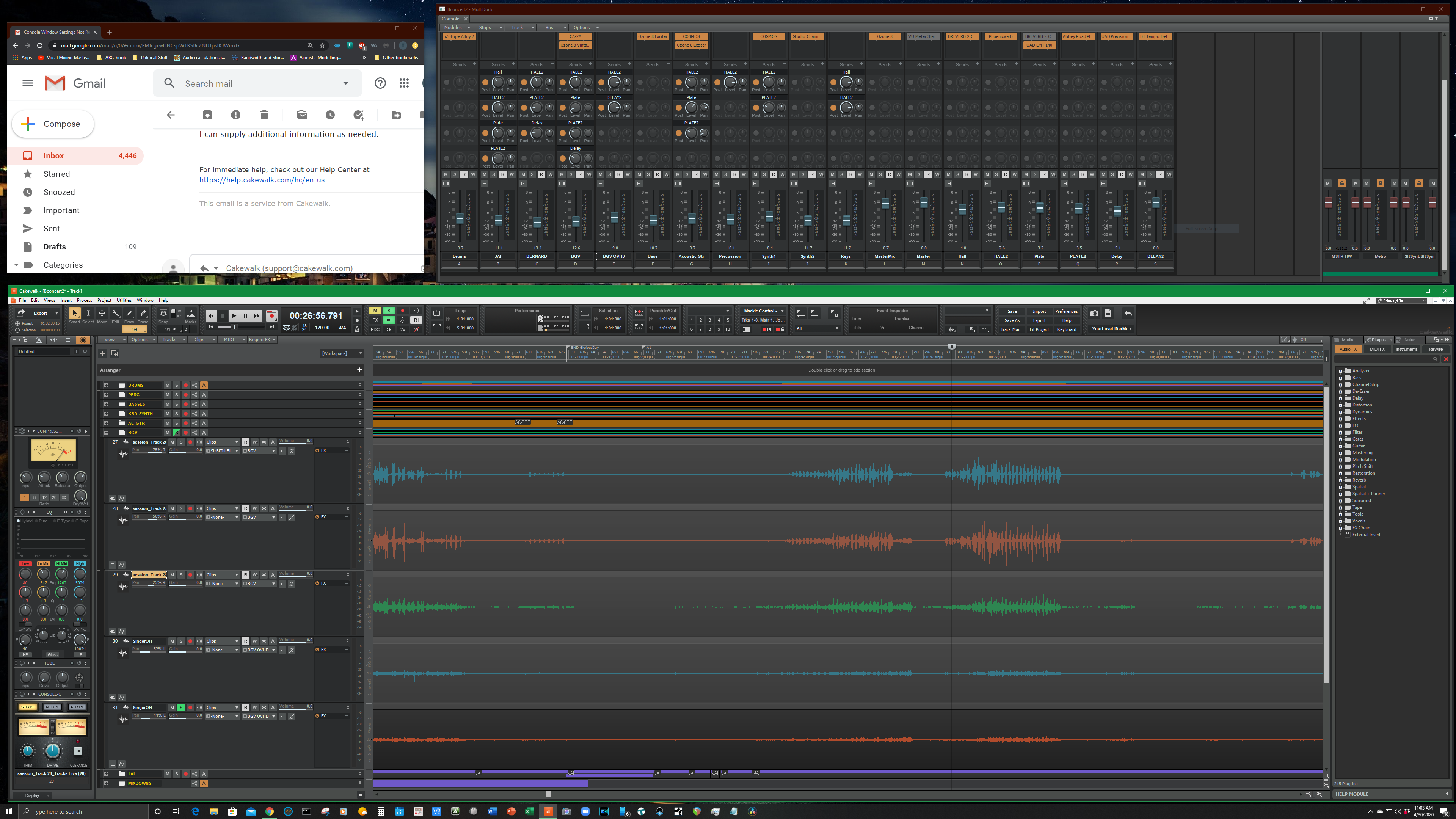

I have a 4k screen, and here's a screenshot of how I have it organized when I'm running Cakewalk. I run CbB at 100% scaling so nothing is magnified. That requires a pretty large screen so that all of the little numbers/icons in the Control Bar are readable.

Use a website called www.isthisretina.com to compare and calculate reasonable viewing distances. If your 4:3 screen is 1280x960, then it's about 84 pixels per inch with a "retina distance" of 41 inches. To maintain the same 84 PPI, a 52-inch diagonal screen would be required at 4k. To simply have a reasonable 35-inch viewing distance, my calculations show that a 45-inch diagonal screen would be required to run at 4k/100%. Pick the size that works for you.
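Here's the same calculation in code, for anyone who wants to try other sizes. It assumes the usual "retina" criterion of one arcminute of visual acuity (roughly 3438 / PPI inches), and assumes a 19-inch diagonal for the 1280x960 screen, since that's what gives about 84 PPI; the function names are illustrative, not the website's.

```python
import math

ARCMINUTE = math.radians(1 / 60)


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch along the diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


def retina_distance_in(pixels_per_inch: float) -> float:
    """Viewing distance at which one pixel subtends one arcminute."""
    return 1 / (pixels_per_inch * math.tan(ARCMINUTE))


def diagonal_for_ppi(width_px: int, height_px: int, pixels_per_inch: float) -> float:
    """Diagonal size that yields the given pixel density."""
    return math.hypot(width_px, height_px) / pixels_per_inch


def diagonal_for_distance(width_px: int, height_px: int, distance_in: float) -> float:
    """Diagonal whose retina distance equals the given viewing distance."""
    needed_ppi = 1 / (distance_in * math.tan(ARCMINUTE))
    return diagonal_for_ppi(width_px, height_px, needed_ppi)


old_ppi = ppi(1280, 960, 19)
print(f"{old_ppi:.0f} PPI, retina distance {retina_distance_in(old_ppi):.0f} in")
print(f"4k at {old_ppi:.0f} PPI: {diagonal_for_ppi(3840, 2160, old_ppi):.0f} in diagonal")
print(f"4k for a 35 in viewing distance: {diagonal_for_distance(3840, 2160, 35):.0f} in diagonal")
```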

-

Just adding my testimony here:

I have/use Nectar 2 Suite; always as an insert. It's super handy to have around because it's a very convenient way to keep a profile of a "plug-in chain" for individuals that send me vocal tracks. The EQ, compression, de-essing, saturation, etc. all in a single preset saved me a bunch of time yesterday when a church called me for an emergency edit/mix for some Christmas presentation content they needed.

I find the manual pitch correction function to be clumsy, but De-Breath works well. I have only used the Harmony Generator function twice in the past 6 years (I also have Nectar 1), so I can't say much about it.

-

On 12/10/2020 at 4:28 AM, Bill Ruys said:

- RAM is 3200, CL16

- Motherboard is MSI MAG X570 Tomahawk WiFi

- I have the MOTU 896 Mk3 hybrid, so I can use either USB or Firewire - currently using Firewire via a Texas Instruments based PCIe card

- Windows 10 release 20H2

I'm back with two questions:

- Did you happen to run the LatencyMon tool by Resplendence on your former 3900X?

- Can you run LatencyMon for 7 or 10 minutes on this new 5900X?

-

This is hopefully helpful: I have an MR18 that is similar to the XR18 which is functionally similar to your X18 (whew!).

First, check the hardware routing: in the X-Edit app's In/Out panel (pop-up window), there is a 2x11 grid, and the 'USB 1/2' column needs a blue dot in the cell on the 'Main' row. That's what's needed to send CbB outputs 1/2 to the physical main outs of your X18.

As for what you mean by "no audio going to the X18 via the USB lead", check the 'Meter' view in the main X-Edit app to see whether something is coming from CbB or not. I'm not familiar with the tablet app that runs the X18, but checking those two things should get you closer to a solution.

Keep us posted.

-

9 hours ago, Bill Ruys said:

I just replaced my Ryzen 3900X (12 core) with the new 5900X (12 core) and I simply can't believe the results in CbB.

Please tell me a little more:

- What RAM speed/latency and motherboard (e.g., 3200-C16, X570)?

- Are you using the Firewire or the USB connection of the MOTU 896 Mk3?

- Which release of Win10 are you using?

-

You should run XMeters in the taskbar of your computer. I have it configured to show operating system work in orange and application work in the other color. You'll quickly see that a logical CPU core is never dedicated to one or the other, but *very* dynamically mixes the workload.

Another thing is that it's quite interesting to see CbB go all out with Drum Replacement. It's actually quite beautiful to see how evenly loaded all 32 logical cores are during that process.

-

I volunteer my machine for running test projects if that would help the cause.

Feature Request: Keystroke for ProChannel EQ Flyout

in Feedback Loop

I frequently use the ProChannel EQ, play the track for a few seconds, and then invoke the ProChannel EQ again to tweak as needed. Repeat 10 times per track.

I'd like to keep my mouse in the region that I'm editing, and bring up the EQ flyout without having to manually pop it in and out. If this is already possible, please let me know!