Posts posted by Sunshine Dreaming

-

-

On 4/13/2024 at 12:10 AM, msmcleod said:

For example, say your room has a dip at 350Hz or at 800Hz - you think everything sounds thin, so you boost the frequencies around there. However you then play it on another system, and everything sounds muddy or nasal. You've boosted a frequency because you can't hear it properly - not because it's not there, but because your room has EQ'd it out.

When you say the room has "a dip", which frequency range are we talking about here?

-

1 hour ago, CSistine said:

By the way some comments above mention the number of digits for 32-bit float. This is only true for IEEE floats (as used in PCs). There exist other float formats that have a totally different construction!

CbB is Windows-only. So you either use a PC or a mobile ARM device. And x86/x64 and even ARM also use IEEE floats: [..]The ARM floating-point environment is an implementation of the IEEE 754 standard for binary floating-point arithmetic.[..]

Please tell me which other architecture you are referring to in relation to CbB.

-

1 hour ago, Glenn Stanton said:

and in effect, 32-bit is "lossy" because of the float... same reason banks don't use floating point for currency calculations... e.g. https://dzone.com/articles/never-use-float-and-double-for-monetary-calculatio

The mantissa of a 32-bit IEEE single-precision float stores 23 bits (with the implicit leading 1 that gives a 24-bit significand), and the float additionally has a "sign" bit at bit 31. Together that covers the full precision of a 24-bit sample. The 24-bit sample has exactly the same kind of "sign" bit, but at bit 23 rather than bit 31 of the DWORD.

Additionally, a single-precision float has an 8-bit "biased exponent", which allows values bigger than 1.0 and smaller than -1.0 and gives a total (normal) number range of roughly 1.2e-38 to 3.4e+38. Anything smaller in magnitude than that (where biased exponent == 0) would be a denormal, which is avoided because of the speed penalty.

https://en.wikipedia.org/wiki/Single-precision_floating-point_format

[..]All integers with 7 or fewer decimal digits, and any 2^n for a whole number −149 ≤ n ≤ 127, can be converted exactly into an IEEE 754 single-precision floating-point value.[..]

2^24 = 16,777,216 combinations of a 24bit sample

signed range : - 8,388,608 .. + 8,388,607 (these are 7 decimal digits)

However, floats for audio use the mantissa (fraction) part, not a 1:1 conversion of the integers.

[..]1 + 2^(−23) ≈ 1.00000011920928955 (smallest number larger than one)[..]

which is ~1.2e-7 above 1.0. At this (or a similar) level a VST3 host, for example, would set the noise floor to keep the speed advantage (avoiding denormal calculations). From the VST3 SDK docs: [..]The host has the responsibility to clear the input buffers (set to something near zero, like 10e-7, to prevent de-normalization issue)[..]

However, if the level is raised far beyond +/- 1.0, the float's precision becomes lower. Those values would clip completely in a 24-bit sample, though, and that is where data is actually lost.
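The points above can be checked with a few lines of Python. This is only a minimal sketch: the helper names (`to_float32`, `sample24_to_float`, `float_to_sample24`) are made up for illustration, and the scaling by 2^23 is an assumption about how a 24-bit sample is mapped to the -1.0 .. +1.0 range, not a statement about what any particular DAW does internally.

```python
import struct

def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def sample24_to_float(sample: int) -> float:
    """Scale a signed 24-bit sample to the nominal -1.0 .. +1.0 range (assumed mapping)."""
    return to_float32(sample / 8388608.0)  # 8388608 == 2**23

def float_to_sample24(value: float) -> int:
    """Inverse of sample24_to_float."""
    return int(round(value * 8388608.0))

# Signed 24-bit values survive the round trip through float32 unchanged.
edge_cases = [-8388608, -8388607, -1, 0, 1, 12345, 8388606, 8388607]
round_trip_ok = all(float_to_sample24(sample24_to_float(s)) == s for s in edge_cases)

# The smallest float32 step just above 1.0 is 2**-23 (about 1.19e-7).
epsilon_above_one = to_float32(1.0 + 2.0 ** -23) - 1.0

# Above 2**24 neighbouring float32 values are more than 1 apart,
# so 2**24 + 1 cannot be represented and is rounded to 2**24.
precision_lost_above_2_24 = to_float32(16777217.0) == 16777216.0

print(round_trip_ok, epsilon_above_one, precision_lost_above_2_24)
```

So within the +/- 1.0 range nothing of a 24-bit sample is lost, and the coarser steps only show up at magnitudes that a 24-bit integer sample could not hold at all.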

-

3 hours ago, Antonio Soriano said:

Interesting, Confusing and Complicated.

Sunshine have you attemted this?

Can you give an example?

Thanks

I've not fully finished with it yet.

In a PM I could send you some more information, if you wish.

-

On 2/1/2024 at 12:07 PM, msmcleod said:

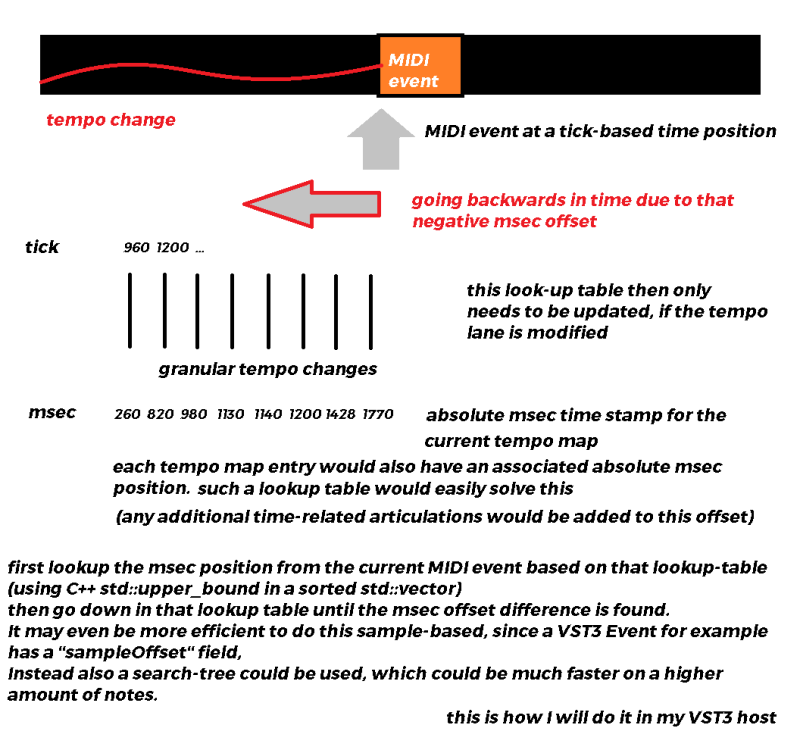

Tempo changes are still discrete jumps, but just at a more granular level. The tempo map is derived from the tempo envelope each time it's edited so it doesn't have to calculate it in real-time.

However, an absolute time offset it's still quite complex to do, due to the way MIDI events are queued up in time ready to play. Part of the queuing process is determining which events to queue and when to queue them. This is all done in batches of musical ticks at the moment. If the delay (negative or otherwise) was specified in milliseconds, this would ultimately have to be converted to ticks - but when tempo changes are in play, the amount of ticks could be different for the start time for searching through what to buffer and the start time of each MIDI event. Getting this wrong could result in either missing notes or notes queued up twice.

As far as it being computationally intensive... this is partially true. Retrieving the tempo at any point in time is a fairly quick process, but does involve a binary search of the tempo map. Doing this for every note may have an adverse affect on engine performance, but then again, it might be fine on faster machines. Not saying it's impossible, but it's not trivial. That part of the code is pretty involved (even more so now that we have articulations).

My suggestion:

In the end, any MIDI event (at least when using VST3 plugins) is a time-based position. Events are applied in a plugin at an absolute sample position, based on the current block position plus the sampleOffset field in the VST3 Event struct. That sampleOffset field itself cannot be used for negative offsets, since it's only valid within the current block size.

Some fine-tuning afterwards is additionally required. Also, some sort of marker should be set in a clip as an easy indicator of where the clip is located within the look-up table. This would additionally increase speed.
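To make the tick/millisecond problem from the quote concrete, here is a rough sketch of a tempo map with a binary search. Everything in it is hypothetical: the function names, the `(tick, bpm)` list layout and `PPQ = 960` are assumptions for illustration and say nothing about how Cakewalk stores its tempo map.

```python
from bisect import bisect_right

PPQ = 960  # assumed ticks per quarter note

def build_tempo_map(changes):
    """changes: list of (tick, bpm), sorted by tick, first entry at tick 0.
    Returns a list of (start_tick, start_seconds, bpm) segments."""
    segments = []
    seconds = 0.0
    for tick, bpm in changes:
        if segments:
            prev_tick, _, prev_bpm = segments[-1]
            seconds += (tick - prev_tick) * 60.0 / (prev_bpm * PPQ)
        segments.append((tick, seconds, bpm))
    return segments

def tick_to_seconds(segments, tick):
    """Binary search for the segment containing `tick`, then interpolate."""
    i = bisect_right([s[0] for s in segments], tick) - 1
    start_tick, start_sec, bpm = segments[max(i, 0)]
    return start_sec + (tick - start_tick) * 60.0 / (bpm * PPQ)

def seconds_to_tick(segments, seconds):
    """Binary search for the segment containing `seconds`, then interpolate."""
    i = bisect_right([s[1] for s in segments], seconds) - 1
    start_tick, start_sec, bpm = segments[max(i, 0)]
    return start_tick + (seconds - start_sec) * bpm * PPQ / 60.0

def shift_event(segments, tick, offset_ms):
    """Move an event by an absolute time offset and return its new tick position."""
    return seconds_to_tick(segments, tick_to_seconds(segments, tick) + offset_ms / 1000.0)

# 120 bpm from tick 0, dropping to 60 bpm at tick 1920 (which is 1.0 s in).
tempo_map = build_tempo_map([(0, 120.0), (1920, 60.0)])
print(shift_event(tempo_map, 2400, -250.0))  # stays in the 60 bpm segment
print(shift_event(tempo_map, 2400, -750.0))  # crosses back into the 120 bpm segment
```

In this example the same event at tick 2400 moves by 240 ticks for -250 ms but by 960 ticks for -750 ms, because the second shift crosses the tempo change. That is the effect described in the quote: the tick distance of a fixed millisecond offset depends on where the event sits in the tempo map.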

-

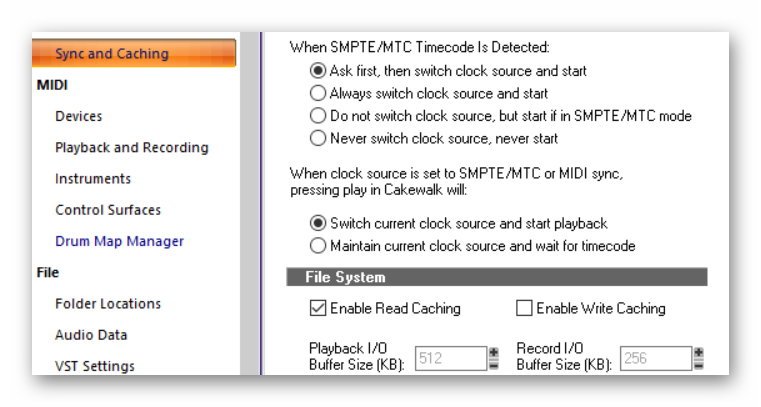

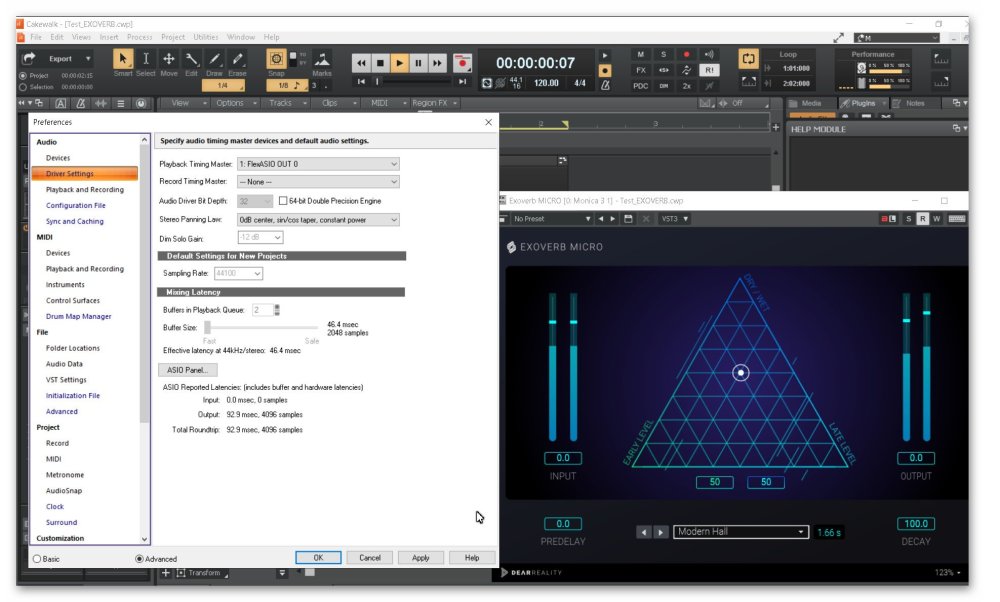

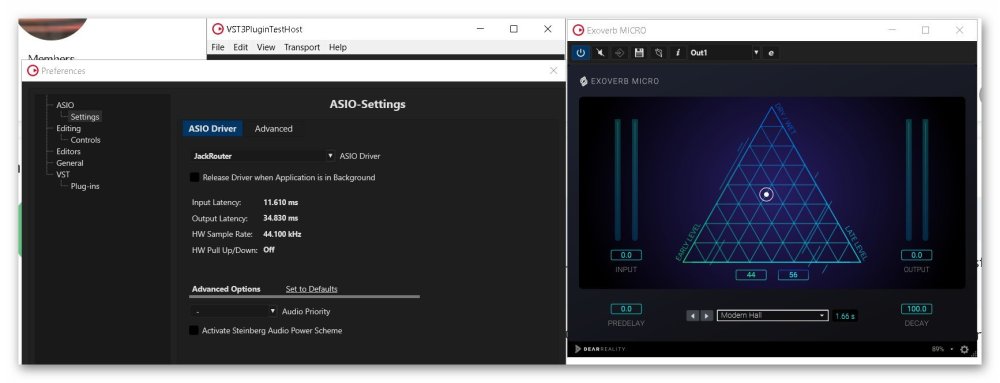

ASIO4ALL sets the buffer size to 512 and the plugin runs correctly, without the need to run an external service.

Rendering to an audio output file succeeds (the plugin no longer silences the track), and other WASAPI players can continue to play. However, live audio output is not configured yet: although the VU meters show the audio level, the sound card does not output any sound.

I think I could find a way to fix that too, but I don't think I need to, because I then found FlexASIO 1.9. The buffer size can be set in a configuration file, "FlexASIO.toml", and WASAPI is used as the backend. (I also installed FlexASIO.GUI; at first the UI did not open on my system, but after another reboot it finally did.) Both the Shared and Exclusive settings are supported. I chose "Shared" and finally it works! A bigger project also plays the same way now as it did on "WASAPI Shared" before. EXOVERB MICRO loads correctly in Cakewalk, with no error message or warning. Even a media player continues its playback while CbB is playing through "FlexASIO". Increasing the block size to 2048 improved the sound quality.

I never do live recording with CbB anyway; for that I use another DAW, and there too a USB microphone does not need an interface. And if I wanted to use an external keyboard, that would also use USB.

This might not be a solution for everybody. Those who record from a non-digital source might need an interface; I would never doubt the experience of those people. But if you only have digital input (like me), or stems, or a USB microphone, plus monitor speakers with their own amplifiers connected via a 3.5 mm cable (and there are good ones) and an external subwoofer, what do you need that additional device for? No energy is wasted on an additional device when WASAPI Shared finally works with this setting.

For those who are interested in how to set it up: by default it uses (the preferred) 882 samples, and the slider in CbB is enabled but the setting is not applied (hmm, I've heard of a similar problem somewhere before...). It can, however, be set in the configuration file. You might need to change the device name to your audio device name, which you can find by running

C:\Program Files\FlexASIO\x64>portaudiodevices.exe

You can test your configuration outside CbB by running

C:\Program Files\FlexASIO\x64>flexasiotest.exe

Here is the C:\Users\<your username>\FlexASIO.toml:

# Use WASAPI as the PortAudio host API backend.

backend = "Windows WASAPI"

bufferSizeSamples = 2048

[input]

# Disable the input. It is strongly recommended to do this if you only want to

# stream audio in one direction.

device = ""

suggestedLatencySeconds = 0.0

wasapiExclusiveMode = false

[output]

device = "Lautsprecher (High Definition Audio Device)"

channels = 2

suggestedLatencySeconds = 0.0

wasapiExclusiveMode = false
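For orientation, the buffer sizes mentioned in this thread translate into the following buffer durations. This is just the arithmetic (samples divided by sample rate); the function name is made up, and the result is the length of one buffer, not a measurement of the total round-trip latency of any driver.

```python
def buffer_duration_ms(buffer_size_samples: int, sample_rate_hz: int) -> float:
    """Length of one audio buffer in milliseconds."""
    return 1000.0 * buffer_size_samples / sample_rate_hz

# Buffer sizes that appear in this thread, at 44.1 kHz.
for size in (441, 512, 882, 2048):
    print(f"{size:5d} samples @ 44100 Hz = {buffer_duration_ms(size, 44100):6.2f} ms")
```

At 44.1 kHz, 441 samples are 10 ms, 882 samples are 20 ms, and the 2048 samples from the configuration above are about 46 ms, which is fine for playback and mixing but would be noticeable for live monitoring.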

Thanks for your help and insights.

-

OK, I got an answer from the plugin vendor's support. They said I should try out ASIO4ALL, and that this is a known issue with Cakewalk.

I had ASIOLink installed, but I don't know how to use it.

So I will try out ASIO4ALL.

-

2 hours ago, Starship Krupa said:

Why does this discussion continue after this point?

You got it to work.

Now you're claiming that there's a bug in Cakewalk and someone else is suggesting that your onboard hardware CODEC may be "garbage."

WTF?

Cakewalk and Exoverb Micro work together when you configure Cakewalk to use WASAPI Exclusive, which is the preferred driver mode for onboard sound. What is there to say beyond that point?

You're right, the hardware side is outside the scope of what I said. And it sort of works, with some caveats (Exclusive only, and with the Jack Audio Router running in addition).

WASAPI Shared is not limited to a certain sample count, so there is no need for the slider to be grayed out in Cakewalk. Call it whatever you want; to me that is an issue or a bug, and enabling that slider would solve it. All I said is that the restriction is not based on a limitation of Shared WASAPI, since I can successfully use different block sizes in different WASAPI apps at the same time.

And I just found this older post, where the same problem was apparently raised as well:

-

7 minutes ago, Byron Dickens said:

Say what?

This topic is not about interfaces; it is about a bug in CbB which could easily be corrected.

Wasting resources on devices that people (like me) do not need is never a good idea, especially when the cause is a software bug that I can simply avoid in my own VST3 host...

-

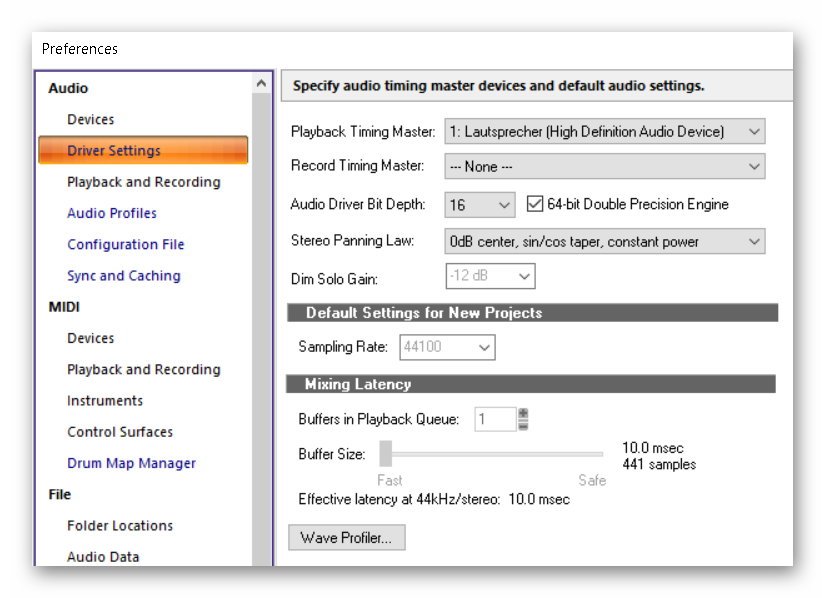

When calling IAudioClient::GetDevicePeriod in the "RenderSharedEventDriven" sample from the Windows SDK, it returns:

defaultDevicePeriod: 100000

minimumDevicePeriod: 26667

devicePeriodInMSEC = defaultDevicePeriod / (10000.0); = 10 msec, which leads to 441 samples at 44.1 kHz

devicePeriodInMSEC = minimumDevicePeriod / (10000.0); = 2.66.. msec, which leads to 117 samples at 44.1 kHz
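The conversion can be written down in a few lines. GetDevicePeriod reports its values as REFERENCE_TIME, i.e. in 100-nanosecond units; the function names below are made up for this sketch, and rounding down to whole frames is my assumption to match the 117 above.

```python
HNS_PER_SECOND = 10_000_000  # REFERENCE_TIME counts 100-nanosecond units

def period_to_ms(period_hns: int) -> float:
    """Convert a device period from 100-ns units to milliseconds."""
    return period_hns / 10_000.0

def period_to_frames(period_hns: int, sample_rate_hz: int) -> int:
    """Number of whole sample frames that fit into one device period."""
    return period_hns * sample_rate_hz // HNS_PER_SECOND

default_period = 100_000  # values reported on my system
minimum_period = 26_667

for rate in (44_100, 48_000):
    print(rate,
          period_to_frames(default_period, rate),
          period_to_frames(minimum_period, rate))
```

With the default period of 10 ms this gives 441 frames at 44.1 kHz and 480 frames at 48 kHz, which matches the values Cakewalk shows in WASAPI Shared mode.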

-

On 11/8/2023 at 12:35 AM, John Vere said:

You could try changing your on board audio settings in Windows Sound Settings. All my on board audio can be set to use 48/24 which is better for compatibility with movies etc.

Then if you use WASAPI exclusive mode you can change your buffer settings.

This is possibly a good reason to purchase an audio interface which would not be as limited as using on board audio.

Thank you for your answer. An audio interface is not an option. And Cakewalk was already on WASAPI (Shared), where it uses 441 samples.

Switching to MME also failed. Switching Cakewalk to ASIO now works: it then uses 512 samples (Mixing Latency)! In that mode it uses the JackAudio Router (which must be running externally), without any changes to the Windows Sound Settings.

Setting Cakewalk to Exclusive WASAPI does indeed set it to 512 samples, and it works. Thank you!

But setting Cakewalk back to Shared WASAPI unfortunately also sets it back to 441 samples. I can change the sample rate, but it stays at 441 samples even at 48000 Hz...

The setting for Shared WASAPI seems to be wrong; why would it always switch back to 441 samples?

As you suggest in your video, I've set 24-bit 48000 Hz in the Windows Sound settings for my output device. It makes no difference. After restarting CbB, the Shared WASAPI mode now shows 480 samples... (not a power of 2)

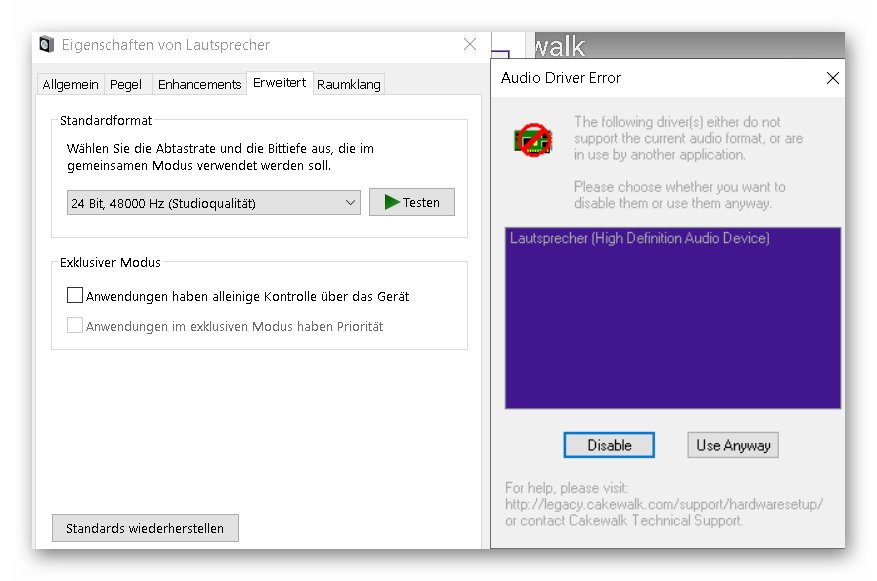

After following your suggestion in the video to deactivate "Allow applications to take exclusive control of this device", CbB gives this error:

and if I click "use anyway", there is no sound output...

-

11 minutes ago, Xoo said:

441 is indeed not a power of 2 (2, 4, 8, 16, 32, 64, 128, 256, 512, 1024...). It's expecting to be used with an ASIO driver which (almost always) has a power of 2 buffer size.

Thank you for your answer.

I just checked it with the VSTPluginTestHost from the VST3 SDK, using the Steinberg ASIO (built-in driver). It gives that error message there as well.

But when I switch that test host to "Jack Audio Router", it works without complaining, just as in my own VST3 host, which is built from the same SDK.

-

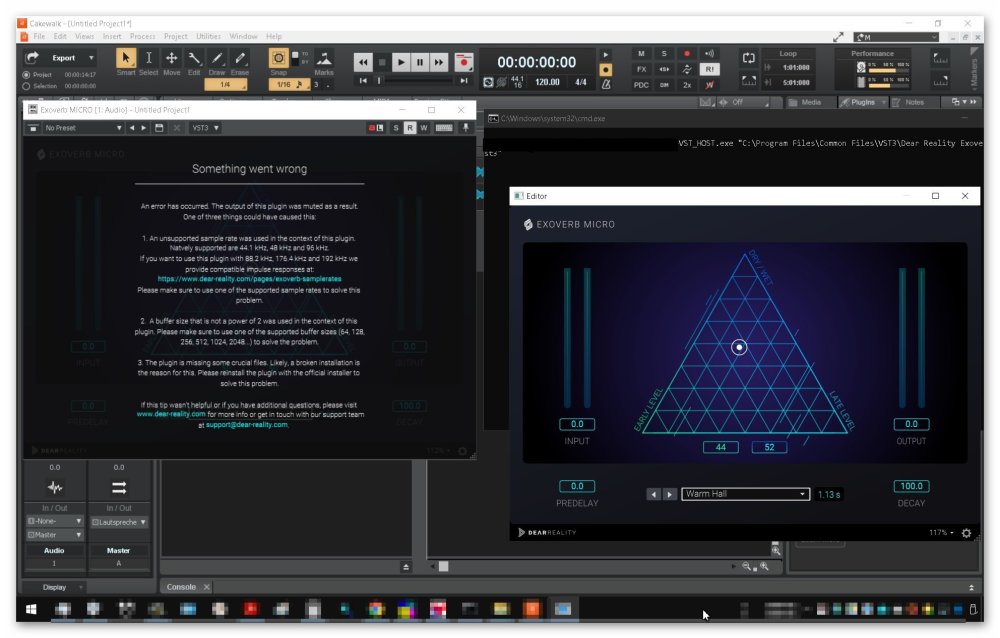

I installed EXOVERB MICRO and activated it. In Cakewalk it then shows an error screen (left in the screenshot) complaining about some settings, although they all seem to be met!? The sample rate in my project is 44.1 kHz, and for the buffer sizes under Audio->Sync & Caching->File System->Playback I/O I tried 8, 256 and 512 in the settings menu, always with the same error.

But when I open it with my own VST3 host it works fine (see right in the screenshot):

The plugin uses VST3 SDK 3.7.2.

My own VST3 host (using VST SDK 3.7.9) used a buffer size of 512 samples.

I use Cakewalk 2023.09 (075); Windows 10 Pro 22H2 (build 19045.2846).

I have already sent the link to this thread to Dear Reality's support.

Mixing Latency is 441 samples; could that be the reason? EXOVERB MICRO expects a buffer size that is a power of 2, and 441 is not such a size. I also installed the "EXOVERB MICRO Additional Samplerate Support". However, I use a 44.1 kHz sample rate, so I did not expect that additional sample rate package to change anything, and it didn't.
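A quick way to check which of the buffer sizes seen here are powers of 2. The function names are just for this sketch; it illustrates the arithmetic and makes no claim about how the plugin itself validates the buffer size.

```python
def is_power_of_two(n: int) -> bool:
    """True if n is 1, 2, 4, 8, ... (exactly one bit set)."""
    return n > 0 and (n & (n - 1)) == 0

def next_power_of_two(n: int) -> int:
    """Smallest power of two that is greater than or equal to n."""
    return 1 if n < 1 else 1 << (n - 1).bit_length()

for size in (441, 480, 512, 882, 2048):
    print(size, is_power_of_two(size), next_power_of_two(size))
```

441, 480 and 882 all fail the check, while 512 and 2048 pass, which fits the observation that the plugin only complains at 441 samples.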

I cannot change that setting; it's grayed out:

-

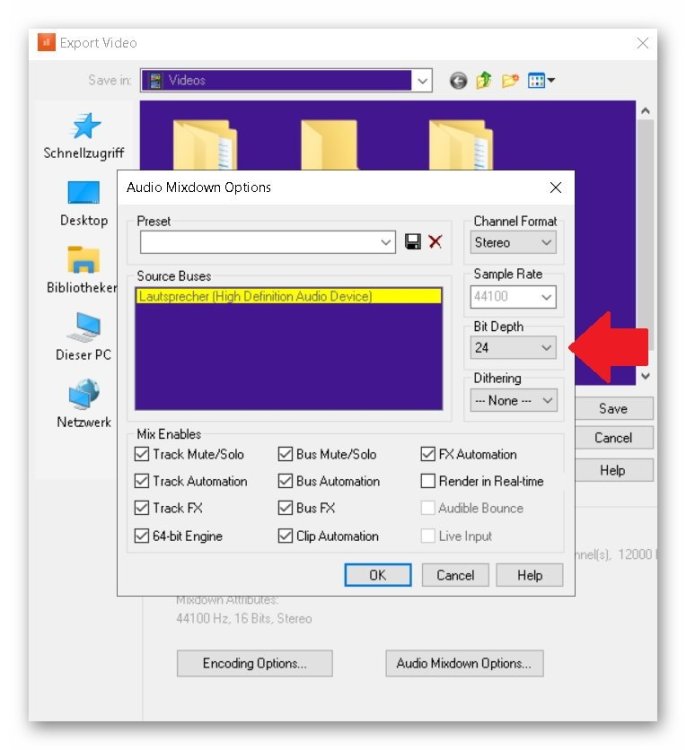

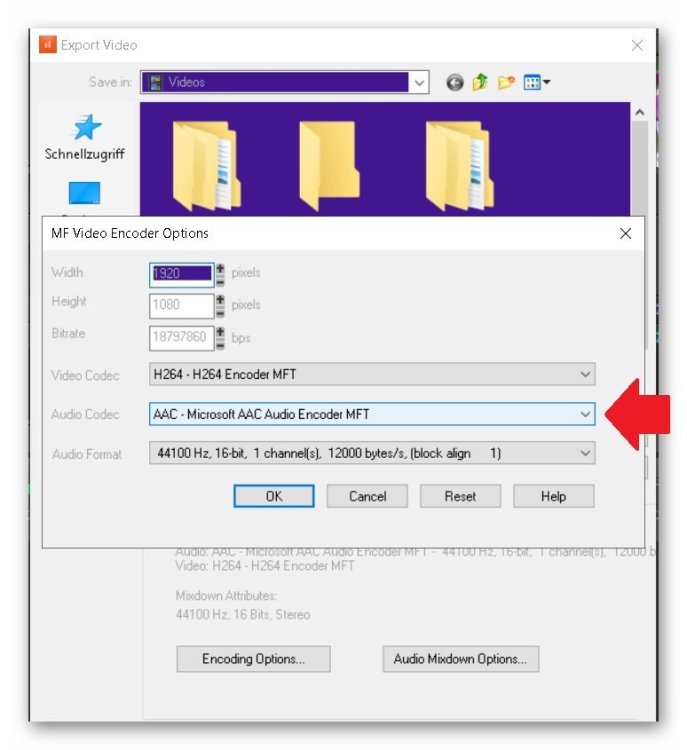

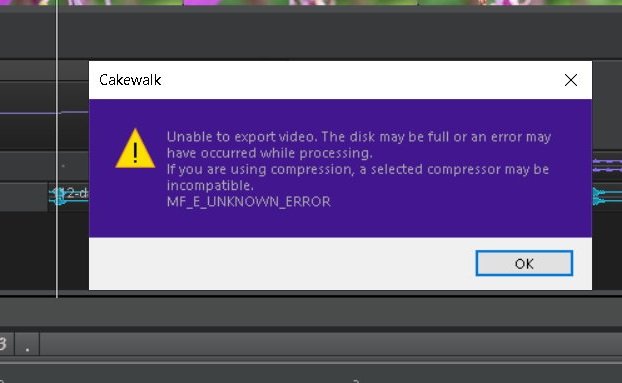

I imported a .MOV video from my iPhone, added some synth notes in another channel in CbB and exported that as an .MP4 file (standard settings), and it works perfectly fine. What input file format do you use? On which Windows version?

I can reproduce that error when I choose 24-bit in the "audio mixdown options" and use AAC in the "Encoding Options".

With MP3, exporting as 24-bit works fine. 16-bit AAC also works fine.

And it's clear why. Look at the "AAC Encoder" section of the MF implementation:

https://learn.microsoft.com/de-de/windows/win32/medfound/aac-encoder

MF_MT_AUDIO_BITS_PER_SAMPLE must be 16.

MF_MT_AUDIO_SAMPLES_PER_SECOND must be 44100 or 48000;

So in the "audio mixdown options" dialog, selecting anything other than 16 should be impossible when "AAC (Microsoft MFT)" is selected in "Encoding Options". Or a message box should appear in this situation to indicate that requirement when attempting anything other than 16-bit. The same warning should appear when attempting to use any sample rate other than 44100 or 48000 for "AAC (Microsoft MFT)". And when changing to "AAC (Microsoft MFT)" in "Encoding Options", the settings in "audio mixdown options" could be adjusted automatically to the valid ones (those nearest to the selection), or again a message box could point this out. Developers can correct that easily in minutes.

Or, instead, the bit depth / sample rate could be converted internally to a supported setting automatically (maybe with an additional warning message box about it).

Exporting with "MPEG - Microsoft MpeG-2- Audio Encoder MFT" also works with 24-bit on my machine, but I cannot play back the file, probably because of the missing codec.

But here, too, some restrictions on the sample rate should be added in the dialog. See:

https://learn.microsoft.com/de-de/windows/win32/medfound/mpeg-2-audio-encoder

MF_MT_AUDIO_SAMPLES_PER_SECOND only supports 32000, 44100 and 48000, and in the case of MPEG-2 LSF also 16000, 22050 and 24000. Any other sample rate is not supported.
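The kind of check I mean could look roughly like this. It is only a sketch of the dialog logic: the function names are invented, the allowed values are the ones listed above, and nothing here calls Media Foundation or reflects Cakewalk's actual code.

```python
# Allowed values as listed above for the two Media Foundation encoders.
AAC_BIT_DEPTHS = (16,)
AAC_SAMPLE_RATES = (44100, 48000)
MPEG2_SAMPLE_RATES = (32000, 44100, 48000)
MPEG2_LSF_SAMPLE_RATES = (16000, 22050, 24000)

def nearest(value, allowed):
    """Pick the allowed value closest to the requested one."""
    return min(allowed, key=lambda a: abs(a - value))

def check_aac(bit_depth, sample_rate):
    """Return a list of warnings for settings the AAC encoder would reject."""
    problems = []
    if bit_depth not in AAC_BIT_DEPTHS:
        problems.append(f"AAC requires 16-bit, got {bit_depth}-bit")
    if sample_rate not in AAC_SAMPLE_RATES:
        problems.append(f"AAC requires 44100 or 48000 Hz, got {sample_rate} Hz")
    return problems

def adjust_for_aac(bit_depth, sample_rate):
    """Snap the mixdown settings to the nearest values AAC accepts."""
    return nearest(bit_depth, AAC_BIT_DEPTHS), nearest(sample_rate, AAC_SAMPLE_RATES)

def mpeg2_rate_supported(sample_rate, lsf=False):
    """Sample-rate check for the MPEG-2 audio encoder, optionally with LSF rates."""
    allowed = MPEG2_SAMPLE_RATES + (MPEG2_LSF_SAMPLE_RATES if lsf else ())
    return sample_rate in allowed

print(check_aac(24, 44100))       # the case that fails on export
print(adjust_for_aac(24, 96000))  # nearest valid settings
```

The dialog could either show the warnings from `check_aac` or silently apply `adjust_for_aac` when the encoder is switched to AAC.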

-

On 10/24/2023 at 9:38 AM, sjoens said:

Are you on the latest update? It's broken in 2022.11 as well.

Yes, I'm on 2023.09 (075), and I had not knowingly encountered this situation before 2023.09.

-

8 hours ago, gustabo said:

Is there a chance you updated the plugin after it was first frozen?

This may actually be the real reason! Thank you!

I opened a new project, and after adding the TAL Bassline plugin (incl. all the FX) there, freezing and unfreezing works fine.

But I always assumed that the CLSID of a plugin never changes between versions. I often had the case that Cakewalk still found a plugin although its .dll/.vst file had been moved. So if Cakewalk finds it based on the CLSID, an update normally would not change that ID, would it?

A few weeks ago I had a total system crash and had to rebuild all configurations.

In the old .pgl file (from before the crash) I now found Plugin CLSID="{141AC902-3837-3567-5441-4C2D42417E31}"

The current plugin has {DA906672-7AD1-35CE-5B14-6FBBA844C418}

Normally Cakewalk should then have given a warning on loading that a plugin was not found.

But there was nothing like this; when you open one of the projects in the reproducer, no warning occurs.

What is a bit strange is that, although Cakewalk does not "know" the new plugin because of the different CLSID, it crashes with the message that the exception occurred in that plugin...

-

Create a track 1 (e.g. using TTS-1), put some MIDI notes in it, and then add a Bus "D" (which outputs to Master).

Then set the output of track 1 to Bus "D".

Then SOLO Bus "D".

Play back: you hear the output of TTS-1.

Then freeze track 1.

=> The created waveform is pure silence, although it's routed to Bus "D", which is soloed, and although playback works for it...

See in this example project:

-

1 hour ago, OutrageProductions said:

Try this experiment:

Bounce (NOT freeze) the audio from that track out to another audio track, preferably IRT, then Archive the offending track/VST and test again.

Thanks for your answer.

Yes, that would work.

However, using freeze/unfreeze is much more convenient.

Deactivating the FX would not reduce the CPU usage, and CPU usage is the reason I want to freeze the track.

I simply re-designed another track with different plug-ins and now use that instead.

-

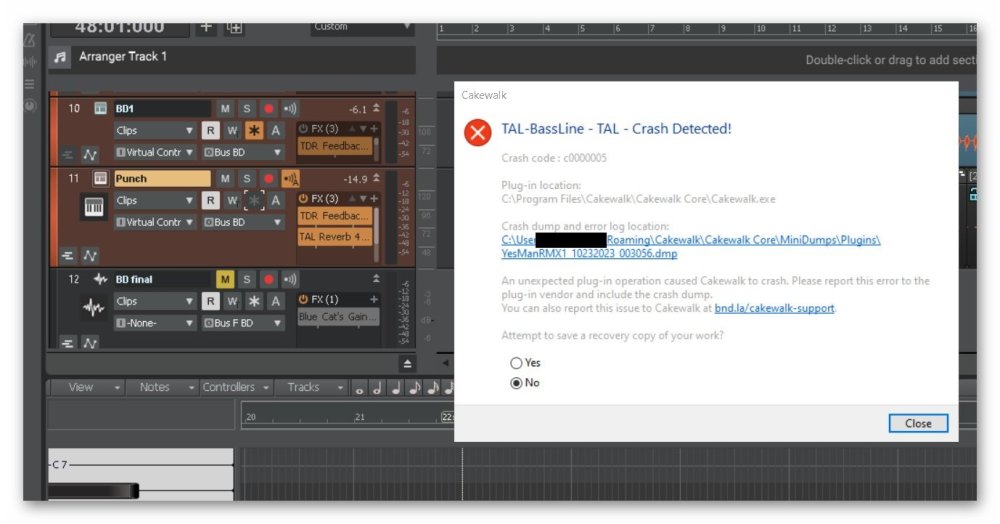

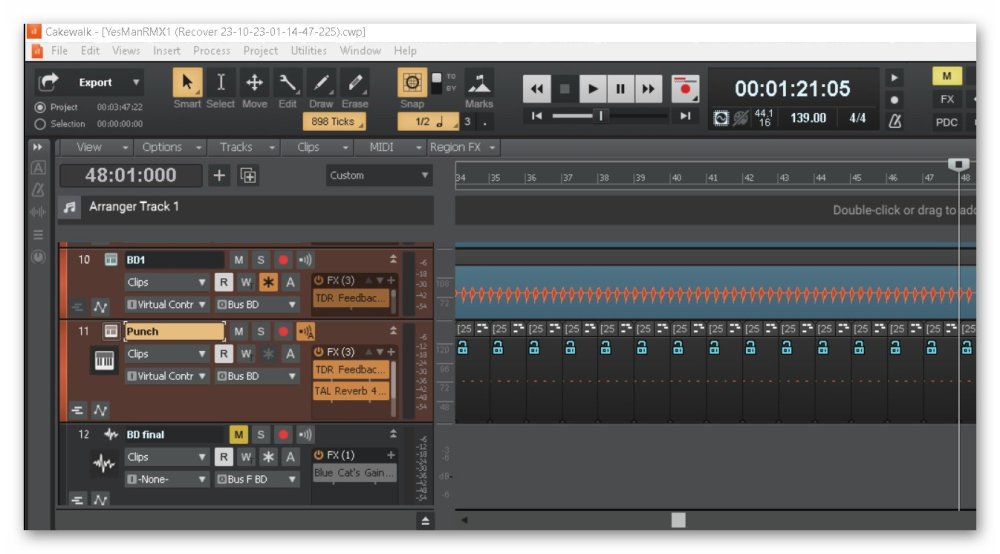

In a somewhat complex project, I have some frozen tracks.

Unfreezing a specific one (called "Punch" in my project), crashes "TAL Bassline" (https://tal-software.com/products/tal-bassline) of that track.

When opening the just created recovery project, the track is unfrozen. But when you click on the instrument, it does not open. And no audio output. (similar to the problem I already reported in my other post).

Since this happened now several times always during unfreezing on the same track, it seems not to be a random problem. That frozen track should be part of an internal template. So unfreezing at some point will occur over and over again in the future.

Here the crash:

and here the recovered project:

I created a test reproducer project, Cakewalk_Reproducer2.zip (attached), which contains only the "Punch" track and one other track. When loading that project and unfreezing the "Punch" track, Cakewalk either reports a crash message for the plugin or silently exits. Removing that other track caused another issue: the "freeze" button is then not highlighted after reloading the project. Examples are in the ZIP.

Cakewalk_Reproducer2.zip has 5 CWP files:

\Cakewalk_Reproducer\YesManRMX1_test_reproducer.cwp

=> At first I thought the reason was another track, before I realized that the plugin was used by both tracks

\Cakewalk_Reproducer\YesManRMX1_test_reproducer2.cwp

=> I removed the other track; however, the freeze button is not accessible although the track is visibly shown as frozen, so unfreezing is not possible

\Cakewalk_Reproducer\YesManRMX1_test_reproducer3.cwp

=> I removed some effects from the other track to simplify it; the freeze button is still shown

\Cakewalk_Reproducer\YesManRMX1_test_reproducer4.cwp

=> I replaced the other track with TTS-1, and the freeze button is still shown

\Cakewalk_Reproducer\YesManRMX1_test_reproducer4 (Recover 23-10-23-02-21-58-804).cwp

=> This is the recovery project Cakewalk built. When loading it, the freeze button is not highlighted and the MIDI audio blocks seem to be OK, but clicking the instrument button has no effect, and playback gives nothing. The plug-in itself works fine; adding it to a new track works fine. I use the 64-bit VST2 version of the plug-in.

----

NOTE: To be able to upload it here, I edited "\Audio\Punch (Abgemischt, 6).wav" so that it is completely silent. That way it is much smaller after compression, although it is about 30 MB uncompressed, so it fits into the attachment of this post.

The only current workaround I see is simply not to freeze tracks with that plug-in. But is there another alternative?

Fortunately, deleting that one track in the project does not crash. So replacing it with another plug-in may solve it as a workaround.

-

Thank you for your information.

I want the original preview capture, but the project preview ONLY, without any unrelated components that just happen to be on the screen.

It is a bug when the app captures anything unrelated on screen.

-

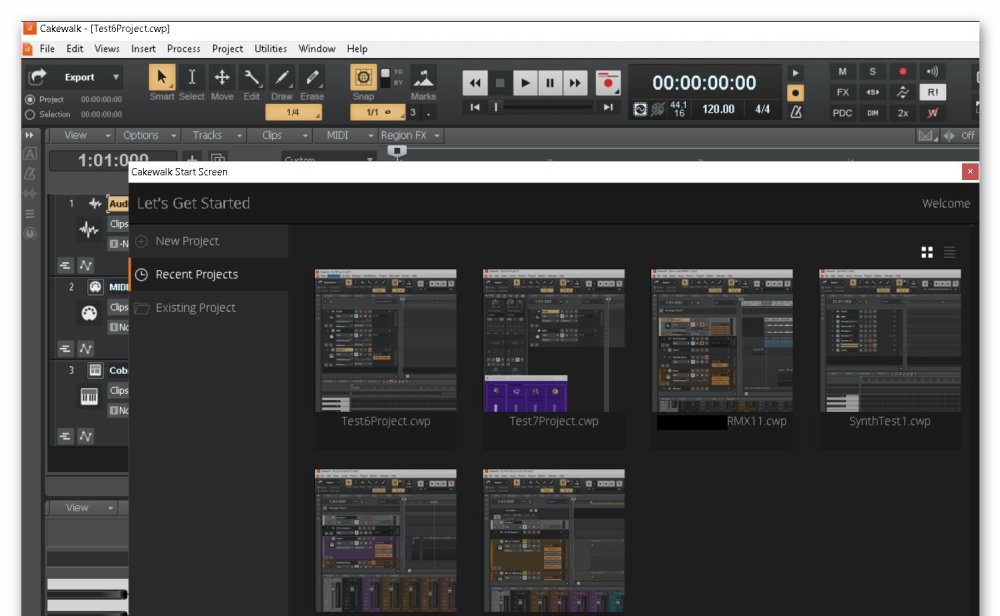

When another app (which is topmost) is running at the same time as "Cakewalk by BandLab", parts of that other app are sometimes captured and visible in the preview images within the "Cakewalk Start Screen".

This issue was happening before version 2023.09

In the screenshot you see the "Windows Mixer" in front of the preview image.

I used a third-party tool to make it always topmost, which does more or less something like SetWindowPos(hWnd, HWND_TOPMOST ...)

FLEXASIO - EXPERIENCE

in Cakewalk Sonar

Posted · Edited by Sunshine Dreaming

If you can use "Visual Studio Community 2022" and understand C++ you can build WASAPI rendering/capturing engine samples yourself:

https://github.com/microsoft/Windows-classic-samples/tree/main/Samples/Win7Samples/multimedia/audio/RenderSharedEventDriven

https://github.com/microsoft/Windows-classic-samples/tree/main/Samples/Win7Samples/multimedia/audio/CaptureSharedEventDriven

Most of the source code is self-explanatory if you are familiar with programming. You can run your own tests to get latency and other data.